URL Parameters and How They Impact SEO

Although they are an invaluable asset in the hands of seasoned SEO professionals, query strings often present serious challenges for your website rankings.

In this guide, we'll share the most common SEO issues to watch out for when working with URL parameters.

What Are URL Parameters?

URL parameters (also known as “query strings” or “URL query parameters”) are elements added to your URLs to help you filter and organize content or track information on your website.

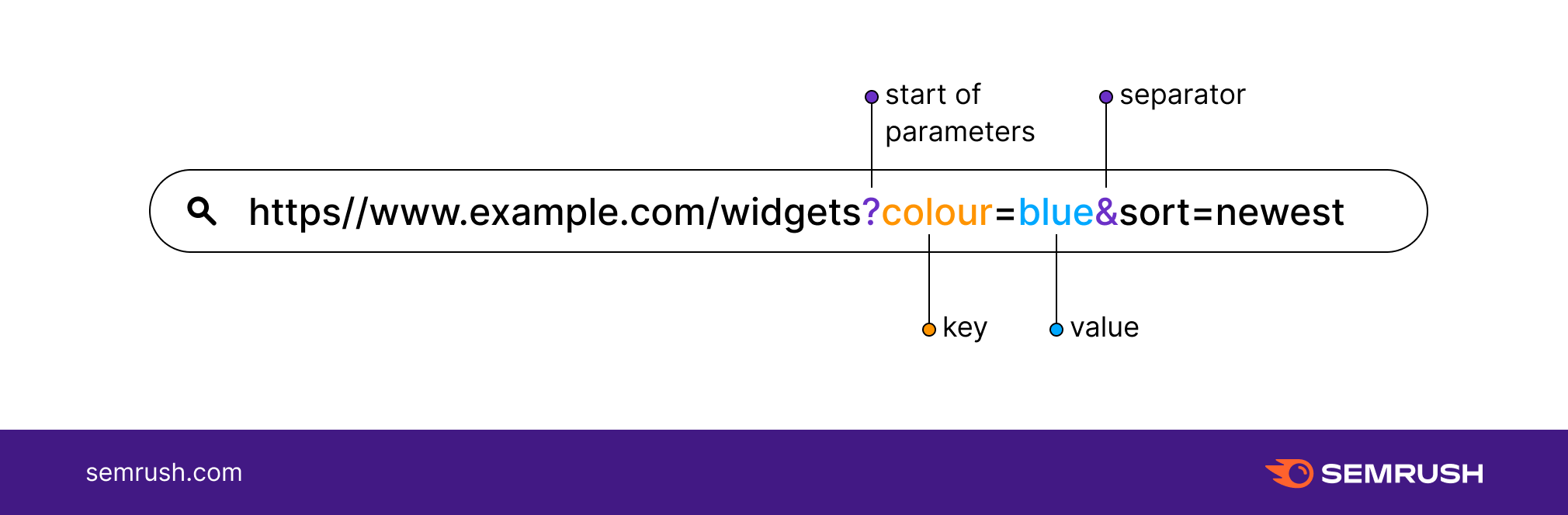

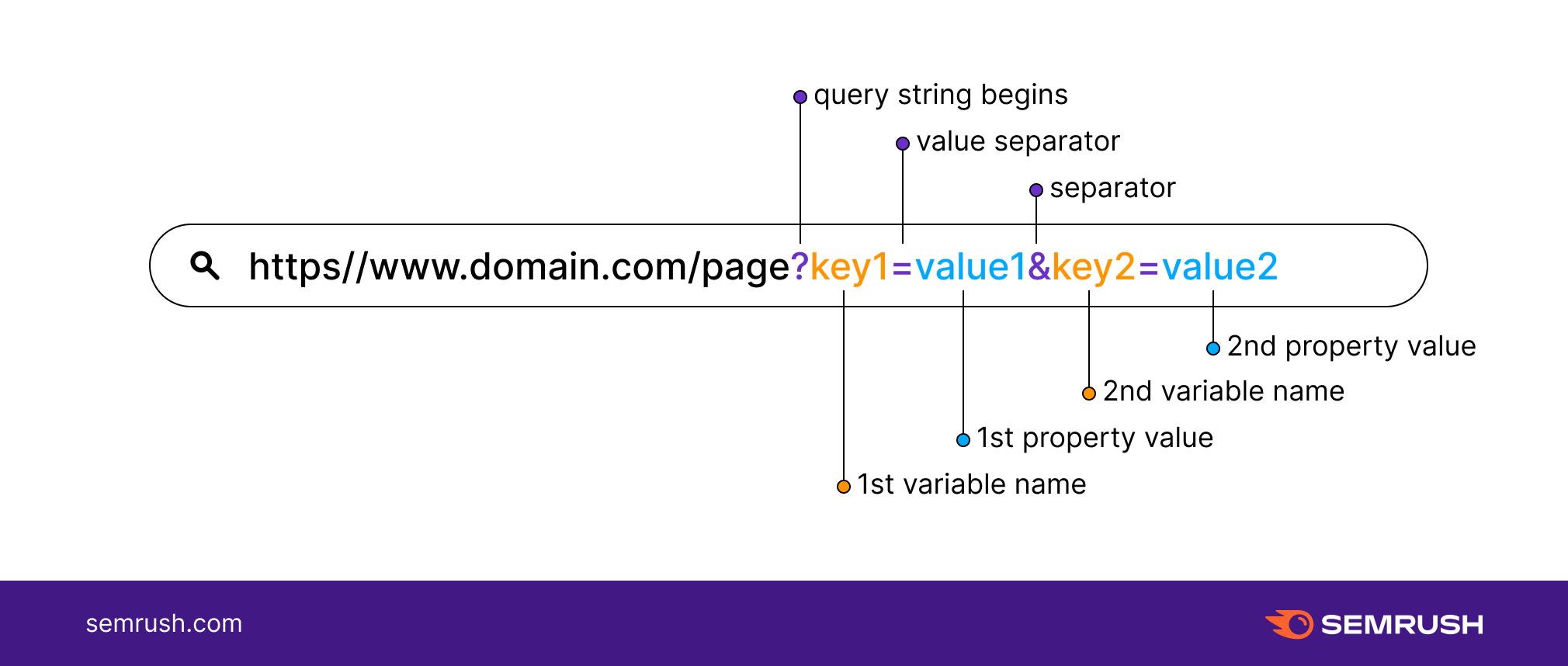

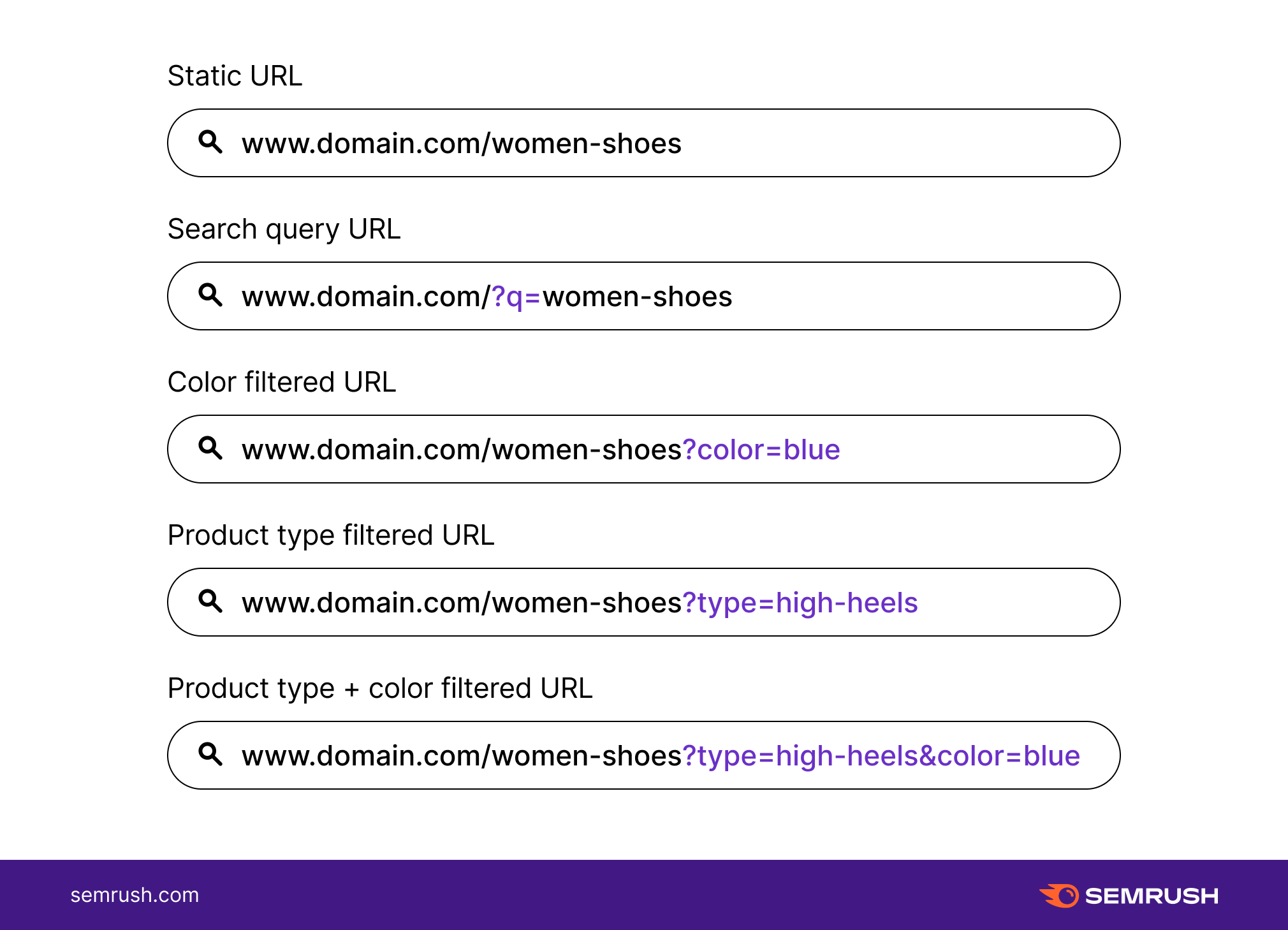

To identify a URL parameter, refer to the portion of the URL that comes after a question mark (?). URL parameters are made of a key and a value, separated by an equal sign (=). Multiple parameters are each then separated by an ampersand (&).

A URL string with parameters looks like this:

https://www.domain.com/page?key1=value1&key2=value2

Key1: first variable name
Key2: second variable name
Value1: first property value
Value2: second property value
? : query string begins
= : value separator
& : parameter separator
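To make the anatomy above concrete, here is a minimal sketch that splits the example URL into its parts using Python's standard library. The URL is the placeholder from this article, not a real endpoint.

```python
from urllib.parse import urlsplit, parse_qsl

url = "https://www.domain.com/page?key1=value1&key2=value2"

parts = urlsplit(url)
# parts.query holds everything after the "?" (and before any "#fragment").
params = parse_qsl(parts.query)

print(parts.path)   # /page
print(parts.query)  # key1=value1&key2=value2
print(params)       # [('key1', 'value1'), ('key2', 'value2')]
```

Each tuple in `params` is one key and value pair: the "&" separated the pairs and the "=" separated each key from its value.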

How to Use URL Parameters (with Examples)

URL parameters are commonly used to sort content on a page, making it easier for users to navigate products in an online store. These query strings allow users to order a page according to specific filters and to view only a set amount of items per page.

Tracking parameters are equally common in query strings. Digital marketers often use them to monitor where traffic comes from, so they can measure the performance of a social strategy, ad campaign, or newsletter.

How Do URL Parameters Work?

According to Google Developers, there are two types of URL parameters:

1. Content-modifying parameters (active): parameters that will modify the content displayed on the page

e.g. to send a user directly to a specific product called ‘xyz’: http://domain.com?productid=xyz

2. Tracking parameters (passive) for advanced tracking: parameters that will pass information — i.e. which network it came from, which campaign or ad group etc. — but won’t change the content on the page.

e.g. to track traffic from your newsletter: https://www.domain.com/?utm_source=newsletter&utm_medium=email

e.g. to collect campaign data with custom URLs: https://www.domain.com/?utm_source=twitter&utm_medium=tweet&utm_campaign=summer-sale
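Tracking URLs like these are usually generated rather than typed by hand. The sketch below shows one way to append UTM parameters with Python's standard library; the helper name `add_tracking` is hypothetical and only illustrates the idea.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit

def add_tracking(url, source, medium, campaign=None):
    """Append UTM tracking parameters to a URL (hypothetical helper)."""
    parts = urlsplit(url)
    params = {"utm_source": source, "utm_medium": medium}
    if campaign:
        params["utm_campaign"] = campaign
    query = urlencode(params)
    # Keep any parameters the URL already carries.
    if parts.query:
        query = parts.query + "&" + query
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))

print(add_tracking("https://www.domain.com/", "newsletter", "email"))
print(add_tracking("https://www.domain.com/", "twitter", "tweet", "summer-sale"))
```

Because these parameters are passive, every URL the helper produces serves the same content as the original page.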

It might seem fairly simple to manage, but there is a correct and an incorrect way to use URL parameters, which we’ll discuss shortly after some examples.

URL Query String Examples

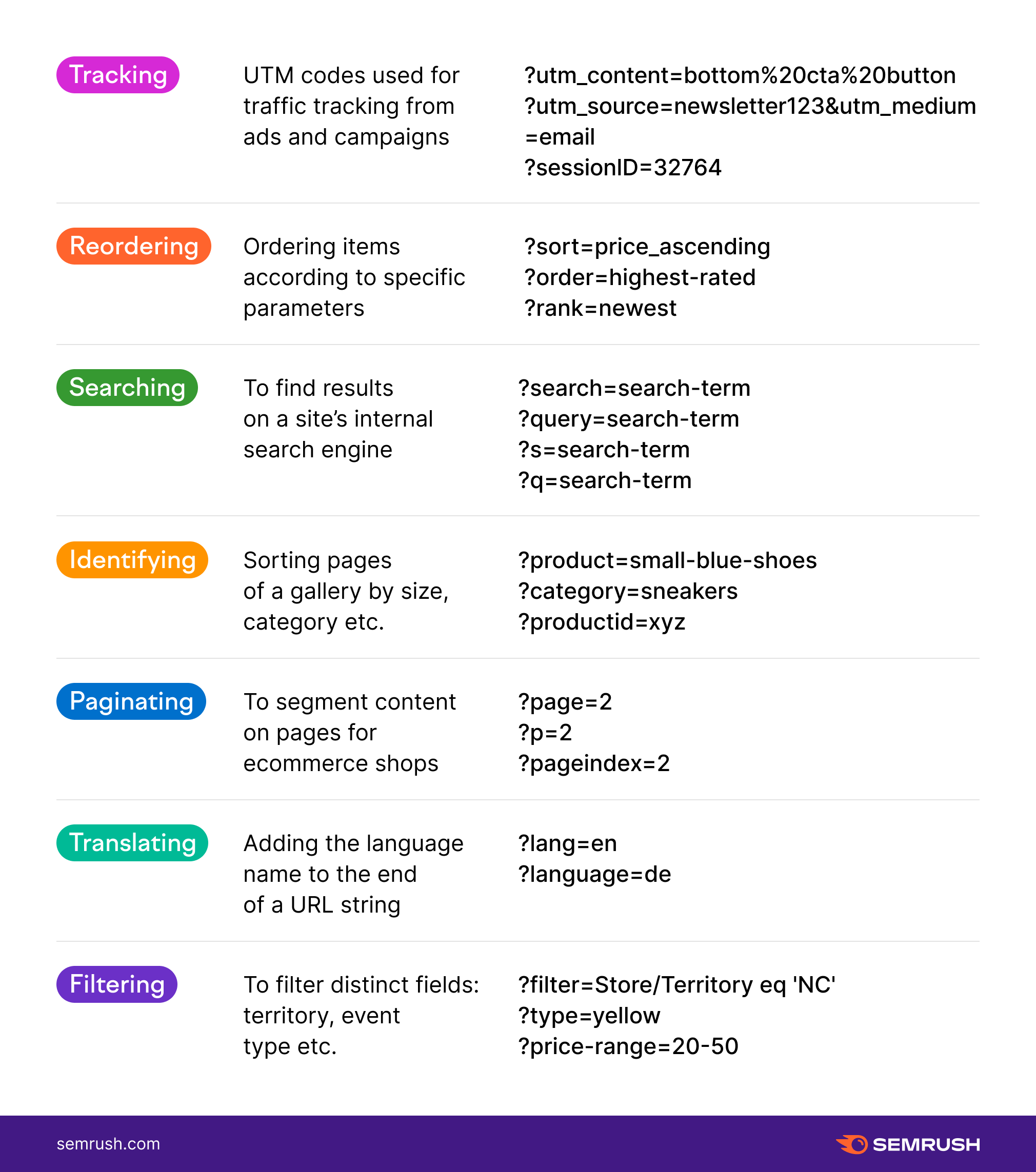

Common uses for URL parameters include:

When Do URL Parameters Become an SEO Issue?

Most SEO advice on URL structure suggests avoiding URL parameters as much as possible. However useful URL parameters might be, they tend to slow down web crawlers.

Poorly structured, passive URL parameters that do not change the content on the page can create endless URLs with non-unique content.

The most common SEO issues caused by URL parameters are:

1. Duplicate content: Since every URL is treated by search engines as an independent page, multiple versions of the same page created by a URL parameter might be considered duplicate content. This is because a page reordered according to a URL parameter is often very similar to the original page, while some parameters might return the exact same content as the original.

2. Loss in crawl budget: Keeping a simple URL structure is part of the basics for URL optimization. Complex URLs with multiple parameters create many different URLs that point to identical (or similar) content. According to Google Developers, crawlers might decide to avoid “wasting” bandwidth indexing all content on the website, mark it as low-quality and move on to the next one.

3. Keyword cannibalization: Filtered versions of the original URL target the same keyword group. This leads to various pages competing for the same rankings, which may lead crawlers to decide that the filtered pages do not add any real value for the users.

4. Diluted ranking signals: With multiple URLs pointing to the same content, links and social shares might point to any parameterized version of the page. This can further confuse crawlers, which may not be able to tell which of the competing pages should rank for the search query.

5. Poor URL readability: When optimizing URL structure, we want the URL to be straightforward and understandable. A long string of code and numbers hardly fits the bill. A parameterized URL is virtually unreadable for users. When displayed in the SERPs or in a newsletter or on social media, the parameterized URL looks spammy and untrustworthy, making it less likely for users to click on and share the page.
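The duplication described in the list above can be illustrated with a short sketch that groups URLs by what remains after the passive parameters are removed. The set `PASSIVE_PARAMS` and the sample URLs are assumptions made for this example; on a real site you would need to decide which parameters are truly passive.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumption: on this hypothetical site, these parameters never change page content.
PASSIVE_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}

def strip_passive(url):
    """Return the URL with passive (tracking) parameters removed."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k not in PASSIVE_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

urls = [
    "https://www.domain.com/shoes?utm_source=newsletter&utm_medium=email",
    "https://www.domain.com/shoes?utm_source=twitter",
    "https://www.domain.com/shoes?color=blue&utm_source=twitter",
    "https://www.domain.com/shoes",
]

# Group every crawled variant under the URL whose content it actually shows.
groups = {}
for u in urls:
    groups.setdefault(strip_passive(u), []).append(u)

for page, variants in groups.items():
    print(page, len(variants))
```

In this sample, three of the four URLs collapse to the same page, which is the situation a crawler sees as duplicate content.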

How to Manage URL Parameters for Good SEO

The majority of the aforementioned SEO issues point to one main cause: crawling and indexing all parameterized URLs. But thankfully, webmasters are not powerless against the endless creation of new URLs via parameters.

At the core of good URL parameter handling, we find proper tagging.

Please note: SEO issues arise when URLs containing parameters display duplicate, non-unique content, i.e. those generated by passive URL parameters. These links — and only these links — should not be indexed.

Check Your Crawl Budget

Your crawl budget is the number of pages bots will crawl on your site before moving on to the next one. Every website has a different crawl budget, and you should always make sure yours is not being wasted.

Unfortunately, having many crawlable, low-value URLs — such as parameterized URLs created from faceted navigations — is a waste of the crawl budget.

Consistent Internal Linking

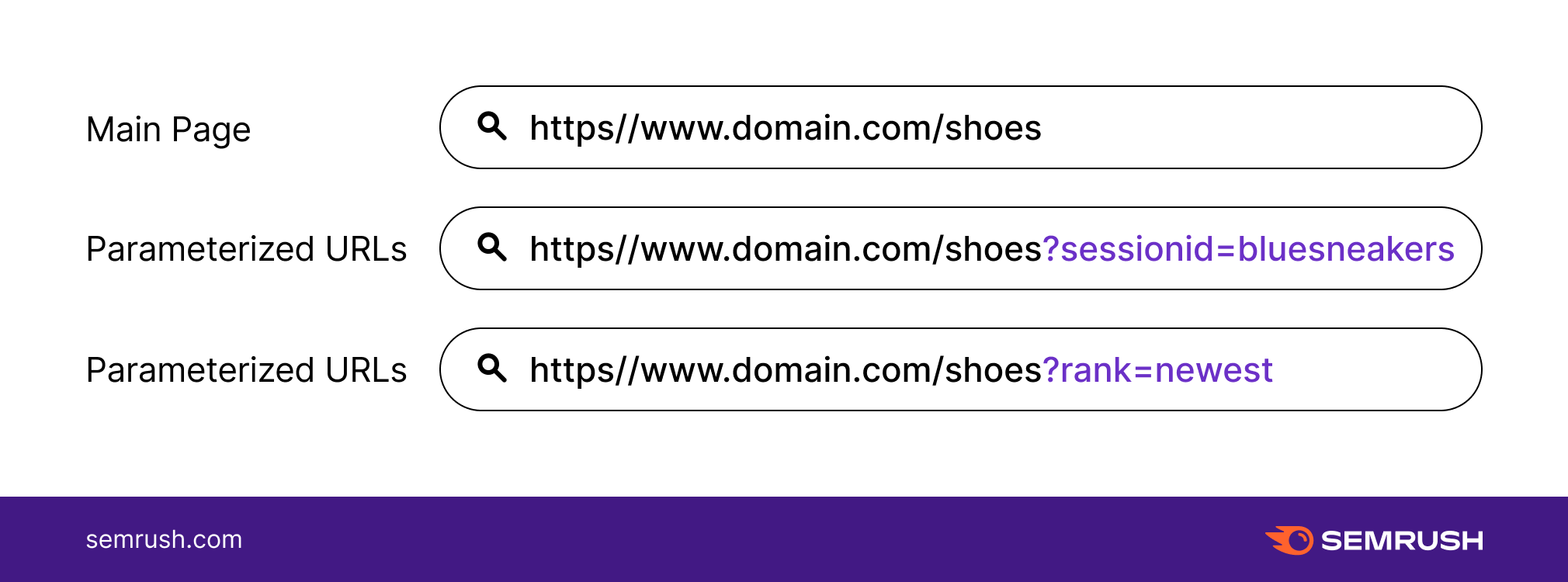

If your website has many parameter-based URLs, it is important to signal to crawlers which pages not to index and to consistently link to the static, non-parameterized page.

For example, here are a few parameterized URLs from an online shoe store:

In this case, take care to link only to the static page and never to the versions with parameters. This way you avoid sending inconsistent signals to search engines about which version of the page to index.

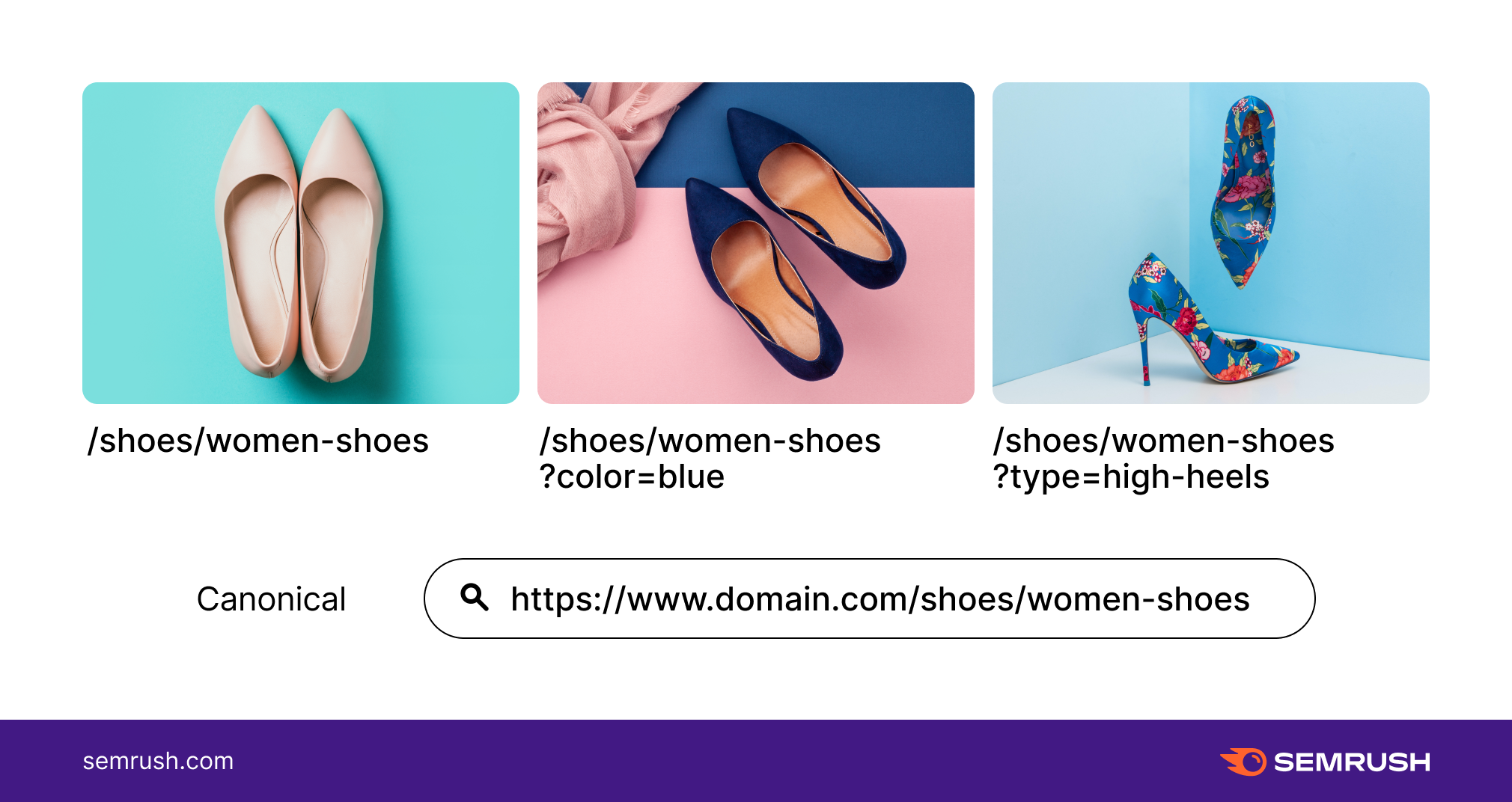

Canonicalize One Version of the URL

Once you have decided which static page should be indexed, remember to canonicalize it. Set up canonical tags on the parameterized URLs, referencing the preferred URL.

If you create parameters to help users navigate your online shop landing page for shoes, all URL variations should include the canonical tag identifying the main landing page as the canonical page. So for example:

/shoes/women-shoes/
/shoes/women-shoes?color=blue
/shoes/women-shoes?type=high-heels

In this case, the three URLs above are “related” to the non-parameterized women’s shoes landing page. This will send a signal to crawlers that only the main landing page is to be indexed and not the parameterized URLs.
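As a rough illustration, the sketch below derives the canonical tag for any variation of the landing page by dropping the query string. The helper `canonical_tag` is hypothetical, and it assumes the preferred URL always ends with a trailing slash, as in the example above.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_tag(url):
    """Build a canonical <link> tag pointing at the parameter-free URL."""
    parts = urlsplit(url)
    # Assumption: the preferred version of each page ends with a trailing slash.
    path = parts.path if parts.path.endswith("/") else parts.path + "/"
    canonical = urlunsplit((parts.scheme, parts.netloc, path, "", ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}" />'

for url in [
    "https://www.domain.com/shoes/women-shoes/",
    "https://www.domain.com/shoes/women-shoes?color=blue",
    "https://www.domain.com/shoes/women-shoes?type=high-heels",
]:
    print(canonical_tag(url))
```

All three variations yield the same tag, which would go in the `<head>` of each page.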

Block Crawlers via Disallow

URL parameters intended to sort and filter can potentially create endless URLs with non-unique content. You can choose to block crawlers from accessing these sections of your website by using a Disallow rule in robots.txt.

Blocking crawlers, like Googlebot, from crawling parameterized duplicate content means controlling what they can access on your website via robots.txt. Bots check the robots.txt file before crawling a website, which makes it a good place to start when optimizing your parameterized URLs.

The following robots.txt file will disallow any URLs featuring a question mark:

User-agent: *
Disallow: /*?*

This rule will block every URL that contains a query string from being crawled by search engines. Before choosing this option, make sure no other portion of your URL structure relies on parameters, or those pages will be blocked as well.
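Before deploying a wildcard rule, it can help to check which paths it would actually cover. The sketch below is a simplified matcher, assuming the wildcard semantics Google documents for robots.txt (`*` matches any sequence of characters, a trailing `$` anchors the end of the URL, and rules otherwise match as prefixes). It is an illustration only and does not replace testing against a real robots.txt parser.

```python
import re

def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex (simplified)."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal characters; each "*" becomes "match anything".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))

def is_blocked(path_and_query, pattern):
    """True if the Disallow pattern covers the given path plus query string."""
    return robots_pattern_to_regex(pattern).match(path_and_query) is not None

print(is_blocked("/shoes/women-shoes?color=blue", "/*?*"))      # True
print(is_blocked("/shoes/women-shoes/", "/*?*"))                # False
print(is_blocked("/shoes/women-shoes?color=blue", "/*?tag=*"))  # False
print(is_blocked("/blog?tag=seo", "/*?tag=*"))                  # True
```

Note the difference between the two patterns: `/*?*` covers any URL with a question mark, while `/*?tag=*` only covers URLs whose query string starts with the `tag` parameter.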

You might need to carry out a crawl yourself to locate all URLs containing a question mark (?).
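Once you have a list of crawled URLs, a few lines of Python can pick out the parameterized ones and show which parameter keys are in use. This is a minimal sketch; the `crawled` list stands in for the export of whatever crawler you use.

```python
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

def parameter_report(urls):
    """Count how often each parameter key appears across the given URLs."""
    counts = Counter()
    for url in urls:
        for key, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True):
            counts[key] += 1
    return counts

# Placeholder data standing in for a real crawl export.
crawled = [
    "https://www.domain.com/shoes/women-shoes/",
    "https://www.domain.com/shoes/women-shoes?color=blue",
    "https://www.domain.com/shoes/women-shoes?type=high-heels&color=red",
    "https://www.domain.com/?utm_source=newsletter&utm_medium=email",
]

parameterized = [u for u in crawled if "?" in u]
print(len(parameterized), "of", len(crawled), "URLs contain a question mark")
print(parameter_report(parameterized).most_common())
```

A report like this makes it easier to see whether a blanket Disallow rule would also block parameters you still want crawled.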

Move URL Parameters to Static URLs

This falls into the wider discussion about dynamic vs static URLs. Rewriting dynamic pages as static ones improves the URL structure of the website.

However, especially if the parameterized URLs are currently indexed, you should take the time not only to rewrite the URLs but also to redirect those pages to their corresponding new static locations.
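The rewrite and redirect step can be sketched as a mapping from each parameterized URL to a static path, paired with a 301 (permanent) redirect. The path scheme below, in which selected parameter values become path segments, is purely an assumption for illustration; `PATH_PARAMS`, `static_path`, and `redirect_rule` are hypothetical names.

```python
from urllib.parse import urlsplit, parse_qsl

# Assumption: only these parameters become path segments, in this fixed order.
PATH_PARAMS = ["type", "color"]

def static_path(url):
    """Map a parameterized URL to its (hypothetical) static equivalent."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    segments = [params[key] for key in PATH_PARAMS if key in params]
    base = parts.path.rstrip("/")
    return "/".join([base, *segments]) + "/"

def redirect_rule(url):
    """Return (old location, new location, HTTP status) for a permanent redirect."""
    old = urlsplit(url)
    old_location = old.path + ("?" + old.query if old.query else "")
    return (old_location, static_path(url), 301)

print(redirect_rule("/shoes/women-shoes?color=blue"))
print(redirect_rule("/shoes/women-shoes?type=high-heels&color=blue"))
```

Using a fixed parameter order means that `?color=blue&type=high-heels` and `?type=high-heels&color=blue` map to the same static URL instead of creating two new ones.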

Google Developers also suggest that you:

1. Remove unnecessary parameters, but maintain a dynamic-looking URL

2. Create static content that is equivalent to the original dynamic content

3. Limit the dynamic/static rewrites to those that will help you remove unnecessary parameters

Using Semrush’s URL Parameter Tool

As should be clear by now, handling URL parameters is a complex task and you might need some help with it. When setting up a site audit with Semrush, you can save yourself a headache by identifying early on which URL parameters the crawl should ignore.

In the Site Audit tool settings, you’ll find a dedicated step (Remove URL parameters) where you can list the URL parameters to ignore during a crawl (UTMs, page, language, etc.). This is useful because, as we mentioned before, not all parameterized URLs need to be crawled and indexed. Content-modifying parameters do not usually cause duplicate content and other SEO issues, so having them indexed will add value to your website.

If you already have a project set up in Semrush, you can still change your URL parameter settings by clicking on the gear icon.

Incorporating URL Parameters into your SEO Strategy

Parameterized URLs make it easier to modify or track content, so it’s worth incorporating them when you need to. You’ll need to let web crawlers know when to and when not to index specific URLs with parameters, and to highlight the version of the page that is the most valuable.

Take your time and decide which parameterized URLs shouldn’t be indexed. With time, web crawlers will better understand how to navigate and value your site’s pages.
