Poking the Bear — A Classic SEO Tale

If you’ve been in the SEO game long enough (and especially in an agency/client scenario), you’re familiar with the game of “poke the bear” that we all regularly play with search engines: make a change to the site, wait for the search engines to pick up the change, then monitor for positive, neutral or negative effects. If you’re lucky, you have years of experience or good tutelage, and you know that the bear really likes for you to scratch behind its left ear. Or you make site changes with wild abandon only to find that poking the bear in the eye is a guaranteed trip to the ER, and you’ll be lucky if your rankings ever recover.

But the reality of many SEOs’ experiences is somewhere in between. Once we’ve done baseline best practice optimizations, we then move into the realm of hypothesis: trying to divine what will work for our sites and niches based on our prior experience, SERP analysis, competitive analysis, and the fragments of a million SEO blog posts that are floating around in the back of our brains. Based on these hypotheses, we make incremental changes to our sites and “poke the bear.” Sometimes, the “GoogleBear” is affable and rewarding. Other times it is quite grumpy. Sometimes it’s slow to respond. Other times it reacts with lightning speed. And many times, it stares back emotionless and blinking, as if to question why we thought any of this would make a difference in the first place.

The Plight of SEO Testing

If you’re like me, you’ve wished more than once that there was a better way to do SEO testing on some sort of scalable level. Or a less painful way to poke the GoogleBear.

On smaller, custom sites, making modifications for SEO and testing the outcomes can be pretty straightforward. The smaller the scope of the site, usually the less templated it is, making it more malleable to tweaks in content, tagging, structure, navigation, and other elements. You make those changes and then monitor SERP positions for positive impact. Any time your SEO experimentation leads you astray, it is relatively easy to course-correct by reverting changes on a few pages.

The larger the site becomes, the more complex the backend is, and pages become more and more templated in order to handle the sheer volume of content. At ROI Revolution, we primarily work with ecommerce websites with anywhere from thousands to millions of products. If I want to test a new modification on one product page to see if it has a positive SEO impact, then I’m likely going to have to test that modification on all product pages due to its templated nature. Changes applied to these sites can be an ‘all or nothing’ affair with sweeping consequences (for better or worse) when, for example, a quarter of a million pages suddenly have a new format based on my hypothesis. If my hypothesis is correct, then it’s all high fives and rainbows. But if my hypothesis is wrong, then it can take quite a while for all product pages to get reindexed after I revert those changes that got me into trouble in the first place. Google is quick to take away gains but is slow to revert losses.

This is just one example of the headaches that can come with testing SEO improvements at scale on large sites. The problem isn’t that we can’t scale it up — it’s that we have to scale it all the way up! We’d be better off testing a smaller portion of our pages to determine if they generate positive results before applying those changes to all pages.

A Unique Vantage Point — An SEO’s Peek Into Website A/B Testing

Through my role leading ROI Revolution’s Website Optimization Services group, I’ve been lucky to lead teams that specialize in two distinct areas of online marketing: SEO (search engine optimization, which you’re likely familiar with) and CRO (conversion rate optimization). Both of these disciplines seek to optimize the user experience on a website to achieve a desirable outcome. The CRO team’s goal is to increase the likelihood of a specific user action (for ecommerce sites, it’s usually the completion of a purchase or some action earlier in the funnel). The SEO team’s goal is also to increase the likelihood of a specific user action. But in their case, the “user” is a search engine, and the goal is for the search engine to rank the site well.

With SEO, there are obviously human user implications of the optimizations you make to your site, but for the purposes of this discussion, we’re going to assume anything you’re doing to rank well is also good for the human users on your site.

Website A/B Testing

The majority of the work we do for our clients on the CRO side is to create and execute user experimentation strategies. The most common way of doing this is by utilizing A/B testing platforms, such as AB Tasty, VWO, or Google Optimize.

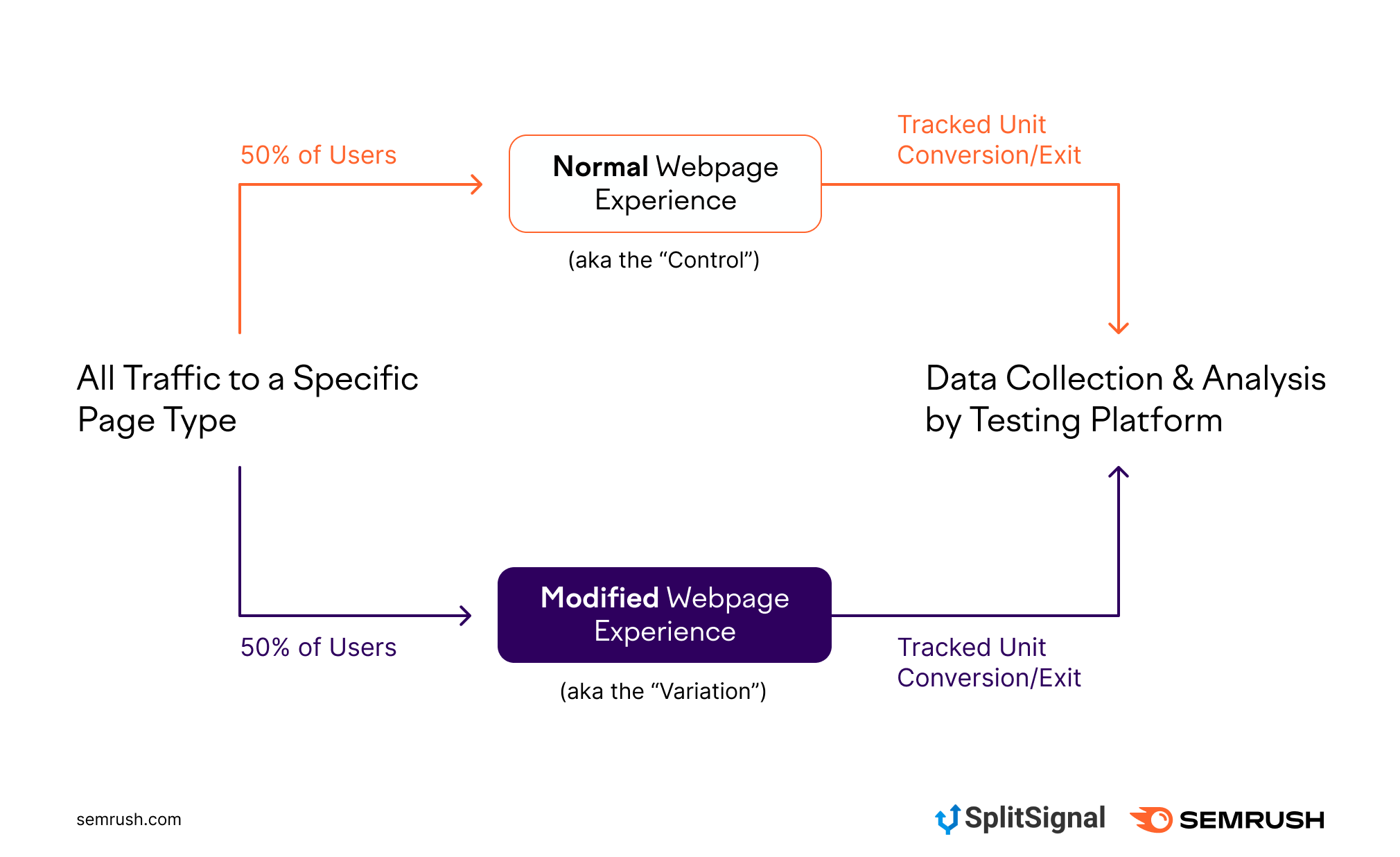

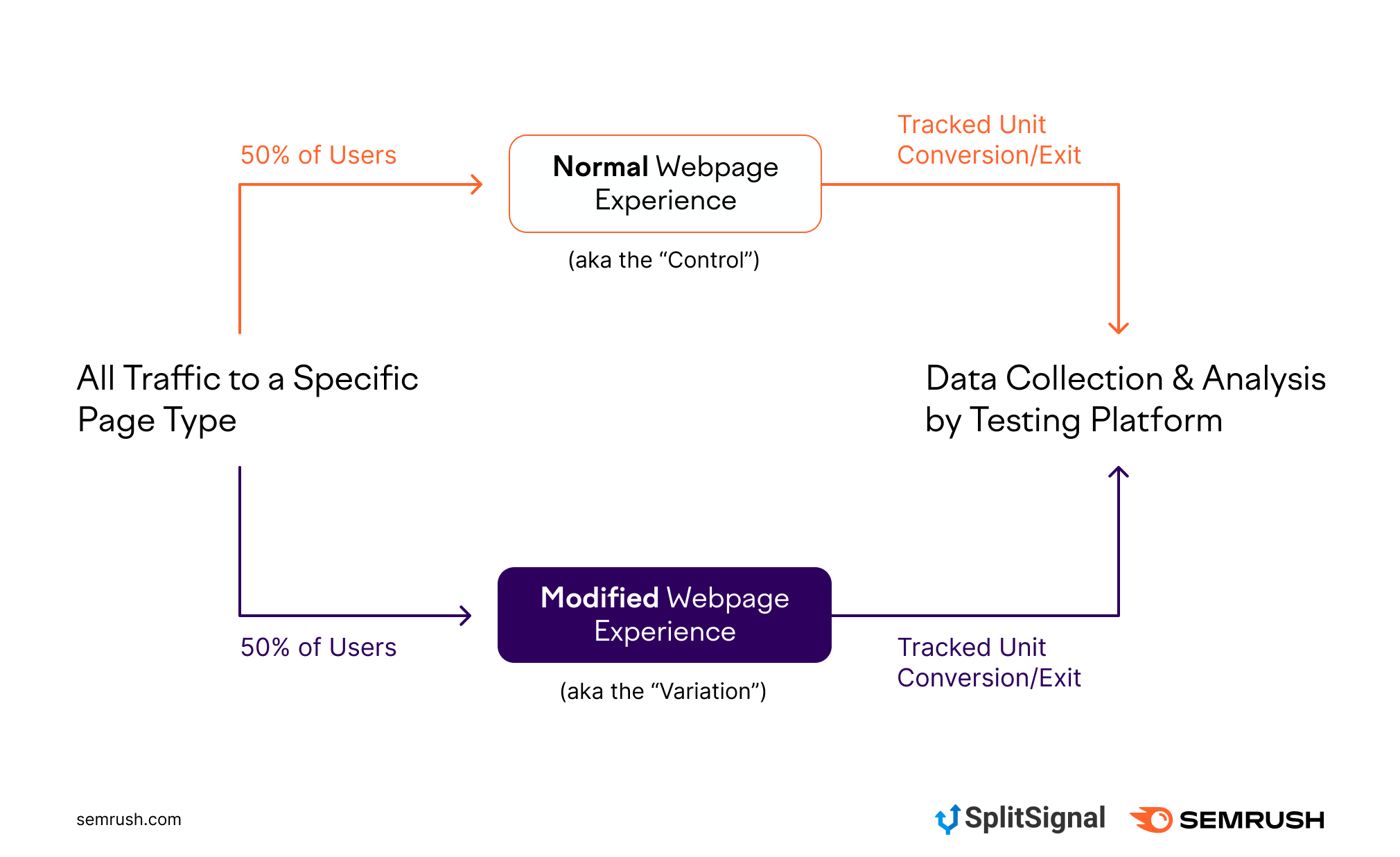

If you’re unfamiliar with A/B testing, it works by taking all of the traffic to a specific page (or page type) and serving the normal experience to half the users, while the other half encounters a different, modified experience:

Simple illustration of an SEO A/B test traffic flow. In actuality, you can split traffic in near-infinite percentages across multiple different modified experiences, but the classic 50%/50% A/B test gives you the simplest example of how this testing works.

The basis of this type of experimentation is to hypothesize a site experience in which the user is more likely to complete an action (like completing checkout on an ecommerce site). Then you modify your site experience based on this hypothesis and serve this modified experience against a portion of your users. The types of modifications you make to your site can range from simple (a banner at the top of the page advertising free shipping) to very complex (totally rebuilding the checkout flow) and everything in between.
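To make the traffic split concrete, here is a minimal Python sketch of one common way to assign users to experiences: hash a stable user ID so the same visitor always lands in the same group. The function name `assign_bucket`, its parameters, and the hashing approach are assumptions for illustration, not how any particular testing platform works.

```python
import hashlib


def assign_bucket(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'variant'.

    Hypothetical helper: hashing the experiment name together with the
    user ID keeps each user's assignment stable across page loads.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    position = int(digest[:8], 16) / 0x100000000
    return "variant" if position < variant_share else "control"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_bucket(uid, "free-shipping-banner"))
```

Changing `variant_share` gives splits other than 50%/50%, for example 0.1 to expose only a tenth of users to the modified experience.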

The testing platforms utilize a variety of statistical models in order to determine if the outcome of your test can be trusted. This is generally referred to as “statistical significance.” If the outcome of the test is statistically significant, you have more confidence that the outcome of the test wasn’t by random chance.
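As a rough illustration of what a significance check can look like, the sketch below runs a classic two-proportion z-test on conversion counts. This is only one frequentist approach under simplifying assumptions (fixed sample size, independent users); testing platforms use a variety of models, and the function name and sample numbers here are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) comparing conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: control converts 200/10,000, variation 260/10,000.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference between the two experiences is unlikely to be random chance alone.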

If your hypothesis is correct (your test won), you can then permanently implement your tested changes on your site, being confident that it will have a positive impact. If your hypothesis was incorrect (your test lost), then you’ve saved yourself from a potentially costly mistake that seemed like a good idea at the time.

This is a great way to prove the value of changes before making them, which is of great importance when the slightest shift in conversion rate can impact millions of dollars in revenue each month.

A Fringe Benefit of Most Website A/B Testing Platforms

In addition to being an awesome way to gain insights into your users’ motivational triggers and friction points, an added bonus of many of these A/B testing platforms is that they operate independently from your company’s (or client’s) development queue, allowing you to move quicker with less red tape.

Traditionally, you’d need approvals from your dev team and other stakeholders to get site changes into your dev sprints, then have to wait for your turn to come up in the queue before seeing your changes go live on the site. By utilizing client-side scripting methods, the testing platforms allow you to create code (either through WYSIWYG editors or code editors) that runs when the page loads in the user’s browser. The testing platform’s code runs as the page is being built in the browser, and it rearranges the page in milliseconds. The end user is none the wiser to this change, and your dev team doesn’t have to lift a finger.

If the modified test experience is a winner, only then do you get your developers involved — and you can easily show them the revenue impact of making your suggested website changes.

Applying A/B Testing Principles to SEO

The first time I contrasted the SEO work we were doing with the CRO work we were doing, I thought, “Bingo. Let’s do A/B SEO split testing to determine if a tactic will work before rolling it out full-scale.”

While that sounds great in theory, there are a couple of stumbling blocks:

A User Base of 1

What makes traditional website A/B testing possible is the size of the population (i.e. the number of people in the test), which determines how long a test will need to run. Once enough people are in the test, we can reach a statistically significant conclusion about the impact of our test.

The problem is that if we’re running a test to determine the impact of a site modification on Google’s ranking algorithm, then there’s only one “user” in our test: Googlebot. This in itself blows up the whole notion of doing traditional A/B testing for SEO.

So, how do we get around this?

Bot-Based A/B Testing

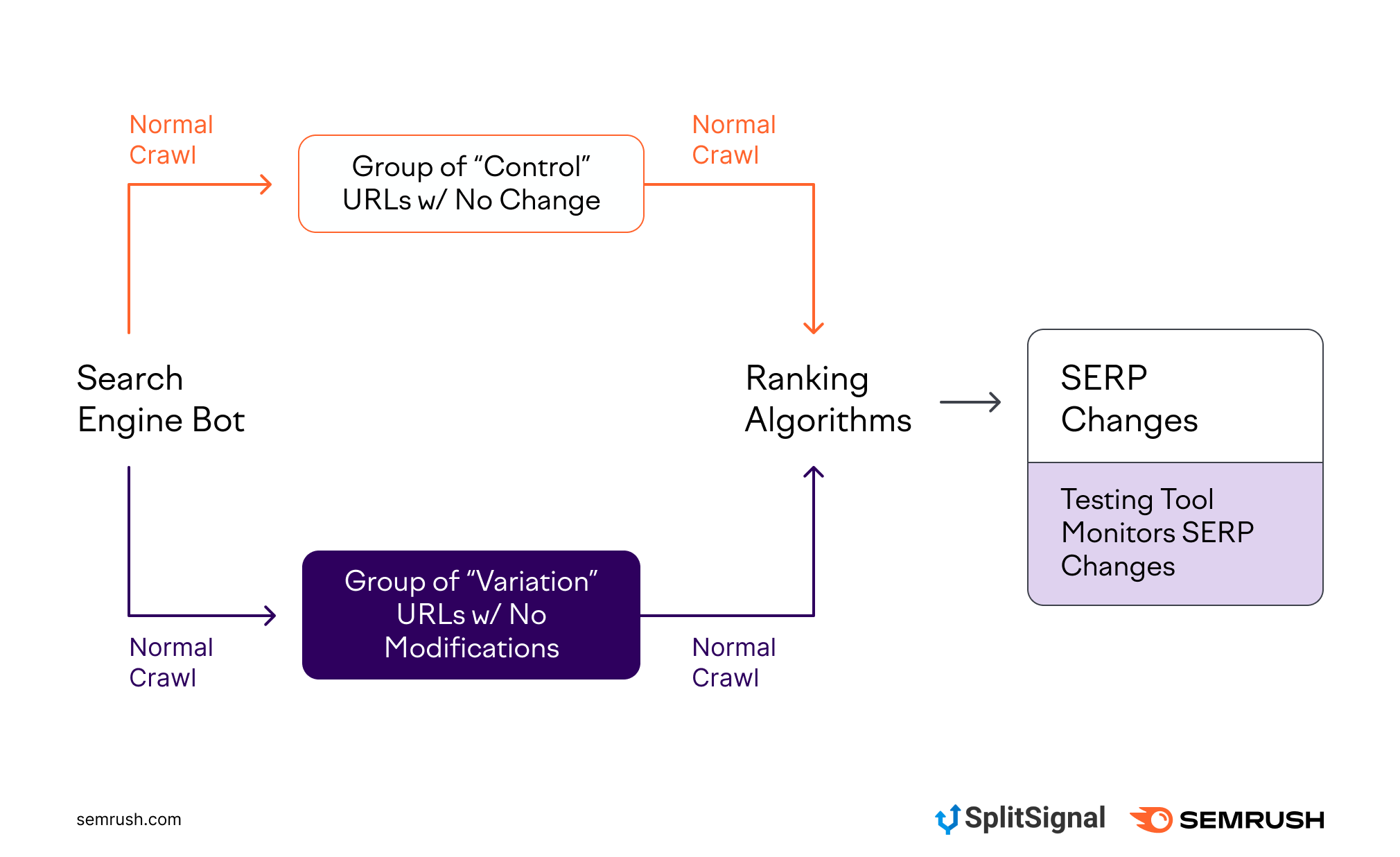

If you recall from the earlier overview on A/B testing, we require a “control” experience and at least one “variation” experience (aka the new modification we’re testing out). Then we need a page or pages to test on, and we need a large population of users to show the control and variation to. We then compare how the two experiences performed against each other:

Simple illustration of an SEO A/B test traffic flow.

With SEO split testing, you have to flip the model on its head. Since you can’t test a population of users, you’ll have to test a population of pages instead. Rather than splitting your users between two experiences, you’ll split the pages equally, apply a different experience (aka modification) to each group of pages, and then feed those pages into the search engine via its normal crawl routine:
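The page-splitting step can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the function name `split_pages`, the example URLs, and the hash-then-alternate approach are hypothetical, and real tools may also balance the groups by traffic or page type.

```python
import hashlib


def split_pages(urls, experiment: str):
    """Split a set of URLs into two equal-sized, reproducible groups.

    URLs are ordered by a hash of (experiment, url), which acts as a
    repeatable shuffle, then dealt alternately into control and variant.
    """
    def sort_key(url: str) -> str:
        return hashlib.sha256(f"{experiment}:{url}".encode("utf-8")).hexdigest()

    ordered = sorted(set(urls), key=sort_key)
    control = ordered[0::2]
    variant = ordered[1::2]
    return control, variant


if __name__ == "__main__":
    # Hypothetical product URLs for illustration only.
    pages = [f"https://example.com/product/{i}" for i in range(10)]
    control, variant = split_pages(pages, "title-tag-test")
    print(len(control), "control pages;", len(variant), "variant pages")
```

Because the split alternates over a sorted list, adding pages mid-test would reshuffle the groups, so the page list should be fixed before the test starts.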

Simple illustration of an SEO split testing process. It’s a lot like A/B testing for your human users, except it’s not.

You’ll then monitor the rankings and traffic from the two groups of pages over thirty days, as compared to the current baseline. If the pages in the modified group see positive ranking gains over the duration of the test (and the control group is flat or down), then you can be confident in implementing those modifications to your template, applying the change to all similar pages on your site.
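One simple way to express that comparison is to measure each group's change against its own baseline and then subtract the control group's change from the modified group's. The sketch below does this for organic clicks; the function names and figures are hypothetical, and it does not check whether the difference is statistically significant.

```python
def relative_change(baseline: float, test: float) -> float:
    """Fractional change from the baseline period to the test period."""
    return (test - baseline) / baseline


def split_test_lift(control_baseline: float, control_test: float,
                    variant_baseline: float, variant_test: float) -> float:
    """Variant change minus control change, as a fraction of baseline.

    Subtracting the control group's change helps remove effects that hit
    both groups alike, such as seasonality or an algorithm update.
    """
    control_change = relative_change(control_baseline, control_test)
    variant_change = relative_change(variant_baseline, variant_test)
    return variant_change - control_change


if __name__ == "__main__":
    # Hypothetical 30-day organic click totals for each page group.
    lift = split_test_lift(
        control_baseline=10_000, control_test=10_200,
        variant_baseline=10_000, variant_test=11_000,
    )
    print(f"Estimated lift: {lift:.1%}")
```

In this made-up example the control pages grew 2% and the modified pages grew 10%, so the modification is credited with roughly 8 percentage points of lift.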

Server-Side Implementation

In my overview of how A/B testing works, I mentioned how a fringe benefit of utilizing client-side testing is that it can keep you from getting your developers involved every time you want to test a change.

Because of search engines’ historically poor abilities to render JavaScript, any sort of A/B testing utilizing the bot-based A/B testing method described above has been an onerous task. Rather than utilizing the simple ‘client-side’ scripting method like most user-focused testing platforms, any sort of SEO A/B testing tool previously required integration into your website’s server and/or CDN in order to make changes to the pages before they were sent ‘over the wire.’ Often these solutions were home-grown and clunky. Some service providers did enter the market to offer SEO testing solutions, but server-side rendering for tests was still required, introducing the same headaches as home-grown solutions.

The Beauty of Evergreen Bots

In the olden days of SEO, search engines didn’t “render” webpages. They simply scanned through the source code and made ranking decisions based on that information. Eventually, search engines realized that they really should be making decisions based on what the end users actually saw, not just what was squeezed into (or omitted from) the server’s source code response. To address this, they began to render webpages the same way a browser does and utilized this visually-rendered version of a page in making ranking decisions.

As more and more sites began to utilize JavaScript in order to build out what end users saw in their browsers, search engines had trouble keeping up. For the longest time, Google relied on outdated versions of Chrome to render the page. As SEOs, we were constantly playing a game of whack-a-mole with regards to clients using cutting-edge JS on their sites, but Google being unable to “see” the content because it couldn’t render it properly.

In 2019, Google announced that GoogleBot’s rendering engine was going to be “evergreen.” What this means is that every time Google’s Chrome browser is updated, GoogleBot will use the most recent version of the browser as its rendering engine. Not too long after, Bing made an announcement that BingBot would also be evergreen and would always use the most recent rendering engine from the Edge browser.

THIS WAS A HUGE DEAL for A/B testing for SEO.

If BingBot and GoogleBot’s rendering engines are evergreen, then we can feel confident that nearly any JavaScript that we’re running on a site via a testing tool will be acknowledged by search engines.

This means that the same client-side coding mechanisms that make user-focused A/B testing so easy to implement can now be made available to SEO A/B testers!

Expect an SEO A/B Testing Boom

I’ll be honest. Because I spent nearly 15 years fretting over how search engines couldn’t handle JavaScript properly, when the evergreen bots were announced, I didn’t want to believe it. Preconceived notions die hard in the SEO world. Connecting the dots between evergreen bots and the ability to do more SEO testing wasn’t an obvious conclusion for the same reasons.

However, given what I’ve seen over the last year with GoogleBot being able to render most JavaScript properly on page load, I think we’re at a real turning point in SEO testing. I’m personally very excited that companies like Semrush are introducing client-side A/B testing platforms like SplitSignal. My experience in the CRO industry has built my faith in client-side testing, which has been happening successfully for many years now. With search engine bots keeping up with the times, it makes total sense to combine the bot-based A/B testing methodology with a client-side testing platform.

Bot-based A/B testing may be overkill for smaller sites. But for large CMS-driven sites like ecommerce platforms and large content hubs with tens of thousands of pages or more, it’s a great way to be even more strategic with our optimization strategies.

Or, in other words, make the game of “poke the bear” much more rewarding!
