A business’s website is, in many ways, like a digital shop or office. It is where customers or clients go to interact with the business. It is where they make first contact and where they ultimately buy the company’s products or services. It is also what they often use to form their view of a business’s brand. Just as an office or shop should be attractive and welcoming to visitors, so should a commercial website.

A website needs to be easy to navigate and allow visitors to find what they want quickly. In fact, it is even more important for a website to have a straightforward structure. That is because of the negative impact poor site architecture can have on SEO. If Google bots and search engine crawlers can’t understand or navigate a site, it can cause big issues.

Unfortunately, it is all too easy for site architecture to become confused and muddled — even if a site owner or webmaster started with the best of intentions. The day-to-day operations of a business often get in the way of website structure best practice.

Our Experience With a Client Website

An ad hoc content strategy can see pages and elements added to a site with no thought for the overall architecture. Staff turnover can leave individuals with no understanding of a site’s overall structure suddenly in charge of maintaining it. The end result is often an ungainly and inefficient site architecture.

That is exactly the situation we faced when we worked with a client of ours. Over time, their site had grown to have 500,000+ pages. The sheer number of pages and the way they had been added to the site had created some serious SEO issues. We were faced with the challenge of untangling the site architecture of what was a gargantuan website.

Even the biggest and most confusing site can be brought into line. What you need is a solid strategy and the will to follow through. We are going to share our experience of working with a client whose name has been anonymized. By doing so, we should hopefully give you some pointers on how to meet your own structural SEO challenges.

Analysis

As an external expert coming in to advise on or solve technical SEO issues with a site, the first step is understanding the scope of the project. Only once you appreciate the full picture can you begin to develop a strategy to move forward. Even if you are working on improving your own site, analyzing the site as an overall entity is still a good place to start.

With smaller sites, it is possible to review site architecture and spot issues manually. As a site gets larger, this gets increasingly difficult. Once you get to a site with over 500,000 pages, it is just not viable. You could devote every hour of every day for weeks to the process and still not scratch the surface. That is where technical SEO tools come in.

There is a wide variety of technical SEO and analytics tools around today. Some are free to use, others have to be paid for, and a few have both free and paid versions. The tools can help you do everything from checking page speed to testing your structured data for implementation errors.

Website Crawl Using SEMrush

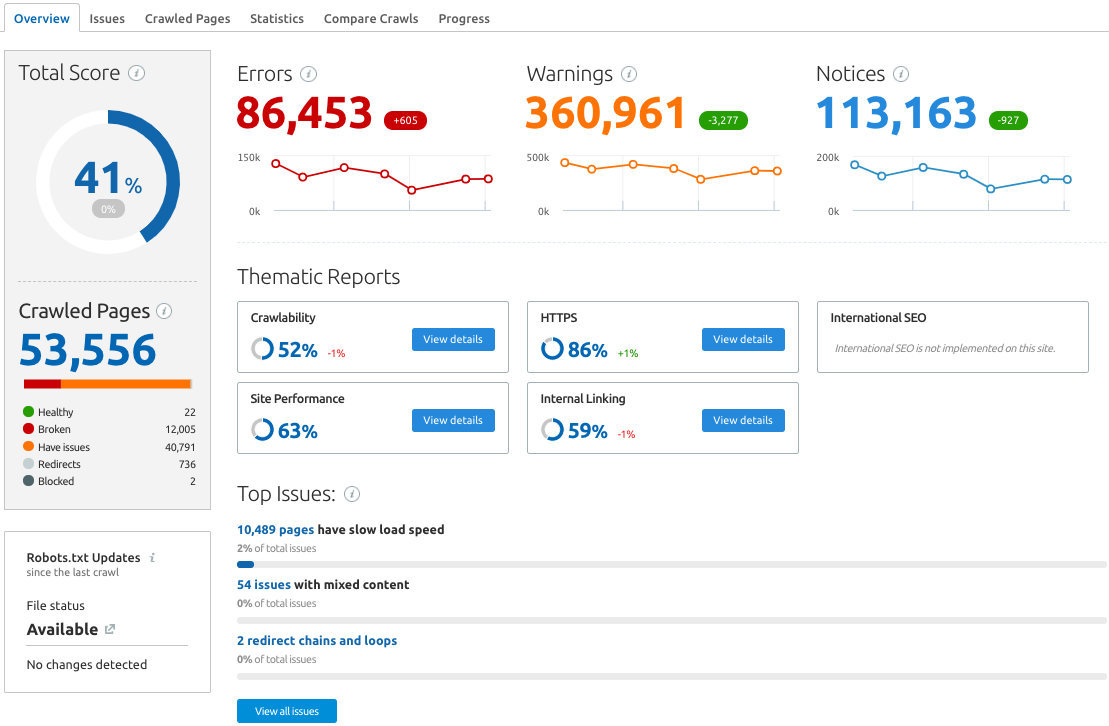

The SEMrush Bot is an efficient way to perform the kind of deep crawl required to get to grips with a huge technical SEO project. That deep crawl will help identify both basic and more advanced technical SEO issues plaguing any site.

Some of the more basic issues it can diagnose are as follows:

Errors in URLs

Missing page titles

Missing metadata

Errored response codes

Errors in canonicals

On top of that, a deep crawl with SEMrush can help you with some more advanced tasks:

Identifying issues with pagination

Evaluating internal linking

Visualizing and diagnosing other problems with site architecture

When we performed our deep crawl of the site, it highlighted several serious issues. Identifying these issues helped us develop our overall strategy for untangling the site’s architecture. Below is an overview of some of the issues we discovered.

Issue One: Distance From Homepage

One of the most apparent issues highlighted by the deep crawl was just how far some pages were from the homepage. Some content on the site was found to be up to 15 clicks away. As a rule, you should try to ensure that content is never more than three clicks from your homepage.

The reason for that ‘three click rule’ is twofold; it makes sense for both your website visitors and for SEO purposes. Visitors are unlikely to be willing to click through 15 pages to find the content they need. If they can’t quickly find what they are looking for, they will bounce from your site and search elsewhere.

Limiting click distance also makes sense from an SEO standpoint. The distance a page is from a site’s homepage is taken into account by search engine algorithms. The further away it is, the less important a page is seen to be. What’s more, pages far from the home page are unlikely to get many benefits from the homepage’s higher link authority.
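Click distance can be measured from crawl data with a breadth-first search over the internal link graph. The following is a minimal sketch, assuming the crawl has been exported as a mapping from each URL to the URLs it links to. The `links` data and the helper names `click_depths` and `pages_beyond` are hypothetical and are not part of any crawler's output format.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_beyond(depths, max_clicks=3):
    """List pages that sit more than `max_clicks` from the homepage."""
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical internal link graph: page -> pages it links to
links = {
    "/": ["/category"],
    "/category": ["/category/sub"],
    "/category/sub": ["/category/sub/product"],
    "/category/sub/product": ["/deep-page"],
}

depths = click_depths(links, "/")
too_deep = pages_beyond(depths)
```

Pages that never appear in `depths` are not reachable from the homepage at all, which is worth reporting separately from pages that are merely too deep.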

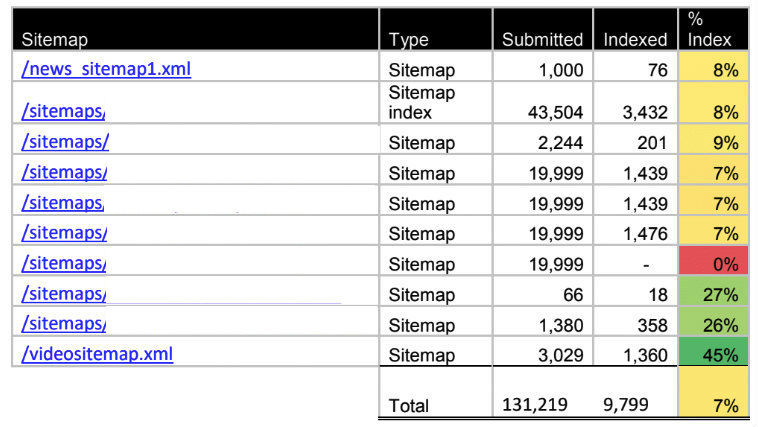

Issue Two: Only 10% of Pages Indexed

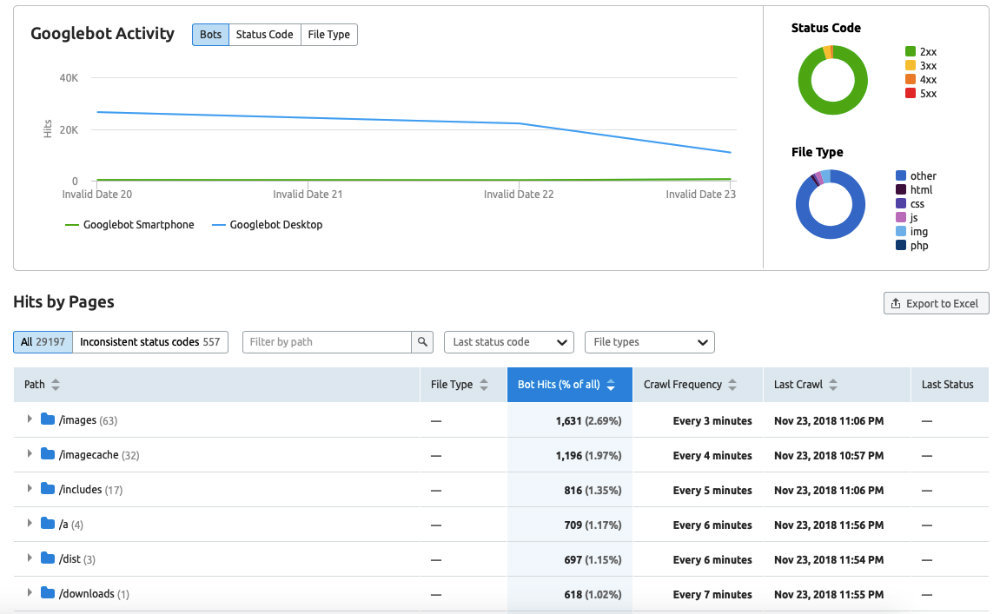

From Google Search Console, we found that only 10% of the pages were being indexed. As part of our technical SEO analysis, we also reviewed the log files of the site; that revealed many other issues.

A page which is unindexed is basically ‘unread’ by Google and other search engines. There is absolutely nothing you can do for a page from an SEO standpoint if the search engines don’t find and ‘read’ it. It will not rank for any search query and is useless for SEO.

Our analysis revealed that a whopping 90% of the site’s pages fell into that category. The site had more than 500,000 pages, which meant that around 450,000 of them had little to no SEO value. As we said, it was a major issue.

Issue Three: Unclear URL Structure

Through the site audit, it also became clear that content lacked a clear URL structure. Pages that should have been on the same level of the site did not have URLs to reflect that. Confusing signals were being sent to Google about how the content was categorized; this is an issue you need to consider as you build out your blog or website.

The best way to explain this issue is with an example. Say you have a site which includes a variety of products. Your URLs should flow logically from domain to category to sub-category to the product. An example would be ‘website/category/sub-category/product’. All product pages should then have that consistent URL structure.

Problems arise if some of the products have a different URL structure. For instance, a product might have a URL like ‘website/product’. That product is then on a different level of the site from the rest. That creates confusion for search engines and users alike.
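One way to spot this kind of inconsistency in a crawl export is to compare the path depth of URLs that should sit on the same level. The sketch below is illustrative only: the `example.com` URLs are made up, and treating the most common depth as the expected one is our assumption, not a rule from any tool.

```python
from collections import Counter
from urllib.parse import urlparse


def path_depth(url):
    """Count the non-empty path segments in a URL."""
    return len([segment for segment in urlparse(url).path.split("/") if segment])


def off_pattern_urls(urls):
    """Return URLs whose path depth differs from the most common depth."""
    depths = {url: path_depth(url) for url in urls}
    expected_depth, _ = Counter(depths.values()).most_common(1)[0]
    return [url for url, depth in depths.items() if depth != expected_depth]


# Hypothetical product URLs; the last one skips category and sub-category
product_urls = [
    "https://example.com/shoes/running/trail-runner",
    "https://example.com/shoes/casual/canvas-slip-on",
    "https://example.com/leather-boot",
]

outliers = off_pattern_urls(product_urls)
```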

Issue Four: Too Many Links On the Homepage & Menu

In addition to issues with the URL structure, another factor that needed to be addressed was the number of links found on a page; this was, in part, a sitewide issue. For example, the menu had 400+ links. The footer menu meanwhile contained 42 links. This number is far more links than a person is likely to use. It was clear that a large proportion of these links were not being used enough to make it worth incorporating them in either the menu or footer.

Through the site crawl, we also identified several pages, including the homepage, that had 100+ links. The more links a page has, including the menu, the less internal PageRank each of those links passes. It is also indicative of a confusing site structure.
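A quick way to check a single page against a link threshold is to count the anchors in its HTML. This is a rough sketch using Python's standard-library parser; the sample markup is invented, and the limit of 100 simply mirrors the figure mentioned above rather than any official cutoff.

```python
from html.parser import HTMLParser


class LinkCounter(HTMLParser):
    """Collect the href of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def count_links(html):
    """Return the number of links (anchors with an href) in an HTML string."""
    counter = LinkCounter()
    counter.feed(html)
    return len(counter.hrefs)


# Hypothetical menu markup; the third anchor has no href and is not counted
sample = '<nav><a href="/a">A</a><a href="/b">B</a><a>no link</a></nav>'
link_count = count_links(sample)
over_limit = link_count > 100
```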

Overall, it was clear that there were serious issues with the site’s internal linking strategy. This strategy affected how Google indexed the site and resulted in a bad user experience for visitors.

Additional Issues

Through the site audit, a number of other issues were identified. Some of these issues would not impact the website architecture, but if addressed, would improve search rankings. Below is an overview of some of the key factors that we needed to act on:

Robots.txt File: There were thousands of tags used on the site. The majority of tags had only a few pieces of content associated with them. In addition to this, there was the opportunity to optimize the crawl budget by denying bots access to low-value parts of the site; this had the potential to improve page speed.

Improve Metadata: The metadata for ranking pages could be reviewed and improved to increase organic Click Through Rates.

Page Load Time: There was an opportunity to improve the load time of the pages. Again, this is a ranking factor for Google.

Relic Domain Pages: As a result of the server log analysis, we identified a number of relic domain pages that were getting a lot of Google Bot activity; this is far from ideal. The activity may have meant that those expired pages were still appearing in search results.
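For the robots.txt item in the list above, it helps to test a rule set before deploying it. The sketch below uses Python's built-in `urllib.robotparser`; the `/tag/` path and the `example.com` URLs are assumptions for illustration, not the client's actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: the /tag/ path is an assumed location for tag archives
rules = """\
User-agent: *
Disallow: /tag/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Check how a crawler identifying as Googlebot would be treated
tag_allowed = parser.can_fetch("Googlebot", "https://example.com/tag/blue-widgets")
product_allowed = parser.can_fetch("Googlebot", "https://example.com/widgets/blue")
```

Keep in mind that a `Disallow` rule stops compliant bots from crawling a URL; it does not by itself remove a page that is already indexed.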

Solution

Our analysis and research defined the scope of the project ahead of us. It spelled out the issues that needed solving. That allowed us to develop a strategy to work step-by-step through those issues. Below is an overview of five of the key areas that we focused on to improve the website structure. The list excludes other tasks that we completed, like updating meta descriptions and content on core pages, which form a core part of a website audit.

Step One: Redirects & Other Tweaks

The first task to get our teeth into was redirecting relic pages, starting with those we identified as having the most Google Bot activity. We made sure that pages were redirected to the most relevant content. Sometimes that meant a page that had replaced the original. On other occasions, it meant the homepage.
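Ranking pages by crawler activity can be done directly from server logs. Here is a minimal sketch, assuming the logs are in the common "combined" access log format; the sample lines, paths, and the `googlebot_hits` helper are hypothetical. Matching on the user-agent string alone is a simplification, since user agents can be spoofed.

```python
import re
from collections import Counter

# Pulls the request path and user agent out of a combined-format log line
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path where the user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical log lines for illustration only
sample_log = [
    f'203.0.113.5 - - [01/Jan/2020:10:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'203.0.113.5 - - [01/Jan/2020:10:05:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'203.0.113.5 - - [01/Jan/2020:10:06:00 +0000] "GET /old-promo HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'198.51.100.7 - - [01/Jan/2020:10:07:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{BROWSER}"',
]

ranked = googlebot_hits(sample_log).most_common()
```

The paths at the top of `ranked` are the natural candidates to redirect first.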

This strategy gave us a quick win to get started. It ensured that any traffic resulting from the expired domains wasn’t met with a broken page. Another simple tweak we made at the outset was to the layout of the PR page; this removed Google’s confusion as to whether it was actually the site’s archive page. That led to an immediate improvement in the indexing of content.

In addition to this, the site had thousands of tags. We didn't want tag pages with limited content to appear in the search results, as they provided a poor user experience for people accessing the site. For this reason, we deindexed those pages.

Step Two: Reduce the Number of Links in the Menu

Alongside the task of redirecting expired pages, we also worked on improving the menu structure. As mentioned previously, the website had 400+ links on the menu; this was far more than site visitors needed. Moreover, it was four times the recommended number of links on a page.

While we understood that the number of links in the menu was an issue that needed to be dealt with, we still had to choose which links to remove. Our solution was to first analyze what links people were clicking on, so we understood which ones were most useful for readers. We used a combination of Google Analytics and heatmap software to generate the data we then analyzed.

Once we had identified the most useful links, we then started the process of identifying the category and sub-category pages that we knew needed to be on the menu. Our approach in this regard was to create a site structure where all content was within three clicks of the homepage.

Step Three: Clustering & Restructuring

After our initial quick wins, it was time to turn to the larger job of reshaping the site architecture. We needed to solve the issue of click distance and give the site a more logical structure. That way, it could be more easily navigated by both crawlers and actual users.

One step we took was to use the idea of clustering content. That meant bringing together related pages and linking out to them from a ‘pillar topic’ page. That page would be within a couple of clicks of the site’s homepage, which means that a huge array of related pages could be brought within three clicks. Content clusters like this make it much easier for visitors and crawlers to navigate a site’s content.

Clusters were how we started to organize the vast number of pages. When it came to the overall structure, we focused on the idea of creating a content pyramid. Such a pyramid is the accepted gold standard for site structure. The site’s homepage sits atop the pyramid. Category pages are beneath and sub-categories a further level down. Individual pages then make up the wide base of the pyramid.
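A little arithmetic shows why a pyramid can bring even a very large site within three clicks. The sketch below assumes an idealized, perfectly balanced structure in which every page links down to the same number of distinct pages; real sites are messier, so treat the result as a rough lower bound rather than a target.

```python
def pages_within(clicks, links_per_page):
    """Pages reachable within `clicks` levels of a perfectly balanced pyramid."""
    return sum(links_per_page ** level for level in range(1, clicks + 1))


def min_links_per_page(total_pages, clicks=3):
    """Smallest uniform number of downward links that covers `total_pages`."""
    links = 1
    while pages_within(clicks, links) < total_pages:
        links += 1
    return links


# 500,000 pages is the approximate size of the site in this case study
needed = min_links_per_page(500_000)
capacity = pages_within(3, needed)
```

Under these assumptions, roughly 80 downward links per page are enough to reach half a million pages in three clicks, which is under the 100-link mark discussed earlier.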

Once the content clusters and pyramid took shape, the issue of links was also easily solved. Having a defined structure made it much more straightforward to interlink pages. No longer were there any pages with hundreds of excessive links.

Step Four: Improving URL Structure

Our restructuring of the site made it a cinch to improve URL structure. The content pyramid meant that the site's URLs could achieve the logical flow we mentioned earlier. No longer were pages sitting at different site levels on an ad hoc basis.

The URLs of the site’s myriad pages were brought in line with one defined structure; that removed the mixed messages being sent to Google. The search engine could now far more easily understand, and therefore index, the site’s content.

Step Five: Adding Dimensions to Images

The file size of images is a significant page speed issue for a lot of sites. Images are often improperly sized or formatted, if they are optimized at all. By adding dimensions to images, you can significantly improve page load time by ensuring that a correctly sized and formatted image is displayed the first time.

By adding dimensions to the images, we reduced the category pages from often more than 25 MB down to a more acceptable 2-4 MB per page. This significantly improved page speed and user experience while reducing the strain on the server.
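To find images that still need attention, a page's HTML can be scanned for `img` tags without explicit dimensions. This is a rough sketch under one assumption: that "adding dimensions" includes setting `width` and `height` attributes on each image tag. The sample markup and the `images_missing_dimensions` helper are hypothetical.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Record the src of every <img> lacking a width or height attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "width" not in attributes or "height" not in attributes:
                self.missing.append(attributes.get("src", "(no src)"))


def images_missing_dimensions(html):
    """Return the sources of images without explicit width and height."""
    audit = ImageAudit()
    audit.feed(html)
    return audit.missing


# Hypothetical category page markup
sample = (
    '<img src="/img/a.jpg" width="400" height="300">'
    '<img src="/img/b.jpg">'
    '<img src="/img/c.jpg" width="400" />'
)
missing = images_missing_dimensions(sample)
```

Attributes alone do not shrink a file, so a check like this works best alongside a review of the actual image file sizes.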

Results

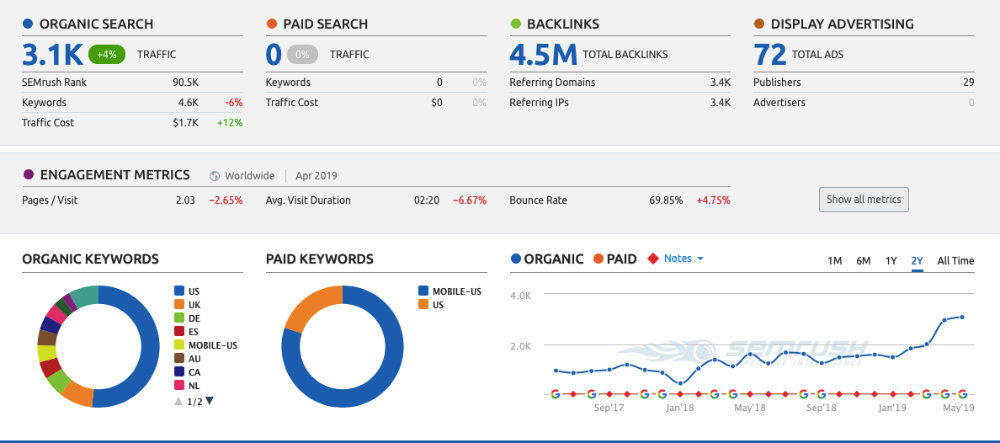

The impact of our work on the site was clear to see. Improvements in the site's structure had a profound and rapid effect on indexing. As a result, it had a similarly pleasing effect on site traffic. Within the first three months, the percentage of pages indexed by Google had risen from 10% to 93%. Moreover, the percentage of URLs submitted that were approved also improved.

Unsurprisingly, the fact that 350,000 pages were suddenly indexed led to an increase in site traffic. The volume of visitors rose 27% in the first three months, and 120% after nine months.

Conclusion

The exact steps we took to improve the site’s architecture may not work for your site. What will work, however, is the general strategy we applied. It is a strategy that can deliver impressive results even for the largest, most confusing sites.

To begin, you must be thorough in researching the project at hand. Comprehensive research and analysis of your site are what should define the scope of your work. You will then have a roadmap for your improvements. If you follow through with the work according to that roadmap, you are sure to see results.
