The most common method of optimizing SERP listings is to follow a list of best practices. These best practices may be defined by the SEO industry at large, defined internally, or some combination of both. While this is a useful approach, it can be limiting.

Without experimentation, it isn't possible for us to discover more innovative ways of capturing attention in the search results.

For that reason, below are five steps you can use to run experiments on your SERP listings and optimize them in ways you wouldn't otherwise be able to. Let's get started.

1. Think Like a Conversion Rate Optimizer (CRO)

CROs think scientifically. They test their hypotheses empirically with A/B tests so that they have a clean control group and can clearly identify which version of a page converted best using statistical analysis.

Unfortunately for us in the SEO industry, running a true split test on title tags, meta descriptions, and rich result markup isn't possible: we can't show one version of a SERP listing to half of searchers and a different version to the other half. But that doesn't mean we should stop thinking scientifically or that we can't use data to optimize conversions. If astronomers and evolutionary biologists can think scientifically with little or no ability to run experiments, so can we.

To think like a CRO without the ability to split test, we need to be able to:

- Define hypotheses for what will improve conversion rates. The most crucial thing about such a hypothesis is that it is testable: it can be proven false with data.
- Gather observations. We test our hypothesis by making changes to our SERP listings. While no single change can tell us whether the impact was positive or negative, repeated results can allow us to identify a trend and see whether our hypothesis stood up to the test.
- Refine our method. If our hypothesis stood up, we can devise new tests to see whether the same principle applies elsewhere. If it didn't, we need to go back to the drawing board and come up with something new to try.

This scientific approach to SERP listing optimization keeps us from fooling ourselves and instituting changes just because they are considered best practice when in fact, for any given brand, they may be more superstition than anything else.

2. Define A Clear Change

As we said above, it all starts with a hypothesis.

For example, we might have seen a study suggesting that list posts tend to get a higher click-through rate than other types of posts. This example works well as a hypothesis because there is a very clear definition of whether it is true or not. If changing title tags so that they indicate a list post doesn't increase click-throughs, our hypothesis is false.

If we want our tests to teach us anything, our hypothesis needs to be very clearly defined, like so:

- Good: All else being equal, a title tag that begins with a number indicating the quantity of subheadings will generate a higher click-through rate than a title tag that does not.
- Bad: List posts do better than other posts.

The first option is “good” because it tells us precisely what kind of change needs to be made to the title tag. In particular, that change can be replicated across many title tags in a systematic way.

We may not learn anything useful if we test the “bad” option, because we may use an inconsistent definition of what a list post is when we update our title tags, clouding the results and offering us no precise information.
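
To keep the definition consistent across many title tags, it can help to encode the “good” hypothesis as an explicit rule. The Python sketch below is one minimal way to do that. It assumes you can obtain each page's title and a count of its subheadings, for example its H2 elements. The function name `matches_list_title_rule` and the regular expression are illustrative assumptions, not an established tool.

```python
import re

# Hypothetical rule for the "good" hypothesis: the title must BEGIN with a
# number, and that number must equal the page's count of subheadings.
LEADING_NUMBER = re.compile(r"^\s*(\d+)\b")


def matches_list_title_rule(title: str, subheading_count: int) -> bool:
    """Return True if `title` starts with a number equal to `subheading_count`."""
    match = LEADING_NUMBER.match(title)
    if match is None:
        return False  # no leading number, so not a list-post title under this rule
    return int(match.group(1)) == subheading_count


if __name__ == "__main__":
    print(matches_list_title_rule("7 Ways to Speed Up Your Site", 7))
    print(matches_list_title_rule("Speed Up Your Site: 7 Ways", 7))  # number not at the start
    print(matches_list_title_rule("10 Ways to Speed Up Your Site", 7))  # count mismatch
```

Running every candidate title through the same rule helps ensure that the pages you update really do share a single definition of “list post.”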

3. Identify A Control Group

While it isn't possible for us to split-test title tags or schema markup, this doesn't mean we need to throw out the idea of a reasonably controlled experiment.

We can say with more confidence that a change made a difference if we have something that wasn't changed to compare it against: a control group. This matters because countless other variables could affect traffic. If we change a title tag and traffic goes up, we don't necessarily know whether the change was responsible.

It is entirely possible that traffic would have gone up anyway, or that it would have gone up even more if we hadn't made the change.

Having a control group means having a group of pages that aren't changed, so that we can see if the updated pages are impacted positively, negatively, or insignificantly.

For example, if we were to test our list post hypothesis, we would need to select a group of pages that would be updated to include a list post title tag, as well as a group of pages that would not be updated to include a list post title tag.

Our control group pages should be selected as carefully as we choose the pages we plan to change, or our results won't be meaningful.

Keep the following in mind:

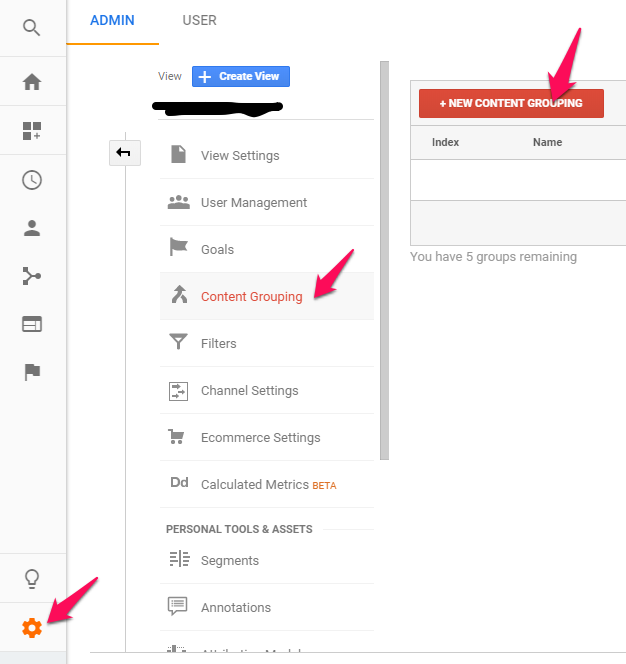

- The pages in our control group should belong to the same category as the pages in our “experimental” group. Running with our list post example, all of the pages in our control group should be pages that could have been updated to be list posts. If not, we risk biasing the results. For example, pages suitable to be changed into list posts might be longer than others, so a change in traffic to those pages could have been the result of a change in the way Google evaluates longer pages, rather than the changes we made to the title tags. In other words, all pages in the experimental and control groups should be suitable for the change.
- After selecting which pages are suitable candidates for the change, the decision about which of them go to the control group and which go to the experimental group should ideally be made at random, or at the very least arbitrarily. Again, if we don't, we risk biasing the results. For example, one poor way to choose which pages to update would be to update the highest-performing pages and compare them to the lowest-performing pages. Pages that are already performing well will respond to changes differently than pages that aren't, so we wouldn't necessarily learn anything meaningful from this “test.”

In Google Analytics, you can use Content Groups to track the two groups separately. You can set them up by going to Admin > View > Content Grouping, selecting the “+ New Content Grouping” button, and then using a tracking code, extraction, or rule definition to define which pages belong to your experimental and control groups.
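
Random assignment of eligible pages is easy to make reproducible. The Python sketch below is a minimal example that assumes you already have a list of page URLs suitable for the change. The URLs and the `assign_groups` helper are hypothetical. The sketch uses a seeded shuffle so that the same split can be recreated later.

```python
import random


def assign_groups(eligible_pages, seed=42):
    """Randomly split eligible pages into experimental and control groups.

    `eligible_pages` should contain only pages suitable for the change
    (e.g. pages that could plausibly become list posts).
    """
    pages = sorted(set(eligible_pages))  # dedupe and fix the order so the seed is reproducible
    rng = random.Random(seed)            # seeded generator so the split can be recreated
    rng.shuffle(pages)
    midpoint = len(pages) // 2           # with an odd count, control gets the extra page
    return {"experimental": pages[:midpoint], "control": pages[midpoint:]}


if __name__ == "__main__":
    candidates = [
        "/blog/speed-up-your-site",
        "/blog/choose-a-host",
        "/blog/image-compression",
        "/blog/caching-basics",
        "/blog/cdn-setup",
        "/blog/lazy-loading",
    ]
    for name, urls in assign_groups(candidates).items():
        print(name, urls)
```

The resulting URL lists could then serve as the basis for the rules that define your experimental and control content groups.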

4. Monitor Rankings And Traffic Separately

Aside from Google Search Console, whose data is highly suspect, we don't have any way of directly measuring the click-through rates of our SERP listings. Since we can't measure CTR directly, we need to monitor our rankings and our traffic separately.

Here is why.

If, as in our list post example, updating the title tags coincided with an overall drop in search traffic, we wouldn't necessarily know that this happened because the change hurt click-through rates. Adding a number to the beginning of the title tags may have pushed keywords further away from the front of the tag, causing rankings for those keywords to drop.

Overall, there may have been an improvement in click-through rates while there was a drop in rankings, leading to an overall drop in search traffic.

A strategy that leads to an overall drop in search traffic isn't one we would want to continue pursuing, but we would be learning the wrong lesson if we attributed the drop to click-through rates. We should only attribute a traffic change to CTR if rankings remain unchanged.
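
The decision rule here is simple: only attribute a traffic change to CTR when rankings held steady. That makes it easy to write down explicitly. The Python sketch below is a hypothetical helper, not part of any SEO tool. It assumes you already have average ranking positions and visit counts for a group of pages from your rank tracker and analytics. The 0.5-position tolerance is an arbitrary assumption that you should tune.

```python
def attribute_traffic_change(rank_before, rank_after, visits_before, visits_after,
                             rank_tolerance=0.5):
    """Return (label, relative traffic change) for one group of pages.

    rank_* are average ranking positions (1 = top, so a higher number is worse);
    visits_* are organic visits over equal-length periods. rank_tolerance is an
    assumed cutoff for treating rankings as unchanged.
    """
    traffic_change = visits_after / visits_before - 1
    rank_shift = rank_after - rank_before  # positive means the pages dropped
    if abs(rank_shift) > rank_tolerance:
        # Rankings moved, so the traffic change can't be pinned on CTR alone.
        return "rankings changed", traffic_change
    # Rankings held steady, so a traffic change more plausibly reflects CTR.
    return "likely CTR", traffic_change


if __name__ == "__main__":
    # Hypothetical numbers: traffic fell 10%, but average position slipped 1.4 spots.
    print(attribute_traffic_change(4.2, 5.6, 1000, 900))
    # Hypothetical numbers: rankings essentially flat, traffic up 8%.
    print(attribute_traffic_change(4.2, 4.3, 1000, 1080))
```

In the first case, the helper refuses to credit or blame CTR, because the ranking drop alone could explain the lost traffic.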

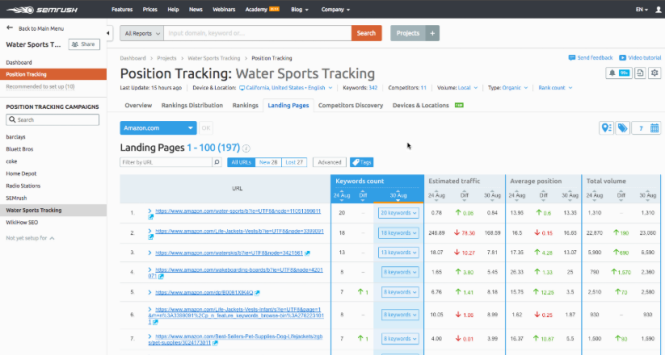

You can use SEMrush to monitor rankings for each page in your control, and experimental groups from the “keywords count” drop-down:

5. Analysis and Iteration

To evaluate the results of your SERP listing test, you will need to allow enough time for search engines to update your SERP listings and for you to collect enough traffic data to reach meaningful conclusions.

Since this is not a formal A/B test, you can't establish statistical significance by comparing traffic to your control and experimental groups with the same math a CRO would use. More advanced time series analysis and quasi-experimental designs can be applied if you happen to have a statistician on staff, but pragmatically it is often acceptable to simply check whether a practically significant impact is observed.
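
One common quasi-experimental technique is difference-in-differences. It subtracts the control group's change in traffic from the experimental group's change, which removes shifts that affected both groups alike. The Python sketch below is a minimal illustration, and the function name and sample numbers are hypothetical. It produces only a point estimate with no confidence interval. It also relies on the assumption that, without the change, both groups would have followed parallel trends.

```python
from statistics import mean


def diff_in_diff(control_pre, control_post, exp_pre, exp_post):
    """Difference-in-differences estimate of a change's effect on daily visits.

    Each argument is a list of daily organic visits for one group in one period.
    Subtracting the control group's change removes shifts that affected both
    groups equally (e.g. seasonality), assuming parallel trends.
    """
    control_change = mean(control_post) - mean(control_pre)
    exp_change = mean(exp_post) - mean(exp_pre)
    return exp_change - control_change


if __name__ == "__main__":
    # Hypothetical daily visits before and after the title tag change
    control_pre = [100, 110, 90, 100]
    control_post = [110, 120, 100, 110]
    exp_pre = [200, 210, 190, 200]
    exp_post = [230, 240, 220, 230]
    effect = diff_in_diff(control_pre, control_post, exp_pre, exp_post)
    print(f"Estimated effect: {effect:+} visits/day")
```

In this example, the control group gained 10 visits a day and the experimental group gained 30, so the estimate attributes about 20 visits a day to the change.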

In most circumstances, we are not trying to learn whether any difference exists between two different ways of setting up a SERP listing. Generally, we are more concerned with whether there is a meaningful difference.

How you define “meaningful” is up to you, but as a rule of thumb, if you are dealing with more than 500 or so visits in total and the difference in traffic between the control group and the experimental group is less than 5%, we can say there was no practical impact. In that case, we learn that we need to go back to the drawing board and think of strategies that will have a stronger impact.
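
The rule of thumb above can be sketched as a small classifier. The sketch below rests on several assumptions that go beyond the text. It reads “difference in traffic” as the gap between the two groups' relative changes. It applies the 500-visit floor to post-change visits across both groups. Below that floor, it reports “not enough data.” The function name `practical_impact` is hypothetical.

```python
def practical_impact(control_before, control_after, exp_before, exp_after,
                     threshold=0.05, min_visits=500):
    """Classify a SERP test result using a practical-significance rule of thumb.

    Arguments are total organic visits per group over equal-length periods
    before and after the change. Assumptions: "difference in traffic" means the
    gap between the groups' relative changes, and the visit floor applies to
    post-change visits across both groups.
    """
    if control_after + exp_after <= min_visits:
        return "not enough data"  # assumption: too little traffic to judge
    control_change = control_after / control_before - 1
    exp_change = exp_after / exp_before - 1
    gap = exp_change - control_change
    if abs(gap) < threshold:
        return "no practical impact"
    return "positive impact" if gap > 0 else "negative impact"


if __name__ == "__main__":
    print(practical_impact(400, 410, 400, 418))  # groups differ by about 2 points
    print(practical_impact(400, 400, 400, 460))  # experimental group up 15%
    print(practical_impact(400, 400, 400, 360))  # experimental group down 10%
```

Both `threshold` and `min_visits` are parameters, so you can adjust them if your own definition of “meaningful” differs.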

Negative Impact

If, on the other hand, we see a significant negative impact on traffic, this isn't great news, but in some ways it is better than no impact, because we learn more from the result. For example, if we discovered that list posts hurt results, this would teach us something about our audience. From this information, we could brainstorm new hypotheses, such as whether our audience responds more to emotional appeals than to the promise of easily digestible content.

Success

If the experiment was a “success,” we can also learn more than the obvious implication that the change should be instituted everywhere. In our example, if list posts outperformed the control group, this could lead us to other hypotheses to test, such as whether our audience is interested in numbers more generally. We could test adding figures and percentages to titles that aren't suitable for list posts or update our meta descriptions to include more quantitative data.

What makes testing so valuable is that it isn't just about the improvements any individual test can make to our SERP click-through rates. It is that we learn things about our audience and can test new, more general ideas based on the results; this allows us to hone our processes and arrive at increasingly positive results.