One of the more common technical SEO challenges faced by SEOs is getting Google to index JavaScript content.

The use of JavaScript across the web is rapidly increasing. It's well-documented that many websites struggle to drive organic growth due to dismissing the importance of JavaScript SEO.

If you work on sites that have been developed using JavaScript frameworks (such as React, Angular, or Vue.js), you will inevitably face different challenges to those using WordPress, Shopify, or other popular CMS platforms.

However, to see success on the search engines, you must know exactly how to check whether your site's pages can be rendered and indexed, how to identify issues, and how to make your site search engine friendly.

In this guide, we're going to teach you everything you need to know about JavaScript SEO. Specifically, we're going to take a look at:

What Is JavaScript?
What Is JavaScript SEO?
How Does Google Crawl and Index JavaScript?
How To Make Your Website's JavaScript Content SEO-Friendly
Server-Side Rendering vs. Client-Side Rendering vs. Dynamic Rendering
Common JavaScript SEO Issues and How To Avoid Them

What Is JavaScript?

JavaScript, or JS, is a programming (or scripting) language for websites.

In short, JavaScript sits alongside HTML and CSS to offer a level of interactivity that would otherwise not be possible. For most websites, this means animated graphics and sliders, interactive forms, maps, web-based games, and other interactive features.

But it's becoming increasingly common for entire websites to be built using JavaScript frameworks like React or Angular, which can be used to power mobile and web apps. And the fact that these frameworks can build both single-page and multiple-page web applications has made them increasingly popular with developers.

But using JavaScript, along with the frameworks built on it, brings a set of SEO challenges. We'll look at these below.

What Is JavaScript SEO?

JavaScript SEO is a part of technical SEO that involves making it easy for search engines to crawl and index JavaScript.

SEO for JavaScript sites presents its own unique challenges, and there are processes you must follow to make it possible for the search engines to index your web pages and so maximize your chances of ranking.

That said, it's easy to fall foul of common mistakes when working with JavaScript sites. There's going to be a lot more back-and-forth with developers to ensure everything is done correctly.

However, JavaScript is gaining popularity and, as SEOs, understanding how to optimize these sites properly is an important skill to learn.

How Does Google Crawl and Index JavaScript?

Let's make one thing clear: Google is better at rendering JavaScript than it was a few years back, when it would commonly take weeks for this to happen.

But before we take a deep dive into ways to make sure that your website's JavaScript is SEO friendly and can actually be crawled and indexed, you need to understand how Google processes it. This happens in a three-phase process:

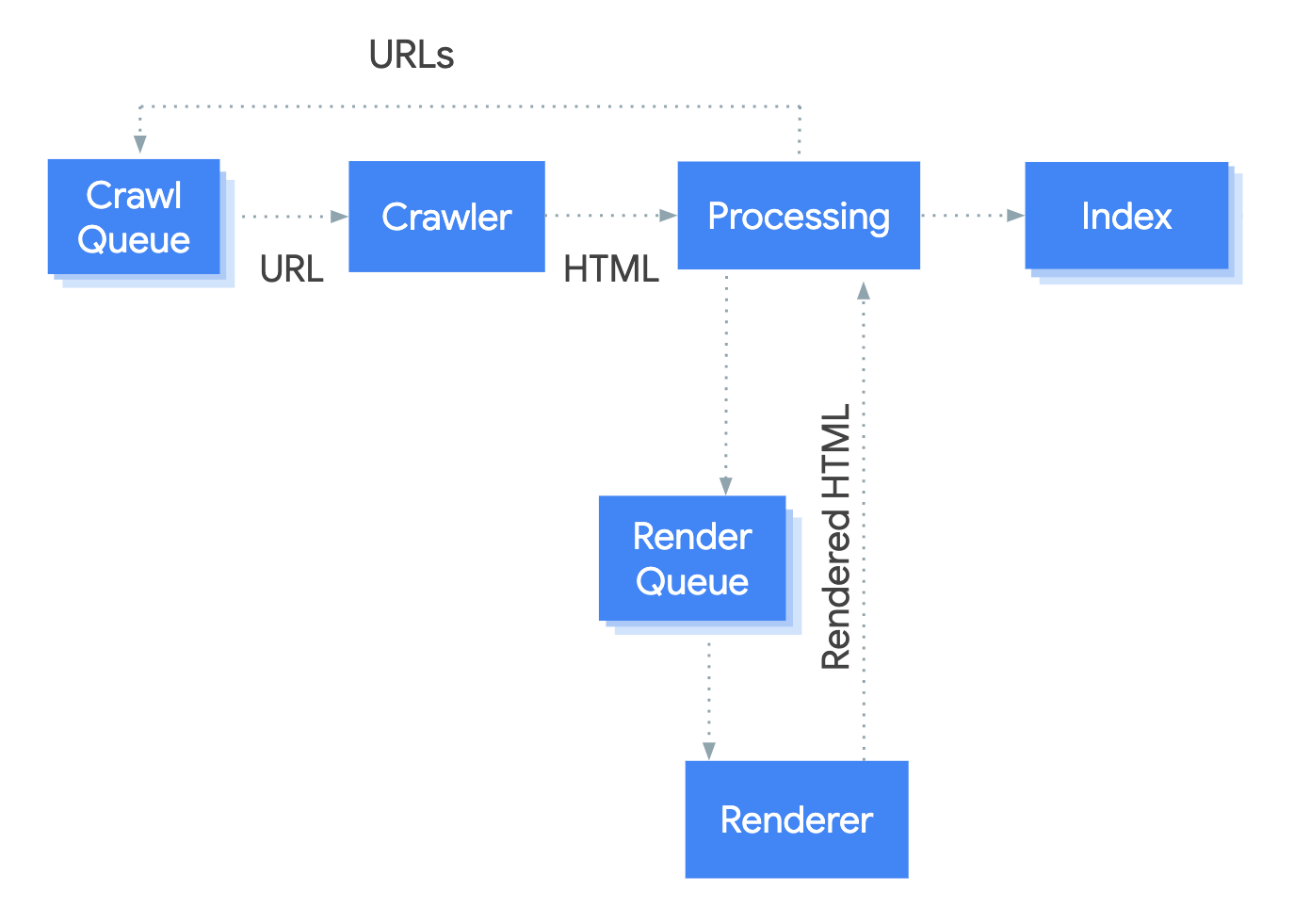

Crawling
Rendering
Indexing

You can see this process visualized in more detail below:

Image credit: Google

Let's look at this process in a little more depth, comparing it to how Googlebot crawls an HTML page.

Crawling an HTML page is a quick and simple process. It starts with the HTML file being downloaded, then links are extracted and CSS files downloaded before these resources are sent to Caffeine, Google's indexer. Caffeine then indexes the page.

With a JavaScript page, the process also starts with the HTML file being downloaded. But here the links are generated by JavaScript, so they cannot be extracted in the same way. Googlebot downloads the page's CSS and JS files and then needs to use the Web Rendering Service (WRS) that's part of Caffeine to render this content. Only then can the content be indexed and the links extracted.

And the reality is that this is a complicated process that requires more time and resources than an HTML page, and Google cannot index content until the JavaScript has been rendered.

Crawling an HTML site is fast and efficient: Googlebot downloads the HTML, then extracts the links on the page and crawls them. But when JavaScript is involved, this cannot happen in the same way, as this must be rendered before links can be extracted.
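To make the difference concrete, here is a minimal Python sketch of the kind of link extraction a crawler can run on raw HTML without rendering anything. The two HTML strings and the `extract_links` helper are illustrative assumptions, not a description of how Googlebot is actually implemented.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags in raw, unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Return every link found in the HTML source, without running any scripts."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


# Hypothetical static page: the links are present in the HTML source.
static_html = '<nav><a href="/shoes">Shoes</a><a href="/hats">Hats</a></nav>'

# Hypothetical client-side rendered page: the links only exist after
# the script has run, so the raw HTML contains none.
csr_html = '<div id="app"></div><script src="/bundle.js"></script>'

print(extract_links(static_html))  # ['/shoes', '/hats']
print(extract_links(csr_html))     # []
```

The second result is empty because nothing in this sketch executes `/bundle.js`, which is the step that rendering has to cover before any links can be found.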

Let's take a look at ways to make your website's JavaScript content SEO friendly.

How To Make Your Website's JavaScript Content SEO-Friendly

Google must be able to crawl and render your website's JavaScript to be able to index it. However, it's not uncommon to face challenges that prevent this from happening.

But when it comes to making sure your website's JavaScript is SEO friendly, there are several steps that you can follow to make sure that your content is being rendered and indexed.

And really, it comes down to three things:

Making sure Google can crawl your website's content
Making sure Google can render your website's content
Making sure Google can index your website's content

There are steps you can take to make sure that these things can happen, as well as ways to improve the search engine friendliness of JavaScript content.

Let's take a look at what these are.

Ensure That Google Can Render Your Web Pages Using Google Search Console

While Googlebot is based on Chrome's newest version, it doesn't behave in the same way as a browser. That means opening up your site in Chrome isn't a guarantee that your website's content can be rendered.

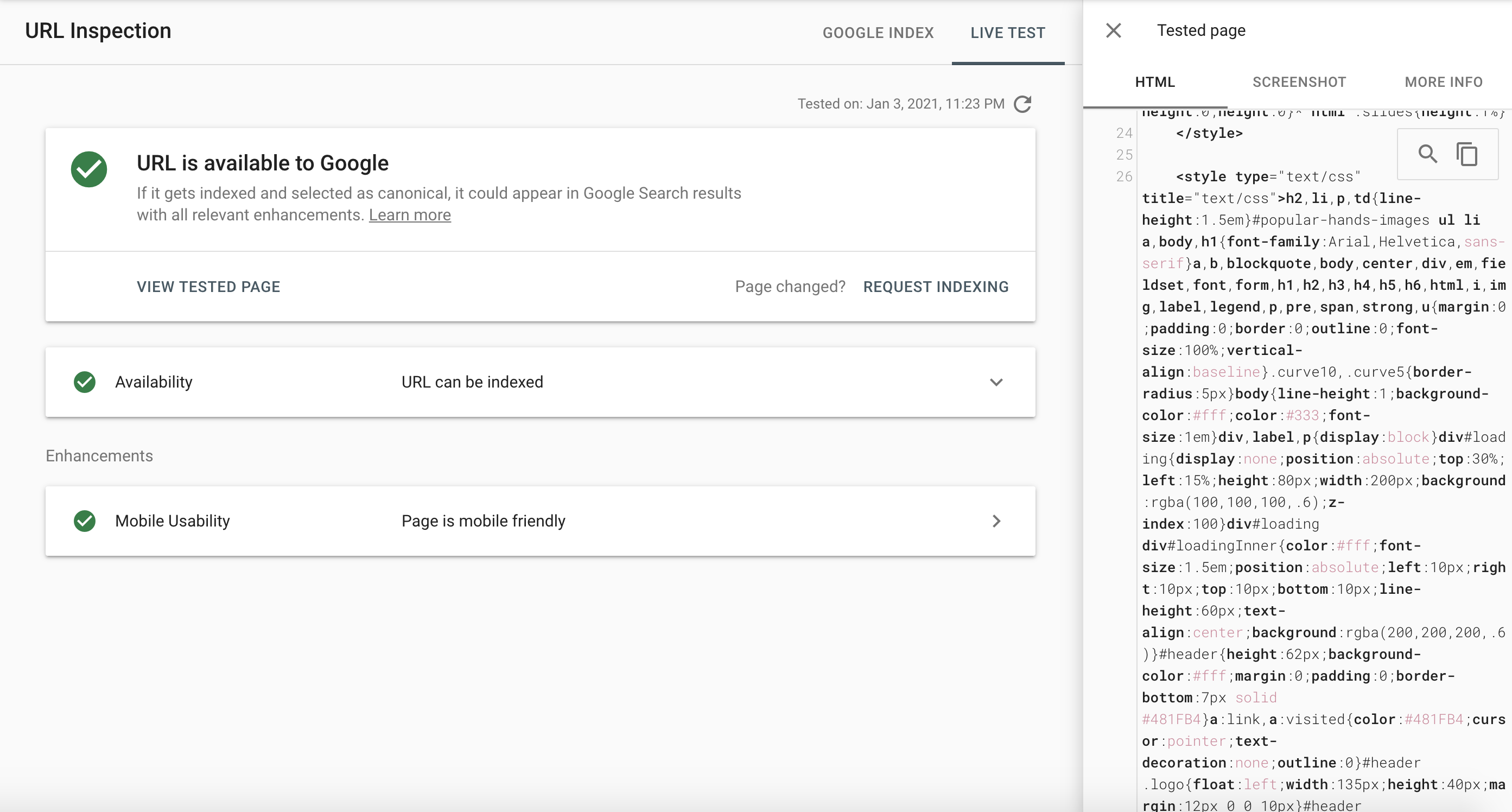

You can use the URL Inspection Tool in Google Search Console to check that Google can render your webpages.

Enter the URL of a page that you want to test and look for the 'TEST LIVE URL' button at the top right of your screen.

After a minute or two, you'll see a 'live test' tab appear, and when you click 'view tested page,' you'll see a screenshot of the page that shows how Google renders it. You can also view the rendered code within the HTML tab.

Check for any discrepancies or missing content, as this can mean that resources (including JavaScript) are blocked or that errors or timeouts occurred. Hit the 'more info' tab to view any errors, as these can help you determine the cause.

The Most Common Reason Why Google Cannot Render JavaScript Pages

The most common reason why Google cannot render JavaScript pages is that these resources are blocked in your site's robots.txt file, often accidentally.

Add the following code to this file to ensure that no crucial resources are blocked from being crawled:

User-Agent: Googlebot
Allow: /*.js
Allow: /*.css

But let's clear one thing up: Google doesn't index .js or .css files in the search results. These resources are used to render a webpage.

There's no reason to block crucial resources, and doing so can prevent your content from being rendered and, in turn, from being indexed.
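If you want to check a robots.txt file for accidental blocks before deploying it, a rough sketch using Python's standard-library parser might look like the following. The robots.txt content and the resource URLs are hypothetical. Note also that `urllib.robotparser` does plain prefix matching and does not implement Google's wildcard extensions, so this example uses a directory rule and is only an approximation of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks a script directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
"""

# Hypothetical resources that a page needs in order to render.
RESOURCES = [
    "https://yourdomain.com/assets/js/app.js",
    "https://yourdomain.com/assets/css/site.css",
]


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_resources(ROBOTS_TXT, RESOURCES))
# ['https://yourdomain.com/assets/js/app.js']
```

Any URL in the returned list is a resource that a crawler following these rules would not fetch, and so could not use to render the page.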

Ensure That Google Is Indexing Your JavaScript Content

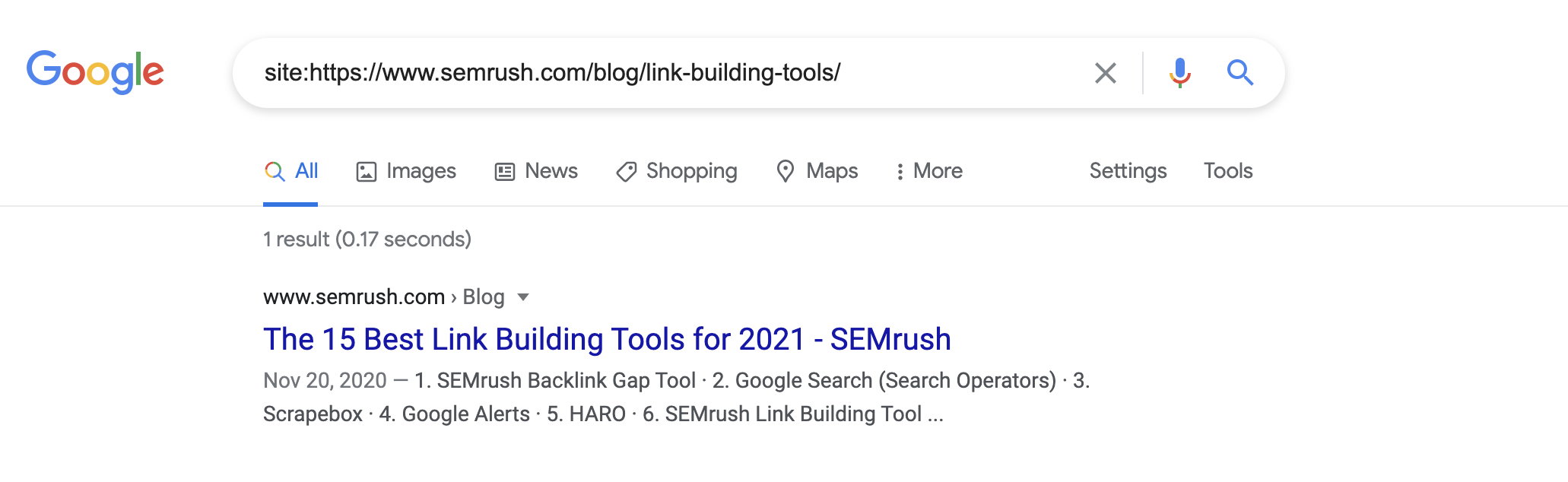

If you've confirmed that your web page is rendering properly, you need to determine whether or not it's being indexed.

And you can check this through Google Search Console as well as straight on the search engine.

Head to Google and use the site: command to see whether your web page is in the index. As an example, replace yourdomain.com below with the URL of the page you want to test:

site:yourdomain.com/page-URL/

If the page is in Google's index, you'll see the page showing as a returned result.

If you don't see the URL, this means that the page isn't in the index.

But let's assume it is and check whether or not a section of JavaScript-generated content is indexed.

Again, use the site: command and include a snippet of content alongside this. For example:

site:yourdomain.com/page-URL/ "snippet of JS content"

Here, you're checking whether this content has been indexed, and if it has, you'll see this text within the snippet.

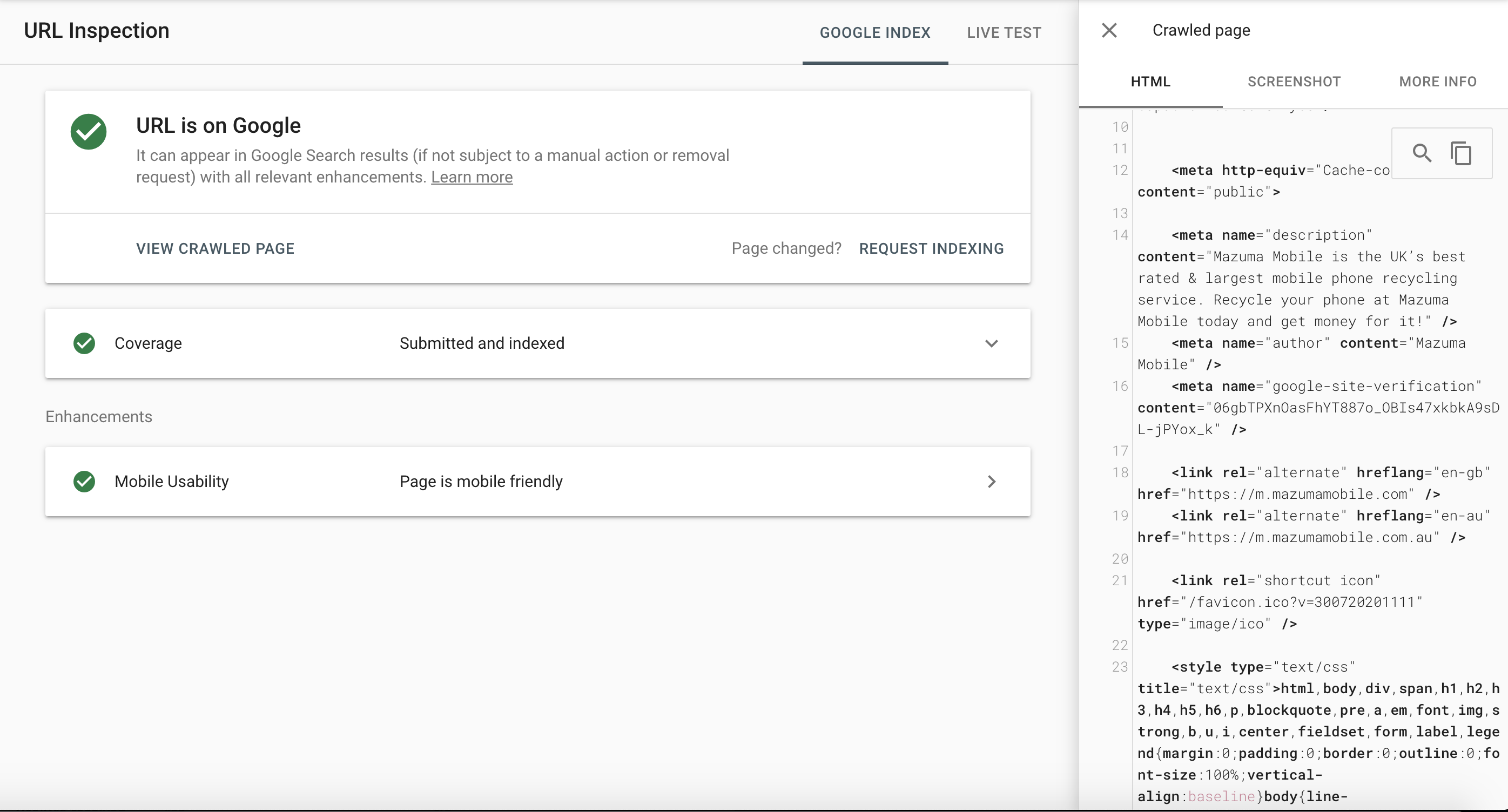

You can also analyze whether JavaScript content is indexed using Google Search Console, again using the URL Inspection Tool.

This time, rather than testing the live URL, click the 'view crawled page' button and view the indexed page's HTML source code.

Scan the HTML code for snippets of content that you know are generated by JavaScript.
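Scanning by eye gets tedious on large pages. As a rough aid, you could paste the crawled HTML into a small script like the one below. This is only a sketch: the `missing_snippets` helper, the sample HTML, and the snippets are all hypothetical, and the check is a simple text search that will not match text split across several tags.

```python
def missing_snippets(html, snippets):
    """Return the snippets that do not appear anywhere in the given HTML.

    Whitespace and letter case are normalized so that line breaks in the
    source do not cause false negatives.
    """
    normalized_html = " ".join(html.split()).lower()
    return [
        snippet
        for snippet in snippets
        if " ".join(snippet.split()).lower() not in normalized_html
    ]


# Hypothetical HTML copied from the crawled page view.
crawled_html = """<html><body><h1>Blue Widgets</h1>
<div id="reviews"></div></body></html>"""

# Hypothetical phrases that JavaScript is expected to add to the page.
expected = ["Blue Widgets", "Rated 4.8 out of 5"]

print(missing_snippets(crawled_html, expected))  # ['Rated 4.8 out of 5']
```

Anything the function returns is content you expected JavaScript to generate but that is absent from the HTML you pasted in.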

Common Reasons Why Google Cannot Index JavaScript Content

There could be many reasons why Google is unable to index your JavaScript content, including:

The content cannot be rendered in the first instance
The URL cannot be discovered due to links to it being generated by JavaScript on a click
The page times out while Google is indexing the content
Google determined that the JS resources do not change the page enough to warrant being downloaded

We'll look at the solutions to some of these commonly seen problems below.

Server-Side Rendering vs. Client-Side Rendering vs. Dynamic Rendering

Whether or not you face issues with Google indexing your JavaScript content is largely impacted by how your site renders this code. And you must understand the differences between server-side rendering, client-side rendering, and dynamic rendering.

As SEOs, we need to learn to work with developers to overcome the challenges of working with JavaScript. While Google continues to improve the way it crawls, renders, and indexes content generated by JavaScript, you can prevent many of the commonly experienced problems from becoming issues in the first place.

In fact, understanding the different ways to render JavaScript is perhaps the single most important thing you need to know for JavaScript SEO.

So what are these different types of rendering, and what do they mean?

Server-Side Rendering (SSR) is when the JavaScript is rendered on the server, and a rendered HTML page is served to the client (the browser, Googlebot, etc.). The process for the page to be crawled and indexed is just the same as any HTML page as we described above, and JavaScript-specific issues shouldn't exist.

According to Free Code Camp, here's how SSR works: "Whenever you visit a website, your browser makes a request to the server that contains the contents of the website. Once the request is done processing, your browser gets back the fully rendered HTML and displays it on the screen."

The problem here is that SSR can be complex and challenging for developers. Still, tools such as Gatsby and Next.js (for the React framework), Angular Universal (for the Angular framework), or Nuxt.js (for the Vue.js framework) exist to help implement this.

Client-Side Rendering (CSR) is pretty much the polar opposite of SSR and is where JavaScript is rendered by the client (browser or Googlebot, in this case) using the DOM. When the client has to render the JavaScript, the challenges outlined above can exist when Googlebot attempts to crawl, render, and index content.

Again, according to Free Code Camp, "When developers talk about client-side rendering, they’re talking about rendering content in the browser using JavaScript. So instead of getting all of the content from the HTML document itself, you are getting a bare-bones HTML document with a JavaScript file that will render the rest of the site using the browser."

When you understand how CSR works, it becomes easier to see why SEO issues can occur.
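The contrast can be sketched in a few lines of Python. The product data, function names, and `/bundle.js` path below are hypothetical and stand in for whatever your framework does. The point is only to show what each approach puts in the initial HTML response.

```python
from html import escape

# Hypothetical product data that the page needs to display.
PRODUCT = {"name": "Blue Widget", "price": "19.99"}


def render_server_side(product):
    """SSR: the server builds the finished HTML, so the content is in the response."""
    return (
        "<html><body>"
        f"<h1>{escape(product['name'])}</h1>"
        f"<p>Price: {escape(product['price'])}</p>"
        "</body></html>"
    )


def render_client_side_shell():
    """CSR: the server sends a bare shell; a script fills it in later in the browser."""
    return (
        "<html><body>"
        '<div id="app"></div><script src="/bundle.js"></script>'
        "</body></html>"
    )


ssr_response = render_server_side(PRODUCT)
csr_response = render_client_side_shell()

print("Blue Widget" in ssr_response)  # True
print("Blue Widget" in csr_response)  # False
```

With CSR, the product name only appears once the client has downloaded and executed the script, which is the extra step where crawling and indexing problems tend to creep in.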

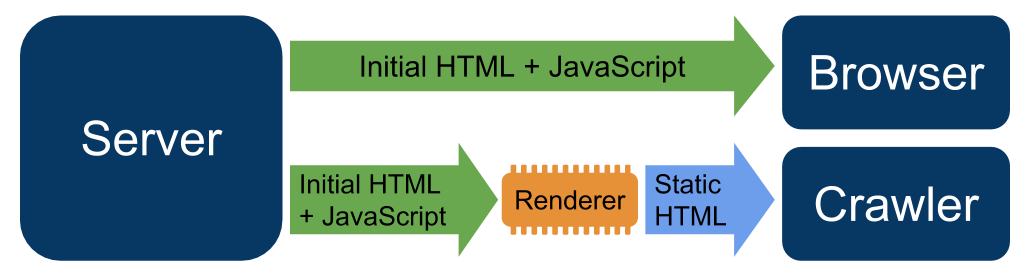

Dynamic Rendering is an alternative to server-side rendering. It's a viable solution for serving users a version of the site whose JavaScript content is generated in the browser, while serving a static version to Googlebot.

This is something that was introduced by Google's John Mueller at Google I/O 2018.

Think of this as sending client-side rendered content to users in the browser and server-side rendered content to the search engines. This is also supported and recommended by Bing and can be achieved using tools such as prerender.io, a tool that describes itself as 'rocket science for JavaScript SEO.' Puppeteer and Rendertron are other alternatives to this.

Image Credit: Google

To clear up a question that many SEOs will likely have: dynamic rendering is not seen as cloaking as long as the content that's served is similar. The only time when this would be considered cloaking is if entirely different content was served. With dynamic rendering, the content that users and search engines see will be the same, potentially just with a different level of interactivity.
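In practice, dynamic rendering usually comes down to a user-agent check in front of your site. The Python sketch below shows the idea under some loud assumptions: the list of crawler tokens, the `choose_response` helper, and both HTML strings are hypothetical, and a real setup would typically rely on a prerendering tool rather than hand-written strings.

```python
import re

# Assumed list of crawler tokens; extend it for the bots you care about.
BOT_PATTERN = re.compile(r"googlebot|bingbot", re.IGNORECASE)


def choose_response(user_agent, prerendered_html, app_shell_html):
    """Serve static HTML to known crawlers and the JavaScript app shell to everyone else."""
    if BOT_PATTERN.search(user_agent or ""):
        return prerendered_html
    return app_shell_html


# Hypothetical responses: same content, different level of interactivity.
PRERENDERED = "<html><body><h1>Blue Widget</h1></body></html>"
APP_SHELL = '<html><body><div id="app"></div><script src="/bundle.js"></script></body></html>'

googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"

print(choose_response(googlebot_ua, PRERENDERED, APP_SHELL) == PRERENDERED)  # True
print(choose_response(browser_ua, PRERENDERED, APP_SHELL) == APP_SHELL)      # True
```

To stay on the right side of the cloaking question, the prerendered HTML should be a snapshot of what the app shell eventually renders for users, not separately written content.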

You can learn more about how to set up dynamic rendering here.

Common JavaScript SEO Issues and How To Avoid Them

It's not uncommon to face SEO issues caused by JavaScript, and below you'll find some of the ones that are frequently seen, as well as tips on how to avoid these.

Blocking .js files in your robots.txt file can prevent Googlebot from crawling these resources and, therefore, from rendering and indexing your content. Allow these files to be crawled to avoid issues caused by this.

Google typically doesn't wait long periods of time for JavaScript content to render, and if this is being delayed, you may find that content isn't indexed because of a timeout error.

Setting up pagination where links to pages beyond the first (let's say on an eCommerce category) are only generated by an on-click event will result in these subsequent pages not being crawled, as search engines do not click buttons. Always be sure to use static links to help Googlebot discover your site's pages.

When lazy loading a page using JavaScript, be sure not to delay the loading of content that should be indexed. Lazy loading should usually be used for images rather than text content.

Client-side rendered JavaScript is unable to return server errors in the same way as server-side rendered content. Redirect errors to a page that returns a 404 status code, for example.

Make sure that static URLs are generated for your site's web pages, rather than using #. That means ensuring your URLs look like this (yourdomain.com/web-page) and not like this (yourdomain.com/#/web-page) or this (yourdomain.com#web-page). Otherwise, these pages will not be indexed, as Google typically ignores hashes.

At the end of the day, there's no denying that JavaScript can cause problems for crawling and indexing your website's content. Still, by understanding why this is and knowing the best way of working with content generated in this way, you can massively reduce these issues.
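As an illustration of the hash URL issue from the list above, this short Python sketch shows what is left of a URL once everything after the # is dropped. The `url_without_fragment` helper is a hypothetical name, and the sketch shows only the general effect of ignoring the fragment, not Google's exact URL handling.

```python
from urllib.parse import urldefrag


def url_without_fragment(url):
    """Return the URL with everything from '#' onward removed."""
    return urldefrag(url).url


urls = [
    "https://yourdomain.com/web-page",
    "https://yourdomain.com/#/web-page",
    "https://yourdomain.com#web-page",
]

for url in urls:
    print(url, "->", url_without_fragment(url))
# https://yourdomain.com/web-page -> https://yourdomain.com/web-page
# https://yourdomain.com/#/web-page -> https://yourdomain.com/
# https://yourdomain.com#web-page -> https://yourdomain.com
```

Only the static URL keeps its own address. Both hash-based URLs collapse to the homepage, which is why pages routed this way have nothing distinct to be indexed under.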

It takes time to get to grips with JavaScript fully, but even as Google gets better at indexing it, there's a distinct need to build up your knowledge and expertise in overcoming the problems you might face.

Further recommended reading on JavaScript SEO includes:

Google Search Central: Understand the JavaScript SEO basics
Search Engine Journal: SEO & JavaScript: The Good, the Bad & the Uncertainty
Search Engine Watch: JavaScript rendering and the problems for SEO in 2020
Blueclaw: JavaScript & SEO: What You Need to Know