Just as CEOs have their assistants and Santa has his elves, Google (along with other search engines) has its website crawlers.

Website crawlers (or web crawlers) might sound kind of creepy. What are these mysterious things crawling around on the world wide web and what exactly are they doing?

In this guide, we’ll look at what web crawlers are, how search engines use them, and how they can be useful to website owners.

We’ll also let you in on how you can use our free website crawler, the Site Audit tool, to discover what web crawlers might find on your site and how you can improve your online performance as a result.

What Is a Web Crawler and What Does It Do?

A web crawler is an internet bot, also known as a web spider, automatic indexer, or web robot, which works to systematically crawl the web. These bots are almost like the archivists and librarians of the internet.

They pull together and download information and content, which is then indexed and cataloged so that it can appear in the SERPs in order of relevance.

This is how a search engine such as Google is able to quickly respond to users’ search queries with exactly what we’re looking for: by applying its search algorithm to the web crawler data.

Hence, crawlability is a key performance attribute of your website.

How Do Web Crawlers Work?

To find the most reliable and relevant information, a bot will start with a certain selection of web pages. It will search (or crawl) these for data, then follow the links mentioned in them (or spider) to other pages, where it will do the same thing all over again.
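The crawl-then-follow-links loop described above is essentially a breadth-first traversal. Here is a minimal sketch of it. The `get_links` function is a hypothetical stand-in for "fetch the page and extract its links", and the site is a tiny made-up link graph rather than the live web:

```python
from collections import deque
from typing import Callable, Iterable


def crawl(seeds: Iterable[str],
          get_links: Callable[[str], list[str]],
          max_pages: int = 100) -> list[str]:
    """Breadth-first crawl: visit the seed pages, then the pages they link to."""
    queue = deque(dict.fromkeys(seeds))  # frontier of URLs waiting to be crawled
    seen = set(queue)                    # everything ever queued, so no page is crawled twice
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)              # "crawl" the page (fetching/parsing omitted)
        for link in get_links(url):      # "spider" to the pages it links to
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# A tiny, made-up site: each page maps to the pages it links to.
site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": [],
    "/blog/post-1": ["/about"],
}

print(crawl(["/"], lambda url: site.get(url, [])))
```

Starting from the homepage, this visits `/`, `/blog`, `/about`, and then `/blog/post-1`. The `seen` set stops the crawler from looping back to pages it has already queued.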

In the end, crawlers gather hundreds of thousands of pages whose information could potentially answer your search query.

The next step for search engines like Google is to rank all the pages according to specific factors to present the users with only the best, most reliable, most accurate, and most interesting content.

The factors influencing Google’s algorithm and ranking process are numerous and ever-changing. Some are widely known (keywords, keyword placement, internal linking structure, external links, etc.). Others, such as the overall quality of the website, are harder to pinpoint.

Basically, when we talk about how crawlable your website is, we’re assessing how easily web bots can crawl your site for information and content. The clearer your site structure and navigation, the more likely you are to rank higher in the SERPs.

In short, web crawlers and crawlability are at the heart of SEO.

How Semrush Uses Web Crawlers

Website crawlers aren’t just a secret tool of search engines. At Semrush, we also use web crawlers. We do this for two key reasons:

1. To build and maintain our backlinks database
2. To help you analyze the health of your site

Our backlinks database is a huge part of what we use to make our tools stronger. Our crawlers regularly search the web for new backlinks to allow us to update our interfaces.

Thanks to this, you can study your site’s backlinks through the Backlink Audit tool and check out your competitors’ backlink profiles through our Backlink Analytics tool.

Basically, you can keep an eye on the links that your competitors are making and breaking while ensuring that your backlinks are healthy.

The second reason we use web crawlers is for our Site Audit tool. The Site Audit tool is a high-powered website crawler that will comb and categorize your site content to let you analyze its health.

When you do a site audit through Semrush, the tool crawls your website for you to highlight any bottlenecks or errors, making it easier for you to change gears and optimize your website on the spot. It’s a super-easy way to crawl a website.

Why you should use the Semrush Site Audit tool to crawl your site

By using the Site Audit tool, you ask our crawlers to access a site. The crawlers will then return a list of issues that show exactly where a given website needs to improve to boost its SEO.

There are over 120 issues that you can check, including:

- duplicate content
- broken links
- HTTPS implementation
- crawlability (yes, we can tell you how easy it is for crawlers to access your website!)
- indexability

And this is all finished in minutes, with an easy-to-follow user interface, so there’s no need to worry about wasting hours only to be left with a huge document of unreadable data.

What are the benefits of website crawling for you?

But why is it so important to check this stuff out? Let’s break down the benefits of a few of these checks.

Crawlability

It should come as no surprise that the crawlability check is easily the most relevant. Our web crawlers can tell you exactly how easy it is for Googlebot to navigate your site and access your information.

You’ll learn how to clean up your site structure and organize your content, focusing on your sitemap, robots.txt, internal links, and URL structure.
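If you want a quick look at how a crawler interprets your robots.txt rules, Python’s standard `urllib.robotparser` module can evaluate them offline. This is a minimal sketch that uses a made-up robots.txt for the placeholder domain example.com. It checks only the rules in that file, not the many other signals a full audit looks at:

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt for the placeholder domain example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() accepts the file's lines directly

for url in ("https://www.example.com/blog/", "https://www.example.com/checkout/cart"):
    # can_fetch() applies the rules for the given user agent ("*" rules here)
    print(url, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")

print("Sitemaps declared:", parser.site_maps())  # site_maps() needs Python 3.8+
```

To check a live file instead, you can call `set_url()` and `read()` in place of `parse()`.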

Sometimes, pages on your site can’t be crawled at all. There are many possible reasons for this, such as a slow response from the server (longer than 5 seconds) or an outright refusal of access by the server. The main thing is that once you know you have a problem, you can start fixing it.
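The two causes mentioned above, a slow server (over 5 seconds) and an outright refusal, can be distinguished with a single request. Here is a minimal sketch that uses only the standard library. The labels, and the choice of 401/403 as a "refusal", are illustrative assumptions, not how Site Audit classifies pages:

```python
import time
import urllib.error
import urllib.request
from typing import Optional, Tuple

SLOW_RESPONSE_SECONDS = 5  # threshold taken from the text above


def fetch_status(url: str) -> Tuple[Optional[int], float]:
    """Request a URL and return (HTTP status or None, seconds elapsed)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=SLOW_RESPONSE_SECONDS) as resp:
            status: Optional[int] = resp.status
    except urllib.error.HTTPError as err:
        status = err.code            # the server answered, but with an error (e.g. 403)
    except OSError:
        status = None                # no usable answer: timeout, refused connection, DNS failure
    return status, time.monotonic() - start


def crawl_problem(status: Optional[int], elapsed: float) -> Optional[str]:
    """Label why a page may be uncrawlable; None means no problem was found."""
    if status is None:
        return "no response (timeout or connection refused)"
    if status in (401, 403):
        return "access refused by server"
    if status >= 400:
        return f"error status {status}"
    if elapsed > SLOW_RESPONSE_SECONDS:
        return "slow response"
    return None
```

With network access, `crawl_problem(*fetch_status("https://www.example.com/"))` combines the two functions. Keeping the classification separate from the request makes the logic easy to test without a live server.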

HTTPS implementation

This is a really important part of the audit if you want to move your website from HTTP to HTTPS. We’ll help you avoid some of the most common mistakes that site owners make in this area by crawling for proper certificates, redirects, canonicals, encryption, and more. Our web crawlers will make this as clear as possible.
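A common migration mistake is an HTTP page that doesn’t redirect permanently to its HTTPS counterpart. Here is a minimal sketch of that single check. It assumes you already have each URL’s status code and `Location` header, for example from a crawl. Certificate, canonical, and encryption checks are left out:

```python
from typing import Optional
from urllib.parse import urlsplit


def https_redirect_issue(url: str, status: int, location: Optional[str]) -> Optional[str]:
    """Check that an http:// URL permanently redirects to an https:// URL."""
    if urlsplit(url).scheme != "http":
        return None  # only plain-HTTP URLs need this redirect
    if status not in (301, 308):
        # 302/307 are temporary redirects, which is not what a migration should use
        return f"expected a permanent redirect (301/308), got {status}"
    if not location or urlsplit(location).scheme != "https":
        return "redirect does not point to an HTTPS URL"
    return None


print(https_redirect_issue("http://example.com/", 302, "https://example.com/"))
```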

Broken links

Broken links are a classic cause of user discontent. Too many broken links might even drop your placement in the SERPs because they can lead crawlers to believe that your website is poorly maintained or coded.

Our crawlers will find these broken links so you can fix them before it’s too late. The fixes themselves are simple: remove the link, replace it, or contact the owner of the website you’re linking to and report the issue.
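At its core, finding broken links takes two steps: collect every link on a page, then check which ones return an error status (4xx or 5xx). Here is a minimal sketch using Python’s standard `html.parser`. To keep it offline, the status codes come from a dictionary instead of real HTTP requests, so the URLs and codes are made-up examples:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links like "/pricing" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def broken_links(html: str, base_url: str, status_of: dict[str, int]) -> list[str]:
    """Return links on the page whose (already known) status code is 4xx or 5xx."""
    extractor = LinkExtractor(base_url)
    extractor.feed(html)
    extractor.close()
    # Links missing from status_of are treated as unchecked rather than broken.
    return [link for link in extractor.links if status_of.get(link, 0) >= 400]


page = '<a href="/pricing">Pricing</a> <a href="https://example.org/old-page">Old post</a>'
statuses = {
    "https://www.example.com/pricing": 200,
    "https://example.org/old-page": 404,
}
print(broken_links(page, "https://www.example.com/blog/", statuses))
```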

Duplicate content

Duplicate content can cause your SEO some big problems. In the best case, it might cause search engines to choose one of your duplicated pages to rank, pushing out the other one. In the worst case, search engines may assume that you’re trying to manipulate the SERPs and downgrade or ban your website altogether.

A site audit can help you nip that in the bud. Our web crawlers will find the duplicate content on your site and list it in an orderly way.
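The simplest form of duplicate detection fingerprints each page’s text and groups pages that share a fingerprint. Here is a minimal sketch that assumes the page text has already been extracted. It only catches exact duplicates after whitespace and case are normalized. Near-duplicates need fuzzier techniques, and this is not how Site Audit itself compares pages:

```python
import hashlib
import re
from collections import defaultdict


def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose text is identical after normalizing whitespace and case."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        normalized = re.sub(r"\s+", " ", text).strip().lower()
        fingerprint = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[fingerprint].append(url)
    # Only fingerprints shared by two or more URLs indicate duplicates.
    return [urls for urls in groups.values() if len(urls) > 1]


pages = {
    "/shoes": "Red running shoes.   Free delivery.",
    "/shoes?color=red": "Red running shoes. Free delivery.",
    "/about": "About our store.",
}
print(find_exact_duplicates(pages))
```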

You can then use your preferred method to fix the issue, whether that’s informing search engines by adding a rel="canonical" link to the correct page, using a 301 redirect, or manually editing the content on the affected pages.

You can find out more about these issues in our previous guide on how to fix crawlability issues.

How to Set Up a Website Crawler Using Semrush Site Audit

Setting up a website crawler through Semrush’s Site Audit is so easy that it only takes six steps.

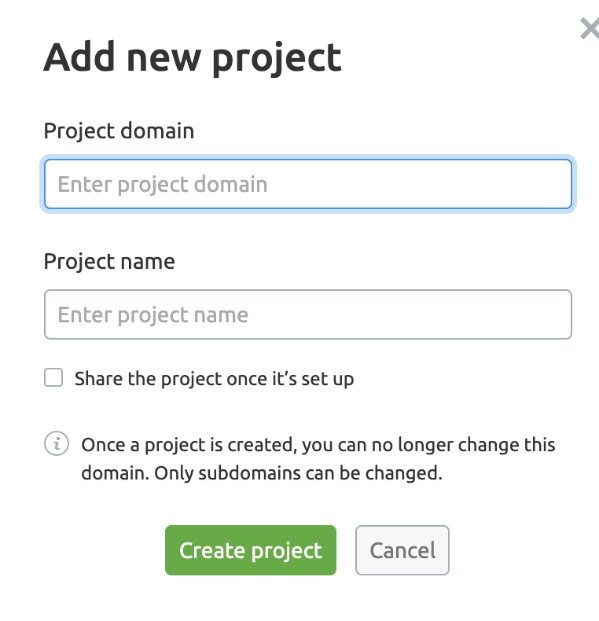

Before we get started, make sure you’ve set up your project. You can do that easily from your dashboard. Alternatively, pick up a project you’ve already started but haven’t yet done a site audit for.

Step 1: Basic Settings

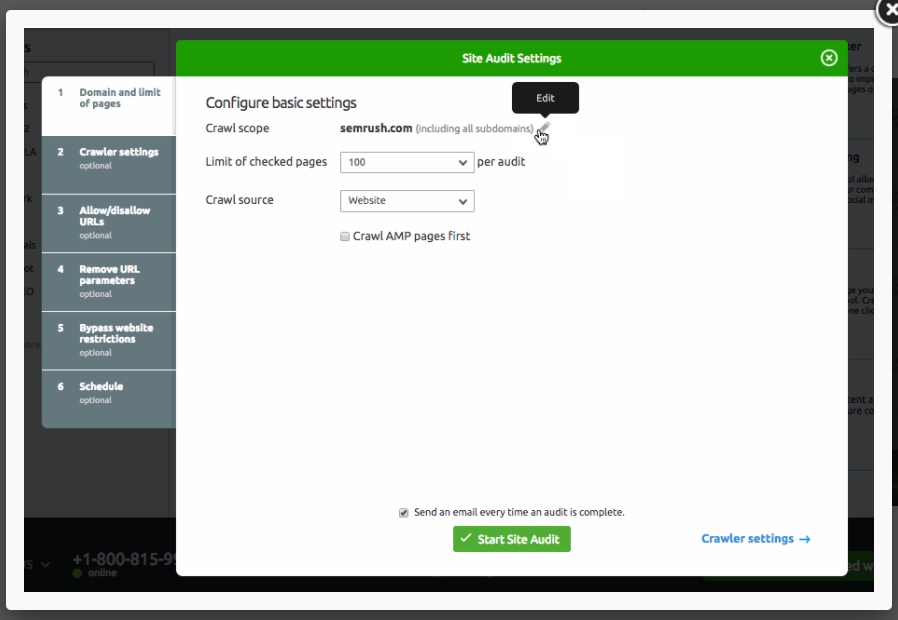

Once your project is established, it’s time for step one: configuring your basic settings.

Firstly, set your crawl scope. Whatever specific domain, subdomain, or subfolder you want to crawl, you can enter it here in the ‘crawl scope’ section. As shown below, if you enter a domain, you can also choose whether to crawl all of its subdomains.

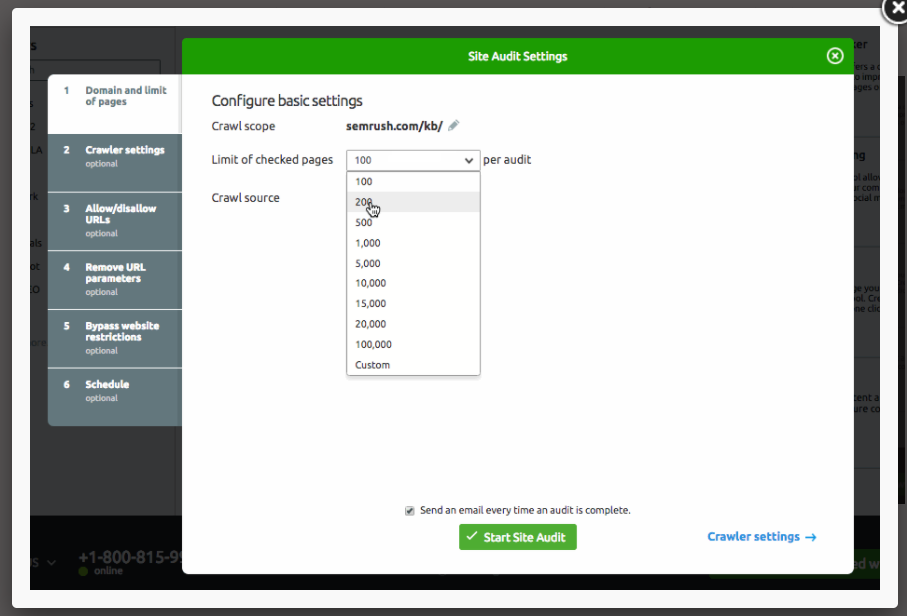
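To illustrate what a crawl scope does, here is a minimal sketch of how a crawler might decide whether a URL falls inside a domain or subfolder scope, with an optional switch for subdomains. The `in_scope` function and the `host/path` scope format are illustrative assumptions, not Semrush’s implementation:

```python
from urllib.parse import urlsplit


def in_scope(url: str, scope: str, include_subdomains: bool = False) -> bool:
    """Check a URL against a scope such as 'example.com' or 'example.com/blog'."""
    scope_host, _, scope_path = scope.partition("/")
    scope_host = scope_host.lower()           # hostnames are case-insensitive; paths are not
    parts = urlsplit(url)
    host = parts.hostname or ""               # .hostname is already lowercased

    host_ok = host == scope_host or (include_subdomains and host.endswith("." + scope_host))
    if not scope_path:
        return host_ok
    prefix = "/" + scope_path.strip("/")
    # Match the subfolder itself or anything under it, but not '/blogging' for '/blog'.
    path_ok = parts.path == prefix or parts.path.startswith(prefix + "/")
    return host_ok and path_ok


print(in_scope("https://shop.example.com/", "example.com", include_subdomains=True))
```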

Then adjust the maximum number of pages you want to check per audit. The more pages you crawl, the more accurate your audit will be, but it’s also important to pay attention to your own commitment and skill level. What’s the level of your subscription? How often are you going to come back and audit again?

For Pro and Guru users, we’d recommend crawling up to 20,000 pages per audit; for Business users, we’d recommend 100,000 pages per audit. Find what works for you.

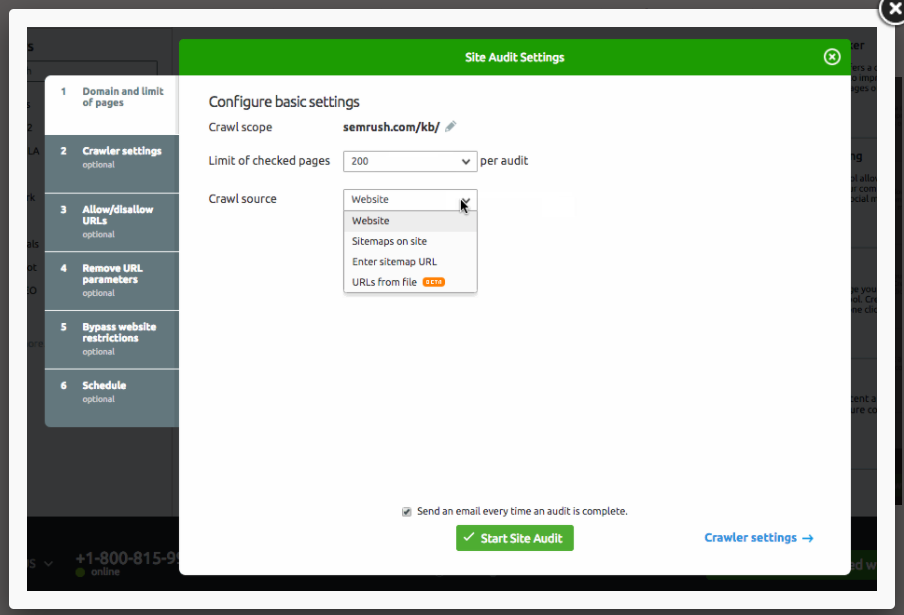

Choose your crawl source. This is what decides how our bot crawls your website and finds the pages to audit.

As shown, there are four options.

1. Website: with this option, we’ll crawl the site like Googlebot (via a breadth-first search algorithm), navigating through your links starting at your homepage. This is a good choice if you’re only interested in crawling the most accessible pages a site has to offer from its homepage.
2. Sitemaps on site: if you choose this option, we’ll only crawl the URLs found in the sitemap listed in the robots.txt file.
3. Enter sitemap URL: this is similar to sitemaps on site, but in this case you can enter your own sitemap URL, making your audit a little more specific.
4. URLs from file: here is where you can get really specific and zero in on exactly which pages you’d like to audit. You just need to have them saved as .csv or .txt files on your computer, ready to upload directly to Semrush. This option is great when you don’t need a general overview, for example, when you’ve made specific changes to specific pages and just want to see how they are performing. This can save you some crawl budget and get you the information you really want to see.
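The sitemap and file-based options both come down to reading a list of URLs instead of discovering them through links. Here is a minimal sketch of both. It assumes a standard `<urlset>` XML sitemap (sitemap index files are not handled) and a file with one URL per line or URLs in the first CSV column. The function names are hypothetical:

```python
import csv
import xml.etree.ElementTree as ET
from typing import Iterable

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return the <loc> values of a standard XML sitemap (<urlset> format)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def urls_from_file(lines: Iterable[str]) -> list[str]:
    """Read one URL per line (.txt) or from the first column (.csv), skipping headers."""
    return [row[0].strip() for row in csv.reader(lines)
            if row and row[0].strip().startswith(("http://", "https://"))]


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/</loc></url>
</urlset>"""

print(urls_from_sitemap(SAMPLE_SITEMAP))
print(urls_from_file(["url", "https://www.example.com/pricing,updated", "https://www.example.com/blog/"]))
```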

Step 2: Crawler Settings

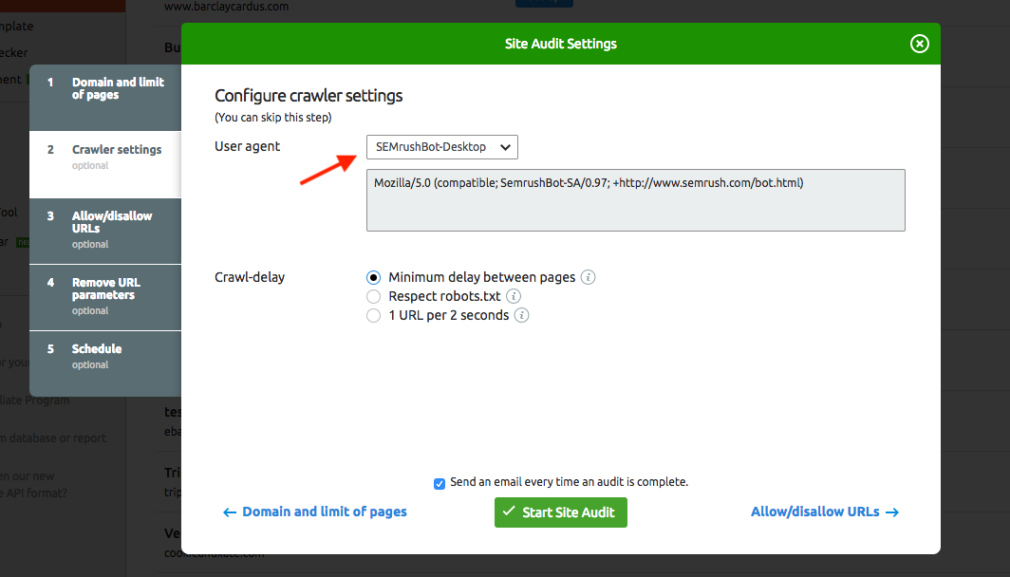

Next, you have to decide which kind of bot you want crawling your site. There are four possible combinations, depending on whether you choose the mobile or desktop version of the SemrushBot or Googlebot.

Then choose your Crawl-Delay settings. Decide between Minimum delay between pages, Respect robots.txt, or 1 URL per 2 seconds.
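Here is a rough illustration of how three such delay options could translate into a wait between requests. The mode names are hypothetical labels. The one-second "minimum" value comes from the SemrushBot description in this article, and the `Crawl-delay` value is read with Python’s standard `urllib.robotparser`:

```python
import time
from typing import Callable, Iterable, Optional
from urllib.robotparser import RobotFileParser


def delay_for(mode: str, robots: Optional[RobotFileParser] = None, agent: str = "*") -> float:
    """Seconds to wait between pages for each (hypothetically named) delay mode."""
    if mode == "minimum":
        return 1.0                          # roughly one second, as described for SemrushBot
    if mode == "respect-robots":
        delay = robots.crawl_delay(agent) if robots is not None else None
        return float(delay) if delay is not None else 1.0  # fall back to the minimum
    if mode == "1-url-per-2-seconds":
        return 2.0
    raise ValueError(f"unknown delay mode: {mode}")


def polite_crawl(urls: Iterable[str], delay: float, visit: Callable[[str], None],
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Visit URLs one by one, pausing `delay` seconds between requests."""
    for i, url in enumerate(urls):
        if i:                               # no pause before the very first page
            sleep(delay)
        visit(url)


robots = RobotFileParser()
robots.parse(["User-agent: *", "Crawl-delay: 3"])
print(delay_for("respect-robots", robots))
```

The `sleep` parameter is injectable so the pacing logic can be tested without actually waiting.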

Choose ‘minimum delay’ for the bot to crawl at its usual speed. For the SemrushBot, that means it will leave about one second before starting to crawl the next page. ‘Respect robots.txt’ is ideal when you have a robots.txt file on your site and need a specific crawl delay as a result. If you’re concerned that your website would be slowed down by our crawler, or you don’t have a crawl directive already, then you’ll probably want to choose ‘1 URL per 2 seconds’. This can mean that the audit will take longer, but it won’t worsen the user experience during the audit.

Step 3: Allow/disallow URLs

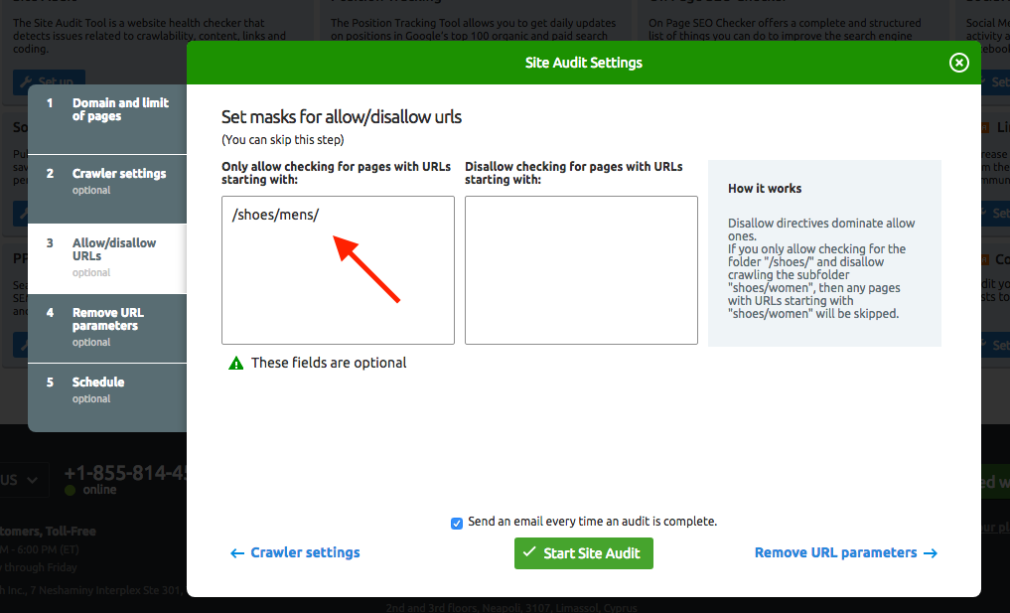

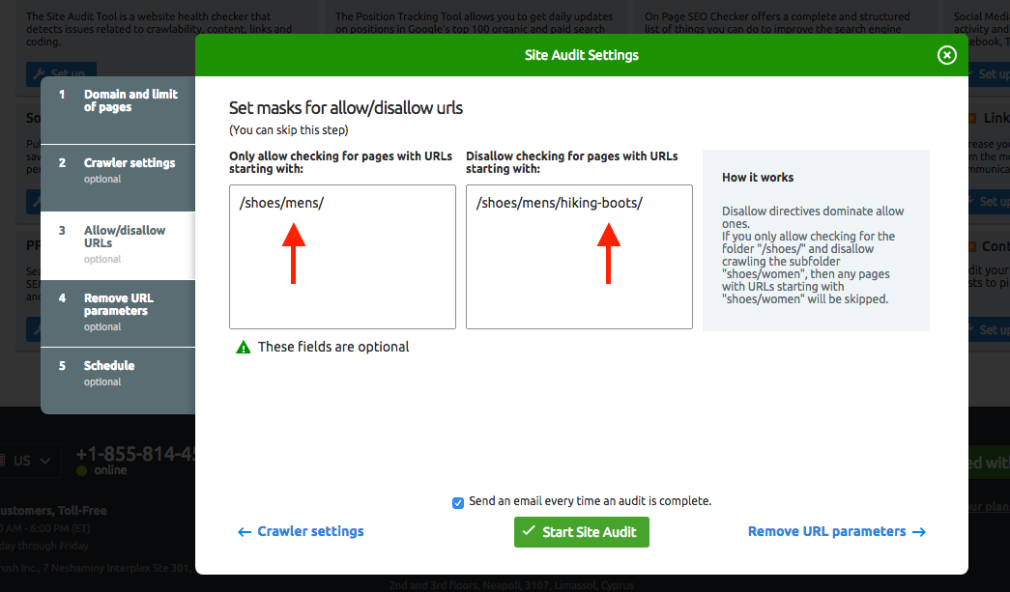

Here is where you can really get into the customization of your audit by deciding which subfolders you definitely want us to crawl and which you definitely don’t want us to crawl.

To do this properly, you need to include everything in the URL after the TLD. The subfolders you definitely want us to crawl go into the box on the left:

And the ones you definitely don’t want to be crawled go into the box on the right:

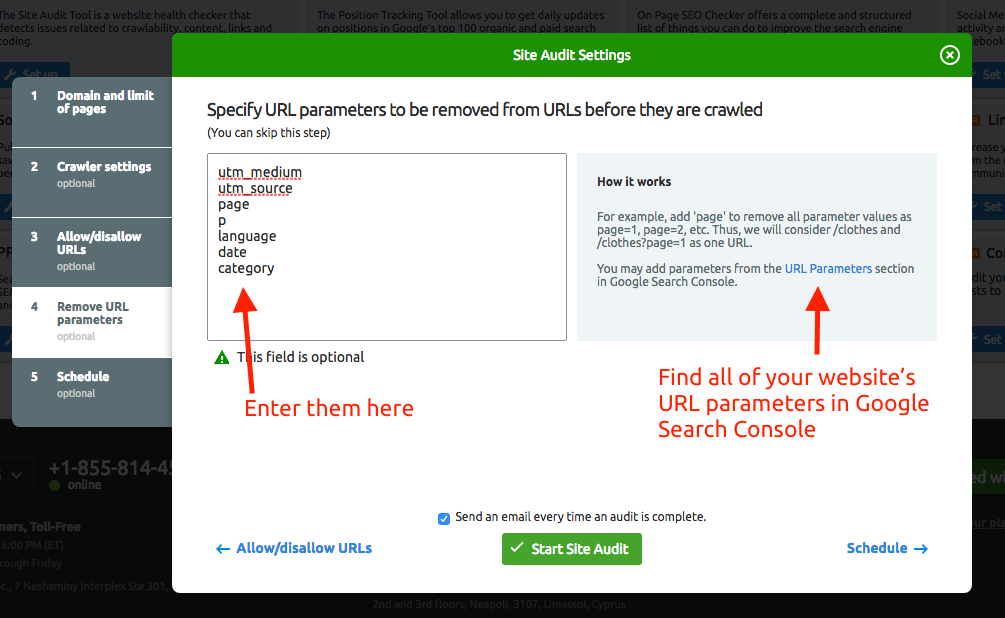
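Here is a minimal sketch of how allow and disallow lists of subfolders might be applied to a URL path (everything after the domain). Two rules are assumptions for illustration, not documented Semrush behavior: a "don't crawl" rule wins over an "allow" rule, and once any allow rules exist, only paths under them are crawled:

```python
from typing import Sequence


def should_crawl(path: str, allow: Sequence[str], disallow: Sequence[str]) -> bool:
    """Decide whether a URL path (everything after the domain) should be crawled."""
    # Assumption: an explicit "don't crawl" rule wins over an "allow" rule.
    if any(path.startswith(prefix) for prefix in disallow):
        return False
    # Assumption: if any allow rules exist, only paths under them are crawled.
    if allow:
        return any(path.startswith(prefix) for prefix in allow)
    return True


allow = ["/blog/"]
disallow = ["/blog/drafts/"]
for path in ("/blog/seo-tips", "/blog/drafts/new-post", "/shop/"):
    print(path, should_crawl(path, allow, disallow))
```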

Step 4: Remove URL Parameters

This step is about helping us make sure that your crawl budget isn’t wasted on crawling the same page twice. Just specify the URL parameters that you use on your site to remove them before crawling.

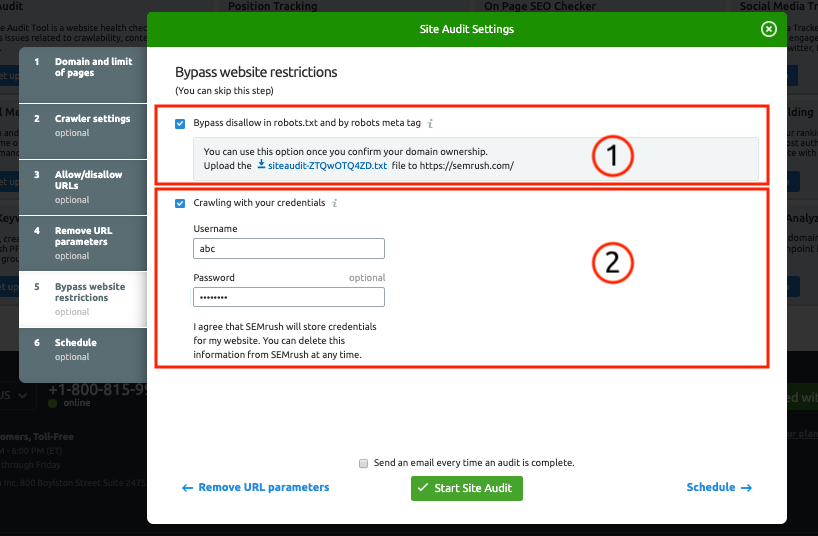
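Removing parameters works by normalizing each URL before it is queued, so variants such as tracking or session links collapse into one page. Here is a minimal sketch using the standard `urllib.parse` module. The parameter names `utm_source` and `sessionid` are only examples; use the ones your site actually adds:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_params(url: str, ignored: set[str]) -> str:
    """Drop the given query parameters so URL variants collapse to one page."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in ignored]
    return urlunsplit(parts._replace(query=urlencode(kept)))


ignored = {"utm_source", "sessionid"}  # example parameters; use the ones your site adds
urls = [
    "https://www.example.com/shoes?utm_source=newsletter",
    "https://www.example.com/shoes?sessionid=42",
    "https://www.example.com/shoes",
]
print({strip_params(u, ignored) for u in urls})  # all three collapse to one URL
```

Parameters that change the content (like a color filter) are kept, so those pages are still crawled separately.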

Step 5: Bypass website restrictions

This is perfect when you need a little workaround. Say, for example, that your website is still in pre-production, or it’s hidden by basic access authentication. If you think this means we can’t run an audit for you, you’d be wrong.

You have two choices for getting around this and making sure your audit is up and running.

- Option 1 is to bypass disallow directives in robots.txt and robots meta tags. This involves uploading the .txt file we’ll provide you with to the main folder of your website.
- Option 2 is to crawl with your credentials. To do that, all you have to do is input the username and password you’d use to access the hidden part of your website. The SemrushBot will use this info to run the audit.

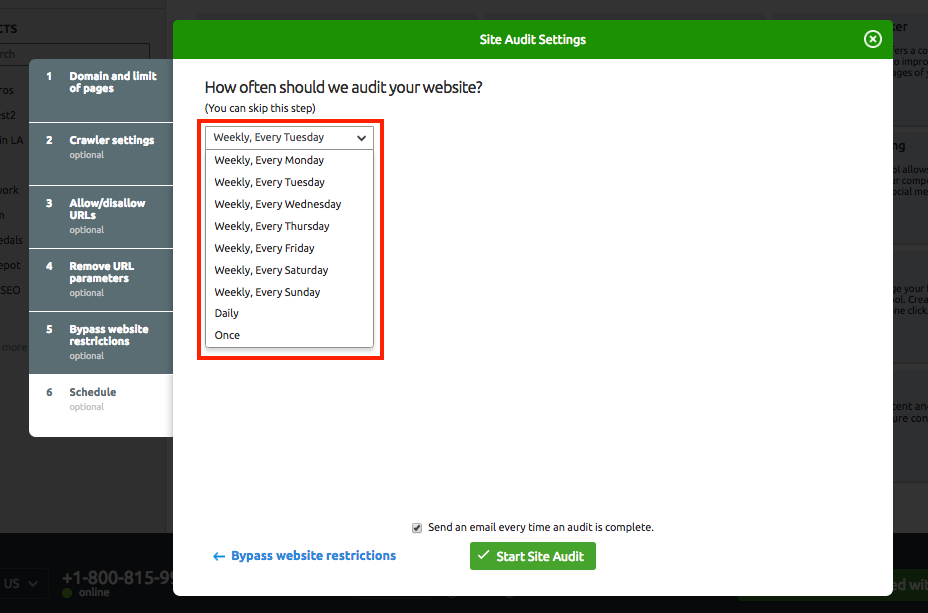
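Basic access authentication works by sending the username and password, base64-encoded, in an `Authorization` header (RFC 7617), which is why a crawler given your credentials can reach a protected staging site. Here is a minimal sketch. The staging URL and credentials are placeholders. Base64 is an encoding, not encryption, so credentials like these should only travel over HTTPS:

```python
import base64
import urllib.request


def basic_auth_header(username: str, password: str) -> dict[str, str]:
    """Build an HTTP Basic Authorization header (RFC 7617)."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Placeholder staging URL and credentials, for illustration only.
request = urllib.request.Request(
    "https://staging.example.com/",
    headers=basic_auth_header("user", "pass"),
)
print(request.get_header("Authorization"))  # Basic dXNlcjpwYXNz
```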

Step 6: Schedule

The final step is to tell us how often you’d like your website to be audited. This could be every week, every day, or just once. Whatever you decide, auditing regularly is definitely advisable to keep up with your site health.

And that’s it! You’ve learned how to crawl a site with the Site Audit tool.

Looking at Your Web Crawler Data with Semrush

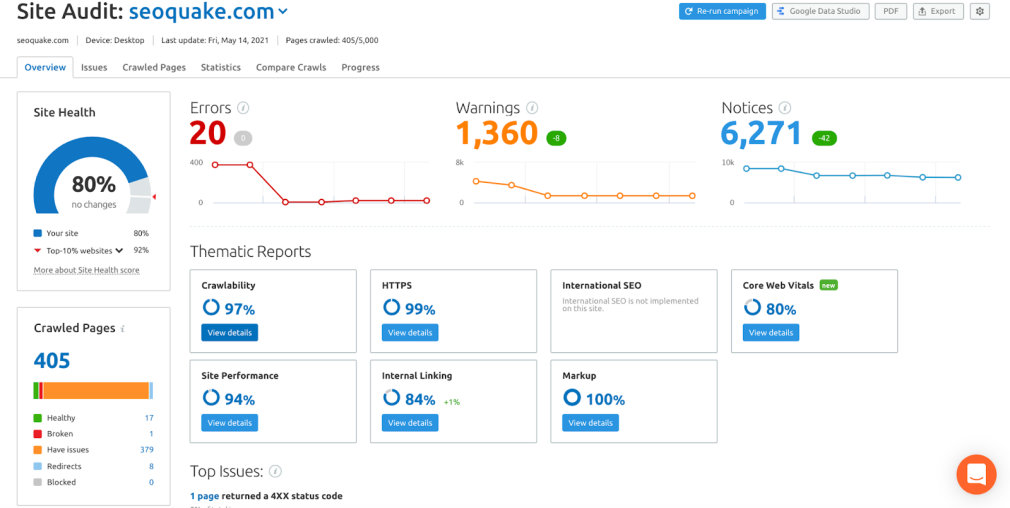

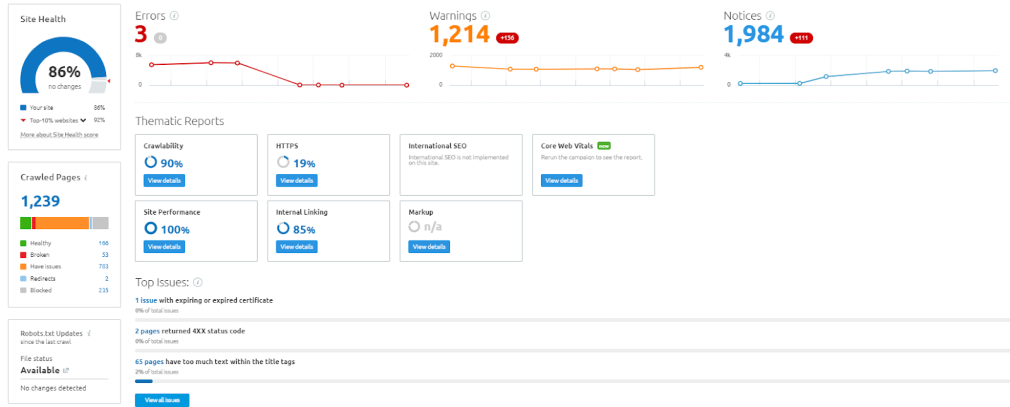

All the data about your web pages collected during the crawls is recorded and saved into the Site Audit section of your project.

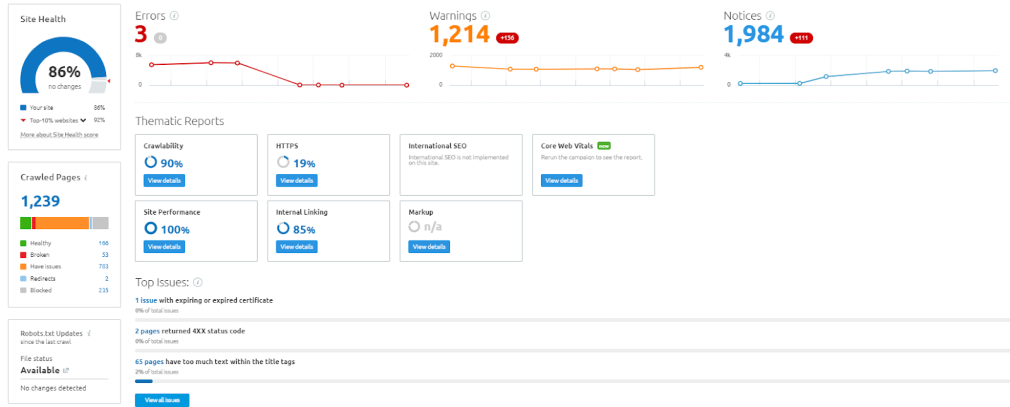

Here, you can find your Site Health score:

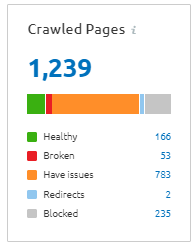

Also check the total number of crawled pages, split into ‘Healthy’, ‘Broken’, and ‘Have Issues’ pages. This view can greatly reduce the time it takes you to identify problems and solve them.

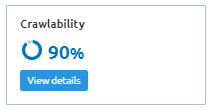
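Here is a sketch of how a page breakdown like this could be computed from crawl results. The rules are assumptions for illustration: "Broken" means a 4xx/5xx status, "Have Issues" means at least one detected issue, and everything else is "Healthy". Semrush’s exact criteria may differ:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PageResult:
    url: str
    status: int                                      # HTTP status code returned during the crawl
    issues: List[str] = field(default_factory=list)  # problems found on the page


def categorize(pages: List[PageResult]) -> Dict[str, int]:
    """Count pages as 'Broken' (4xx/5xx), 'Have Issues' (any issue found) or 'Healthy'."""
    counts = {"Healthy": 0, "Broken": 0, "Have Issues": 0}
    for page in pages:
        if page.status >= 400:
            counts["Broken"] += 1
        elif page.issues:
            counts["Have Issues"] += 1
        else:
            counts["Healthy"] += 1
    return counts


results = [
    PageResult("/", 200),
    PageResult("/old-offer", 404),
    PageResult("/blog", 200, ["missing meta description"]),
]
print(categorize(results))
```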

Finally, you’ll also find our appraisal of how easy it is to crawl your pages:

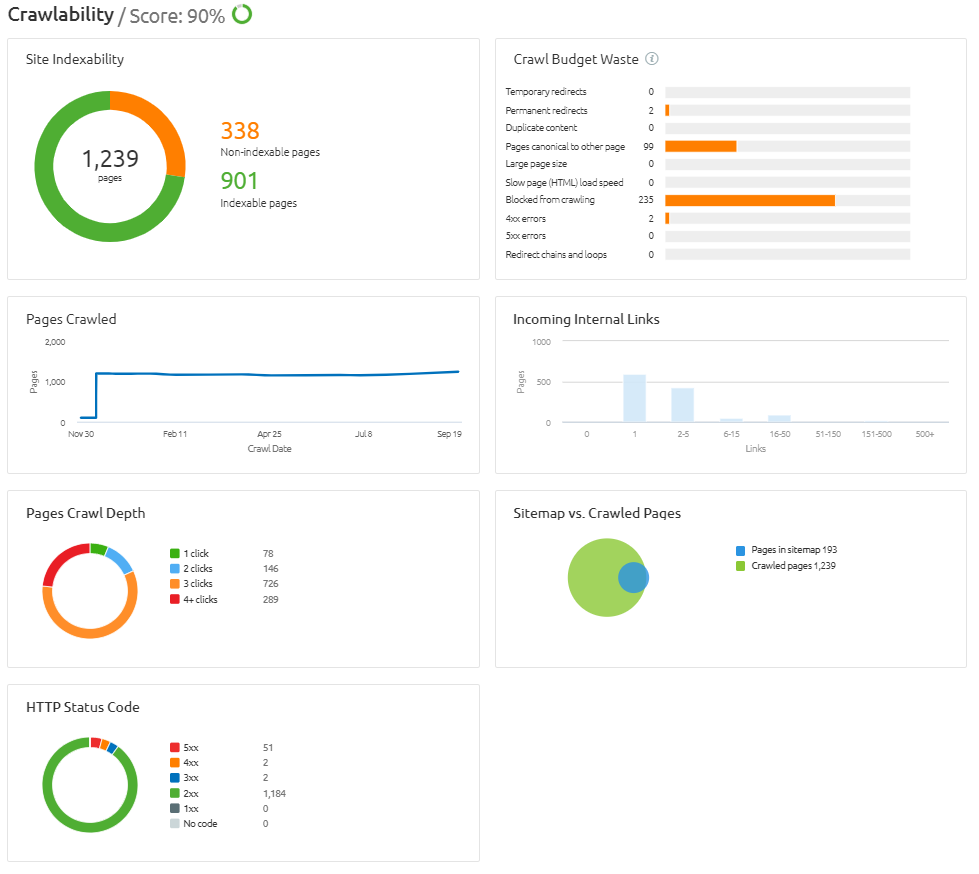

By going into the crawlability section, you’ll get an even closer look at your crawl budget, crawl depth, sitemap vs. crawled pages, indexability, and more.
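Crawl depth is commonly measured as the number of clicks needed to reach a page from the homepage, which a breadth-first traversal of your internal links gives directly. Comparing those pages with your sitemap shows URLs that are listed but never reached through links. Here is a minimal sketch over a made-up link graph. Semrush’s exact definitions may differ:

```python
from collections import deque


def crawl_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: depth = clicks needed to reach a page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:          # first time reached = shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Made-up internal links and sitemap for illustration.
links = {"/": ["/blog"], "/blog": ["/blog/post-1"], "/blog/post-1": []}
sitemap = {"/", "/blog", "/blog/post-1", "/landing-page"}

depths = crawl_depths(links)
not_crawled = sitemap - set(depths)          # in the sitemap, but not reachable via links
print(depths)
print(not_crawled)
```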

And now you know how to set up your web crawler site audit and where to find the data that we can pull together just for you.

Remember: when you improve your crawlability, you help search engines understand your website and its content. Making your website easier for search engines to crawl can help you rank higher and gradually climb the SERPs.

Innovative SEO services

SEO is a patience game; no secret there. We’ll work with you to develop a search strategy focused on increasing traffic and rankings in as little as three months.

Proven, all-inclusive SEO services for measuring, executing, and optimizing for search engine success. We say what we do and do what we say.

As a Semrush Agency Partner, our company has designed a search engine optimization service that is both ethical and results-driven. We use the latest tools, strategies, and trends to help you move up in the search engines for the right keywords and get noticed by the right audience.

Schedule a discovery call with us today to discuss your company’s needs.

Source: