Tell me, what’s the first thing that comes to your mind when you think about ranking a website?

Content? Or maybe backlinks?

I admit, both are crucial factors for positioning a website in search results. But they’re not the only ones.

In fact, two other factors play a significant role in SEO: crawlability and indexability. Yet many website owners have never heard of them.

At the same time, even small problems with indexability or crawlability could result in your site losing its rankings, regardless of how great your content is or how many backlinks you have.

What are crawlability and indexability?

To understand these terms, let’s start by taking a look at how search engines discover and index pages. To learn about any new (or updated) page, they use what’s known as web crawlers, bots whose aim is to follow links on the web with a single goal in mind:

To find and index new web content.

As Google explains:

“Crawlers look at webpages and follow links on those pages, much like you would if you were browsing content on the web. They go from link to link and bring data about those webpages back to Google’s servers.”
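To make the link-following idea concrete, here is a minimal sketch of a breadth-first crawler in Python. It is a simplification, not how Googlebot actually works: the site is a hypothetical in-memory dictionary (`SITE`) standing in for real HTTP requests, and the names `crawl` and `LinkExtractor` are illustrative. It only follows `<a href>` links and ignores robots.txt, JavaScript rendering, and scheduling.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags, i.e. the links a crawler follows."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch):
    """Breadth-first crawl: fetch a page, extract its links, queue the unseen ones.

    `fetch` is any callable that returns a page's HTML, or None if it is unavailable.
    """
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # dead end: nothing to parse, nothing to follow
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link, _fragment = urldefrag(urljoin(url, href))  # resolve relative URLs, drop #anchors
            if link.startswith(start_url) and link not in seen:  # stay on this site
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical site: "/hidden" exists, but no page links to it
SITE = {
    "https://example.com/": '<a href="/blog/">Blog</a> <a href="/about">About</a>',
    "https://example.com/blog/": '<a href="post-1">Post 1</a>',
    "https://example.com/blog/post-1": '<a href="/">Home</a>',
    "https://example.com/about": "<p>No links here.</p>",
    "https://example.com/hidden": "<p>Nothing links to this page.</p>",
}

print(crawl("https://example.com/", SITE.get))
```

Note that `/hidden` never shows up in the result: no page links to it, so a crawler relying on links alone cannot find it.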

Matt Cutts, formerly of Google, has also published a video explaining the process in detail.

In short, both terms describe how well a search engine can access the pages on a website and add them to its index.

Crawlability describes the search engine’s ability to access and crawl content on a page.

If a site has no crawlability issues, then web crawlers can access all its content easily by following links between pages.

However, broken links or dead ends might result in crawlability issues – the search engine’s inability to access specific content on a site.
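One way to spot these dead ends is to compare every internal link against the HTTP status code its target returned. The sketch below assumes you already have both pieces of data (for instance, from a crawl); the `links` and `status` dictionaries and the `find_broken_links` helper are hypothetical.

```python
def find_broken_links(links, status):
    """Return (source, target, code) for links whose target is missing or returns an error.

    links:  dict mapping each page to the list of pages it links to
    status: dict mapping each page to the HTTP status code it returned
    """
    broken = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)  # None means the page was never fetched
            if code is None or code >= 400:
                broken.append((source, target, code))
    return broken


# Hypothetical site data
links = {
    "/": ["/blog/", "/pricing"],
    "/blog/": ["/blog/old-post", "/"],
}
status = {"/": 200, "/blog/": 200, "/pricing": 200, "/blog/old-post": 404}

print(find_broken_links(links, status))  # [('/blog/', '/blog/old-post', 404)]
```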

Indexability, on the other hand, refers to the search engine’s ability to analyze and add a page to its index.

Even if Google can crawl a site, it may not be able to index all of its pages. This is typically due to indexability issues: for example, a page carrying a noindex directive can be crawled but won’t be added to the index.

What affects crawlability and indexability?

1. Site Structure

The informational structure of the website plays a crucial role in its crawlability.

For example, if your site features pages that aren’t linked to from anywhere else, web crawlers may have difficulty accessing them.

Of course, they could still find those pages through external links, provided that someone references them in their content. But on the whole, a weak structure can cause crawlability issues.
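Pages that nothing links to are often called orphan pages. If you have a full list of your URLs (say, from your CMS or sitemap) and the internal links between them, a rough check is to look for pages that never appear as a link target. A sketch, assuming hypothetical `all_pages` and `links` inputs:

```python
def find_orphan_pages(all_pages, links, homepage="/"):
    """Return pages (other than the homepage) that no internal link points to."""
    linked_to = {target for targets in links.values() for target in targets}
    return sorted(page for page in all_pages if page != homepage and page not in linked_to)


# Hypothetical site: the landing page exists but is never linked internally
all_pages = ["/", "/blog/", "/blog/post-1", "/landing/spring-sale"]
links = {
    "/": ["/blog/"],
    "/blog/": ["/blog/post-1", "/"],
}

print(find_orphan_pages(all_pages, links))  # ['/landing/spring-sale']
```

This only catches pages with zero inbound internal links. A page linked only from another orphan is also unreachable, which a full crawl starting from the homepage would reveal.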

2. Internal Link Structure

A web crawler travels through the web by following links, just like you would on any website. Therefore, it can only find pages that are linked to from other content.

A good internal link structure allows it to quickly reach even pages deep in your site’s structure. A poor structure, however, might send it to a dead end, causing the crawler to miss some of your content.
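One way to measure how deep a page sits is its click depth: the smallest number of links a crawler must follow from the homepage to reach it. A breadth-first search computes it. The link graph below is hypothetical; it shows how a single extra internal link (here, from the homepage) can pull an old post much closer to the surface.

```python
from collections import deque


def click_depth(links, start="/"):
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # the first visit in BFS is along a shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical blog where an old post is reachable only through pagination
links = {
    "/": ["/blog/"],
    "/blog/": ["/blog/page/2"],
    "/blog/page/2": ["/blog/page/3"],
    "/blog/page/3": ["/blog/old-post"],
}
print(click_depth(links)["/blog/old-post"])  # 4

# Add a link from the homepage (e.g. a "popular posts" block)
links["/"] = ["/blog/", "/blog/old-post"]
print(click_depth(links)["/blog/old-post"])  # 1
```

Pages missing from the returned dictionary are not reachable from the homepage at all.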

3. Looped Redirects

Broken or looped redirects (for example, page A redirecting to page B, which redirects back to page A) stop a web crawler in its tracks, resulting in crawlability issues.
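Redirect loops are easy to detect once you have a map of which URL redirects where: follow the chain and stop as soon as a URL repeats. A minimal sketch, assuming a hypothetical `redirects` dictionary (source URL to target URL) collected from your server configuration or a crawl:

```python
def trace_redirects(start, redirects):
    """Follow redirects from `start`; return ("ok", chain) or ("loop", chain)."""
    chain = [start]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        if next_url in chain:
            return "loop", chain + [next_url]  # a crawler would go around in circles
        chain.append(next_url)
    return "ok", chain


# Hypothetical redirect map
redirects = {
    "/old-page": "/new-page",
    "/a": "/b",
    "/b": "/a",
}
print(trace_redirects("/old-page", redirects))  # ('ok', ['/old-page', '/new-page'])
print(trace_redirects("/a", redirects))         # ('loop', ['/a', '/b', '/a'])
```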

4. Server Errors

Similarly, server errors (such as 5xx responses), broken server-side redirects, and other server-related problems may prevent web crawlers from accessing all of your content.

5. Unsupported Scripts and Other Technology Factors

Crawlability issues may also arise as a result of the technology you use on the site. For example, since crawlers can’t follow forms, gating content behind a form will result in crawlability issues.

Content loaded through JavaScript or Ajax may be hidden from web crawlers as well.

6. Blocking Web Crawler Access

Finally, you can deliberately block web crawlers from crawling or indexing pages on your site.

And there are some good reasons for doing this.

For example, you may have created a page you want to restrict public access to. And as part of preventing that access, you should also block it from the search engines.

However, it’s easy to block other pages by mistake too. A simple error in the code, for example, could block an entire section of the site.
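A typical example is the robots.txt file. The sketch below uses Python’s standard `urllib.robotparser` module to show how a small slip, `Disallow: /` instead of `Disallow: /admin/`, blocks the whole site rather than one section. The rules, URLs, and the `is_allowed` helper are hypothetical. Python’s parser implements the basic robots.txt rules, so it may not match Googlebot on every extension (such as wildcards in paths). Also keep in mind that robots.txt controls crawling; to keep a page out of Google’s index, Google recommends a `noindex` directive instead.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Check whether `user_agent` may crawl `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# What was intended: keep crawlers out of the admin area only
intended = """
User-agent: *
Disallow: /admin/
"""

# The mistake: "/" matches every path, so the entire site is blocked
mistake = """
User-agent: *
Disallow: /
"""

for url in ["https://example.com/blog/post", "https://example.com/admin/settings"]:
    print(url, is_allowed(intended, url), is_allowed(mistake, url))
```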

You can find the full list of crawlability issues in this article: 18 Reasons Your Website is Crawler-Unfriendly: Guide to Crawlability Issues.

How to make a website easier to crawl and index?

I’ve already listed some of the factors that could result in your site experiencing crawlability or indexability issues. And so, as a first step, you should ensure they don’t happen.

But there are also other things you can do to make sure web crawlers can easily access and index your pages.

1. Submit Sitemap to Google

A sitemap is a small file, usually residing in the root folder of your domain, that contains direct links to the pages on your site. You submit it to Google through Google Search Console.

The sitemap will tell Google about your content and alert it to any updates you’ve made to it.
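Sitemaps usually follow the XML format defined by the sitemaps.org protocol: a `<urlset>` element containing one `<url>` entry per page, each with a `<loc>` and an optional `<lastmod>` date. Most CMSs and SEO plugins generate this file for you; the sketch below only illustrates the structure, using Python’s standard `xml.etree.ElementTree`. The `build_sitemap` helper and the page list are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as "&"
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages
pages = [
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/post-1", None),
]
print(build_sitemap(pages))
```

You would save the output as a file such as sitemap.xml in your site’s root folder and then submit its URL in Google Search Console.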

2. Strengthen Internal Links

We’ve already talked about how interlinking affects crawlability. And so, to increase the chances of Google’s crawler finding all the content on your site, improve links between pages to ensure that all content is connected.

3. Regularly update and add new content

Content is the most important part of your site. It helps you attract visitors, introduce your business to them, and convert them into clients.

But content also helps you improve your site’s crawlability. For one, web crawlers visit sites that regularly update their content more often, which means they’ll crawl and index your new pages more quickly.

4. Avoid duplicating any content

Duplicate content (pages that feature the same or very similar content) can result in lost rankings.

But duplicate content can also decrease the frequency with which crawlers visit your site.

So, inspect and fix any duplicate content issues on the site.
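A quick first pass for exact duplicates is to normalize each page’s text and compare hashes. This sketch, with hypothetical page data and helper names, only catches pages whose text is identical after collapsing whitespace and case; spotting near-duplicates (the "very similar" content mentioned above) needs fuzzier techniques such as shingling or SimHash.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages):
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: a filter parameter produces a copy of the same listing
pages = {
    "/shoes": "Red running shoes. Free shipping.",
    "/shoes?color=red": "Red running  shoes.\nFree shipping.",
    "/about": "About our store.",
}
print(find_exact_duplicates(pages))  # [['/shoes', '/shoes?color=red']]
```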

5. Speed up your page load time

Web crawlers have only a limited amount of time and resources they can spend crawling your site. This is known as the crawl budget, and once it’s used up, the crawler leaves your site.

So, the quicker your pages load, the more of them a crawler will be able to visit before it runs out of budget.
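The arithmetic behind this is simple if you picture the budget as a fixed amount of crawl time. That is a deliberately simplified model (Google describes crawl budget in terms of crawl capacity and crawl demand rather than a fixed timer), and the numbers and the `pages_per_budget` helper below are hypothetical:

```python
def pages_per_budget(budget_ms, ms_per_page):
    """Toy model: how many pages fit into a fixed crawl-time budget."""
    return budget_ms // ms_per_page  # integer milliseconds avoid float rounding surprises


budget_ms = 60_000  # hypothetical one-minute budget
print(pages_per_budget(budget_ms, 2_000))  # 30 pages at 2 s per page
print(pages_per_budget(budget_ms, 500))    # 120 pages at 0.5 s per page
```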

Tools for managing crawlability and indexability

If all of the above sounds intimidating, don’t worry. There are tools that can help you to identify and fix your crawlability and indexability issues.

Log File Analyzer

Log File Analyzer will show you how Google’s desktop and mobile bots crawl your site, whether there are any errors to fix, and where you can save crawl budget. All you have to do is upload your website’s access.log file and let the tool do its job.

An access log is a list of all requests that people or bots have sent to your site; the analysis of a log file allows you to track and understand the behavior of crawl bots.
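To get a feel for what such a tool does, here is a minimal sketch that reads access log lines in the "combined" format (common in many Apache and Nginx setups), keeps only requests whose user agent mentions Googlebot, and tallies their status codes. The log lines and the `summarize_googlebot` helper are hypothetical; real log formats vary, and because anyone can fake a user agent string, Google recommends verifying Googlebot with a reverse DNS lookup before trusting these numbers.

```python
import re
from collections import Counter

# Combined log format: IP, identity, user, [time], "request", status, size, "referer", "user agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot requests per status code and list the paths that returned errors."""
    statuses = Counter()
    errors = []
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip malformed lines and non-Googlebot traffic
        status = int(match.group("status"))
        statuses[status] += 1
        if status >= 400:
            errors.append((status, match.group("path")))
    return statuses, errors


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'192.0.2.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 310 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:13:56:02 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

statuses, errors = summarize_googlebot(sample)
print(dict(statuses))  # {200: 1, 404: 1}
print(errors)          # [(404, '/old-page')]
```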

Read our manual on Where to Find the Access Log File.

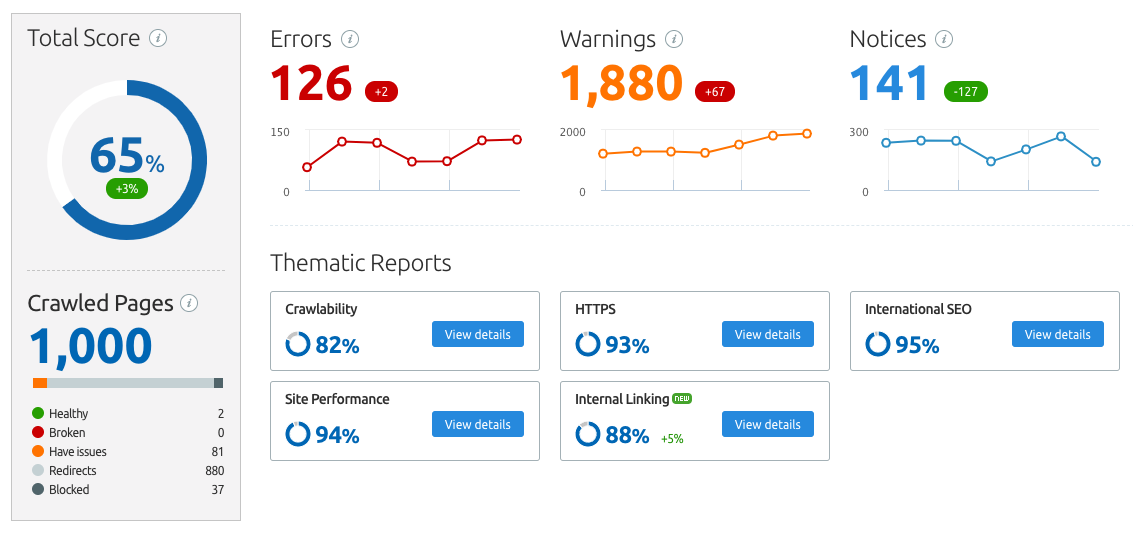

Site Audit

Site Audit is a part of the SEMrush suite that checks the health of your website. Scan your site for various errors and issues, including the ones that affect a website’s crawlability and indexability.

Google tools

Google Search Console helps you monitor and maintain your site in Google. It's a place for submitting your sitemap, and it shows the web crawlers' coverage of your site.

Google PageSpeed Insights allows you to quickly check a website's page loading speed.

Conclusion

Most webmasters know that to rank a website, they at least need strong and relevant content and backlinks that increase their websites’ authority.

What they don’t know is that their efforts are in vain if search engines’ crawlers can’t crawl and index their sites.

That’s why, apart from focusing on adding and optimizing pages for relevant keywords, and building links, you should constantly monitor whether web crawlers can access your site and report what they find to the search engine.

Innovative SEO services

SEO is a patience game; no secret there. We’ll work with you to develop a search strategy focused on increasing traffic and rankings in as little as 3 months.

A proven, all-inclusive SEO service for measuring, executing, and optimizing for search engine success. We say what we do and do what we say.

Our company, as a Semrush Agency Partner, has designed a search engine optimization service that is both ethical and results-driven. We use the latest tools, strategies, and trends to help you move up in the search engines for the right keywords and get noticed by the right audience.

Schedule a discovery call with us today to discuss your company’s needs.
