FACT: Email is an amazing channel for attracting, winning and retaining customers, not to mention generating repeat business.

But there is one problem: it is really hard to get emails right the first time.

What you consider an engaging subject line, recipients see as a dud. A call to action you believed would compel them to click spurs no action whatsoever.

That is why it is important to A/B test your emails to find new techniques or elements that improve conversions.

Unfortunately, many companies launch split tests and simply hope for the best. They disregard the rules of A/B testing and commit major mistakes that render their efforts useless.

So, if you have been split testing emails but see no viable results, keep on reading. I am going to show you the most common A/B testing mistakes sabotaging your efforts.

Before we begin though…

Why You Should Always Start by Testing Concepts, Not Elements

As it turns out, before you even begin testing various email elements, you should identify the general strategy your audience responds to best.

In other words, before you start fine-tuning the template, testing subject lines or modifying the call to action, you should first test two different marketing strategies against each other.

For instance, you could test two different ways to convert recipients: via an email form or via a social media login. Or you could compare sending people to a landing page with allowing them to purchase the product directly from the email.

And only when you have identified the winning strategy should you start testing individual elements to improve conversions.

However, when you do, make sure you don’t commit any of the mistakes below:

Mistake #1. Testing More Than One Element at Once

This is by far the most common mistake of them all.

You have so many ideas on how to improve email conversions. But the last thing you want is to spend weeks testing every one of them in turn. And so, to speed things up a little, you decide to test them all at once.

You send different template variations under various sender names, using different subject lines, and including different copy in each test.

This results in so many email variations that, in the end, you can’t even tell if any of your ideas worked.

Time-consuming as it may be, you should always test only one element at a time.

Mistake #2. Checking Results Too Early

Since the majority of email platforms start delivering campaign results within 2 hours after sending, it is tempting to start analyzing a test’s performance right away, right?

However, by doing so, you miss out on some important data.

For one, users have different reading habits. Some open the email right away, flick through it and either act on it or forget about it. Others put important messages aside to check later and, as a result, might come back to your email a couple of days later.

And so, by analyzing results too early, you might miss important traffic and usage patterns, affecting the actual test results.

From personal experience, I can attest that the best time to start going through test results is about 2 weeks after launching the campaign.

Mistake #3. Ignoring Statistical Significance

80% of your test results are worthless. It is no different for almost anyone else split testing their emails.

And so, the challenge is to draw conclusions based only on the remaining 20%.

One way to achieve this is by identifying statistically significant results and weeding out those caused by pure chance.

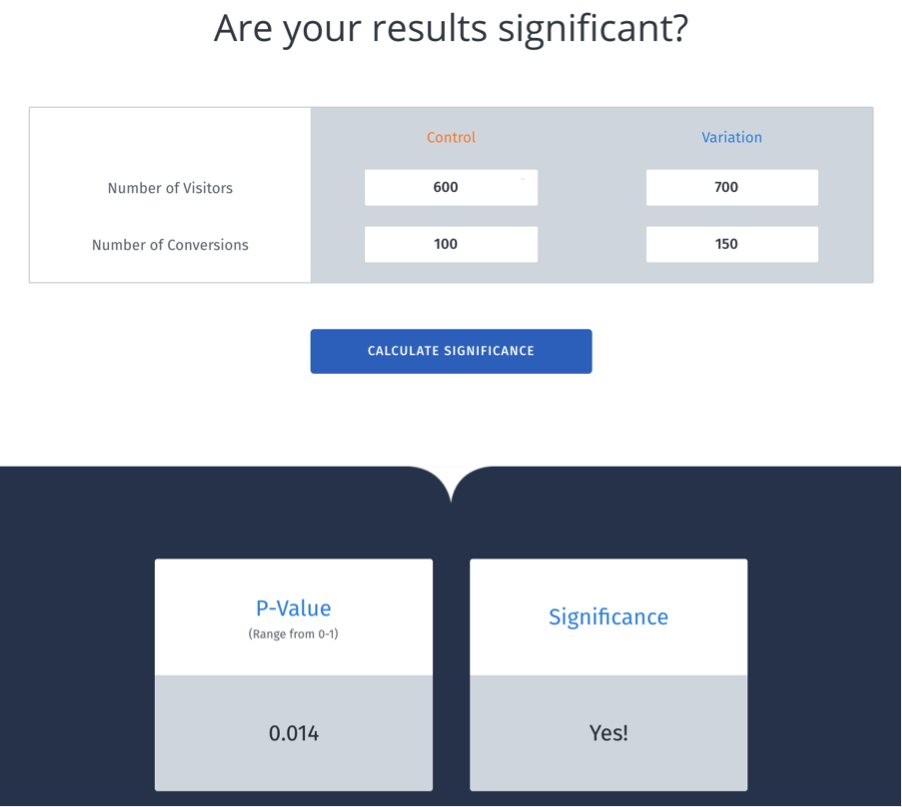

The easiest way to check is to use a statistical significance calculator. Personally, I use the one developed by Visual Website Optimizer, but you could use just about any similar app out there.
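If you are curious what such a calculator does under the hood, here is a minimal sketch in Python of a two-proportion z-test, one common way to compare two open or click rates. It is not the method of any particular tool; the function name and the counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both variations perform equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 15% vs 16.5% open rates at two different list sizes.
z_small, p_small = two_proportion_z_test(150, 1_000, 165, 1_000)
z_large, p_large = two_proportion_z_test(1_500, 10_000, 1_650, 10_000)
print(f"1,000 per variation:  p = {p_small:.3f}")
print(f"10,000 per variation: p = {p_large:.3f}")
```

With these made-up numbers, the same difference in open rate is well above the usual 0.05 threshold at 1,000 recipients per variation and below it at 10,000. In other words, an apparent winner on a small list may be nothing more than chance.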

Mistake #4. Using Too Small a Sample Size

The number of recipients you include in the test affects the outcome. The smaller the change you want to detect, the larger the sample size you need.

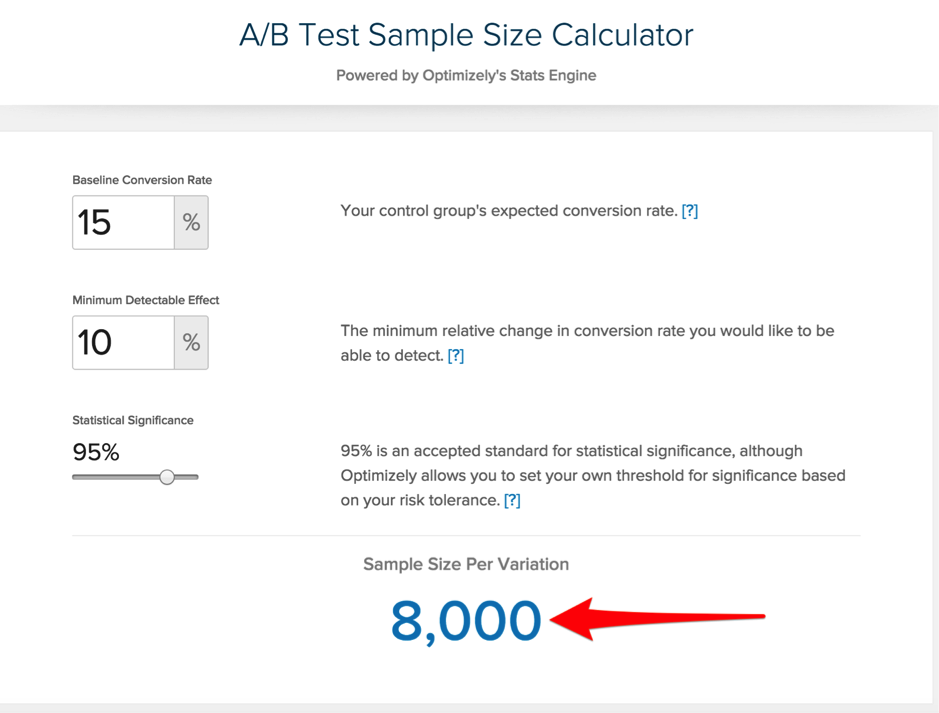

For instance, let’s assume that you developed a hypothesis stating that using emojis in the subject line should help increase the existing 15% open rate by 10%. To conduct such an analysis, you need to test this hypothesis on 8,000 people.

A smaller sample is unlikely to deliver statistically significant results.

So, before you launch the test, calculate the sample size you need to get viable results. To do so, use Optimizely’s sample size calculator.
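To get a feel for how the required sample size moves with the size of the change, here is a rough Python sketch based on the standard normal-approximation formula for comparing two proportions. It assumes the lift is relative (15% rising to 16.5%), a 5% significance level and 80% power; those defaults and the function name are my assumptions, not settings taken from any calculator.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline, relative_lift, alpha=0.05, power=0.80):
    """Approximate recipients needed in EACH variation of a two-way test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    # Critical values for a two-sided test and for the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


n_10 = sample_size_per_variation(0.15, 0.10)  # 15% baseline, 10% relative lift
n_5 = sample_size_per_variation(0.15, 0.05)   # same baseline, 5% relative lift
print(f"10% lift: {n_10:,} per variation")
print(f" 5% lift: {n_5:,} per variation")
```

Do not expect the output to match a given online calculator exactly, since tools use different statistical methods and defaults. The pattern is what matters: halving the lift you want to detect roughly quadruples the number of recipients you need.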

Mistake #5. Failing to Develop a Proper Hypothesis

A hypothesis is a proposed statement made on the basis of limited evidence that can be proved or disproved and is used as a starting point for further investigation. I am sure you have heard this definition already.

However, in email split testing, a hypothesis must have one other characteristic:

It must be applicable to different campaigns.

So, for instance, a statement such as “emails with animated pictures generate a higher CTR” would work as a hypothesis. Once proven right, it could be applied to many different campaigns.

On the other hand, assuming that a particular subject line will fare better than another would not work. It applies to a specific campaign only and cannot scale to your other email efforts.

Not understanding this characteristic leads to running tests that, de facto, have no hypothesis at all or, in the best case, rely on a weak hypothesis to try and improve conversions.

To avoid making this mistake, use industry-approved hypotheses. Jordie van Rijn collected 150 of them in this post.

Mistake #6. Not Testing Segments

We all know that different audience segments might respond to your message in their own unique way. And thus, a hypothesis improving conversions in one segment might deliver no results in another.

Just take cultural differences as an example. Spanish recipients might have no problem with a high frequency of emails. However, emailing a couple of times a week might prompt subscribers from other countries to abandon your list.

And so, segment your tests to analyze different user behaviors.
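As a small illustration, results can be broken down per segment and variation before you compare them. The Python sketch below assumes each record is a (segment, variation, opened) tuple; that layout, the function name and the records themselves are invented for the example.

```python
from collections import defaultdict


def open_rates_by_segment(records):
    """Return {(segment, variation): open rate} from (segment, variation, opened) tuples."""
    sent = defaultdict(int)
    opened = defaultdict(int)
    for segment, variation, was_opened in records:
        key = (segment, variation)
        sent[key] += 1
        opened[key] += int(was_opened)
    return {key: opened[key] / sent[key] for key in sent}


# Made-up records, purely for illustration.
records = [
    ("ES", "A", True), ("ES", "A", False), ("ES", "B", True), ("ES", "B", True),
    ("UK", "A", True), ("UK", "A", True), ("UK", "B", False), ("UK", "B", True),
]
rates = open_rates_by_segment(records)
for (segment, variation), rate in sorted(rates.items()):
    print(f"{segment} / {variation}: {rate:.0%}")
```

Keep in mind that every segment then needs enough recipients of its own for the comparison to mean anything; a handful of records like the ones above proves nothing.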

Mistake #7. Sending Each Variation at a Different Time

To receive viable results, you should analyze no more than one variable at a time.

And yet, I see many companies unknowingly adding another factor to the mix: time. How? By sending each variation at a different time.

With this method, half of the subscribers might receive one variation at a time when they are not busy and are thus more likely to open marketing messages. The other half, however, might get theirs in the middle of a busy day, with many ignoring or even overlooking it.

As a result, the data gets skewed by different recipient behavior, depending on the time at which they received the email.

So, to guarantee the validity of the test, always send both variations at the same time to ensure that no other factor interferes with the test.
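Sending at the same time only helps if the two groups are comparable to begin with, which is why recipients are usually assigned to variations at random. Here is a minimal Python sketch of such a split; the function name and the example.com addresses are placeholders, and how you then schedule both sends for the same moment depends on your email platform.

```python
import random


def split_recipients(recipients, seed=None):
    """Shuffle the list and cut it in half so each variation gets a random group."""
    shuffled = list(recipients)  # copy, so the original list is left untouched
    random.Random(seed).shuffle(shuffled)
    middle = len(shuffled) // 2
    return shuffled[:middle], shuffled[middle:]


# Placeholder addresses for illustration only.
recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_recipients(recipients, seed=42)
print(len(group_a), "recipients get variation A")
print(len(group_b), "recipients get variation B")
```

Passing a fixed seed makes the split reproducible, which is handy if you need to check later who received which variation.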

What About You?

Are there any other email testing mistakes you have made?

