You have more control over the search engines than you think.

It is true; you can manipulate who crawls and indexes your site – even down to individual pages. To control this, you will need to utilize a robots.txt file. Robots.txt is a simple text file that resides within the root directory of your website. It informs the robots that are dispatched by search engines which pages to crawl and which to overlook.

While not exactly the be-all-and-end-all, you have probably figured out that it is quite a powerful tool and will allow you to present your website to Google in a way that you want them to see it. Search engines are harsh judges of character, so it is essential to make a great impression. Robots.txt, when utilized correctly, can improve crawl frequency, which can impact your SEO efforts.

So, how do you create one? How do you use it? What things should you avoid? Check out this post to find the answers to all these questions.

What Is a Robots.txt File?

Back when the internet was just a baby-faced kid with the potential to do great things, developers devised a way to crawl and index fresh pages on the web. They called these ‘robots’ or ‘spiders’.

Occasionally these little fellas would wander off onto websites that weren’t intended to be crawled and indexed, such as sites undergoing maintenance. The creator of the world’s first search engine, Aliweb, recommended a solution – a road map of sorts, which each robot must follow.

This roadmap was finalized in June of 1994 by a collection of internet-savvy techies, as the “Robots Exclusion Protocol”.

A robots.txt file is the implementation of this protocol. The protocol sets out the guidelines that every legitimate robot must follow, including Google’s bots. Illegitimate robots, such as malware, spyware, and the like, by definition operate outside these rules.

You can take a peek behind the curtain of any website by typing in the site’s root URL and adding /robots.txt at the end.
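As a rough sketch of where that file lives, the Python snippet below works out the robots.txt address for whatever page you happen to be looking at. The example.com addresses and the `robots_url` helper name are placeholders we made up for illustration.

```python
from urllib.parse import urljoin


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    # The leading slash makes urljoin drop the page's own path and query,
    # so the result always points at the root of the same host.
    return urljoin(page_url, "/robots.txt")


print(robots_url("https://www.example.com/blog/some-post?ref=home"))
```

Whichever page you start from, the file is always looked up at the top level of the host, never inside a subfolder.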

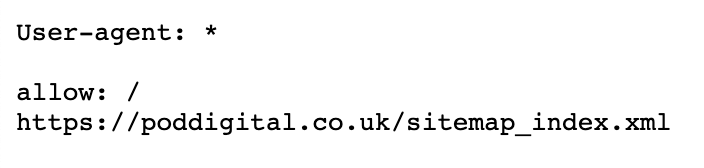

For example, here’s POD Digital’s version:

As you can see, it is not necessary to have an all-singing, all-dancing file as we are a relatively small website.

Where to Locate the Robots.txt File

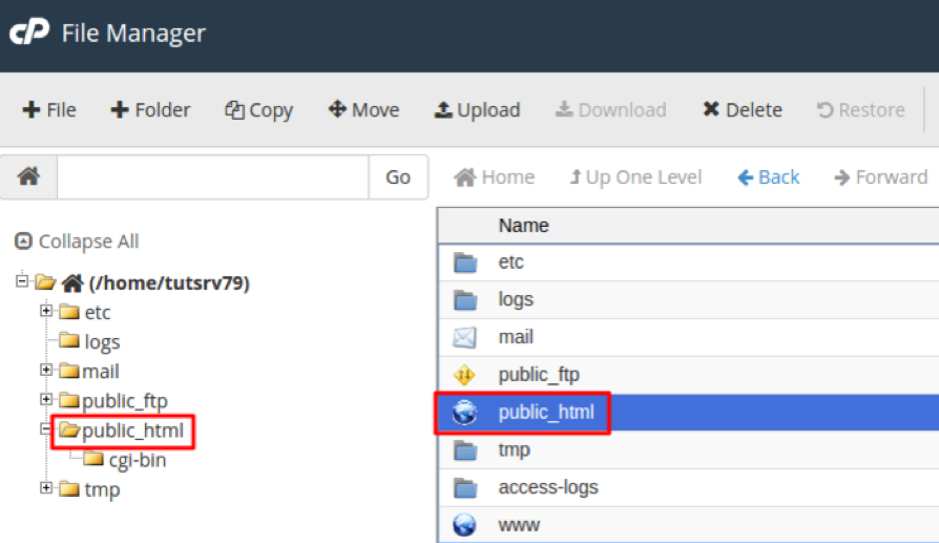

Your robots.txt file will be stored in the root directory of your site. To locate it, open your FTP client or cPanel, and you’ll be able to find the file in your public_html website directory.

There is not much to these files, so they won’t be hefty – probably only a few hundred bytes, if that.

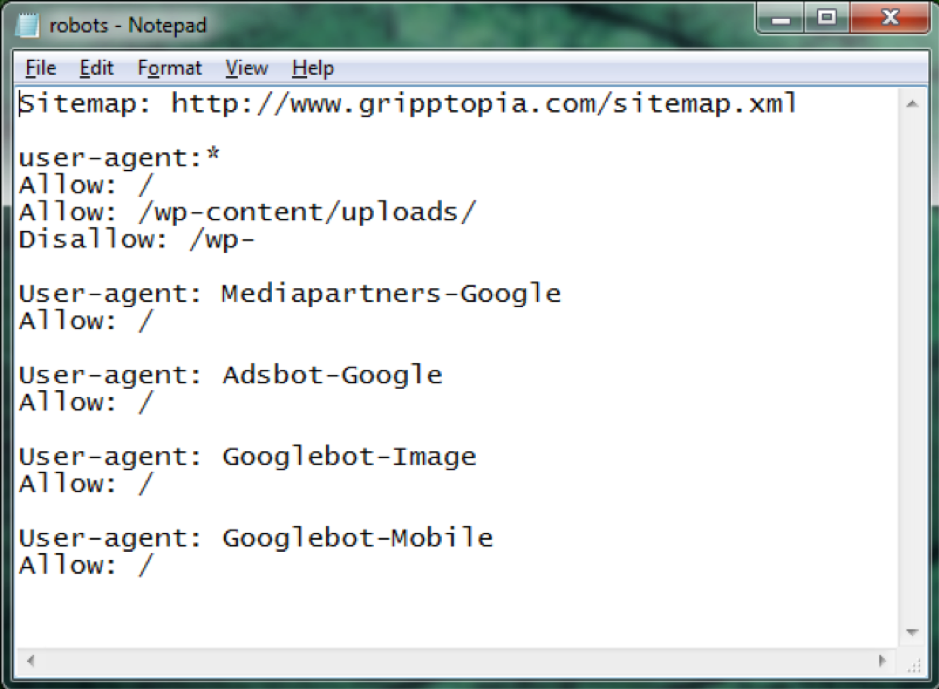

Once you open the file in your text editor, you will be greeted with something that looks a little like this:

If you aren’t able to find a file in your site’s inner workings, then you will have to create your own.

How to Put Together a Robots.txt File

Robots.txt is a super basic text file, so it is actually straightforward to create. All you will need is a simple text editor like Notepad. Open a new document and save the empty page as ‘robots.txt’.

Now log in to your cPanel and locate the public_html folder to access the site’s root directory. Once that is open, drag your file into it.

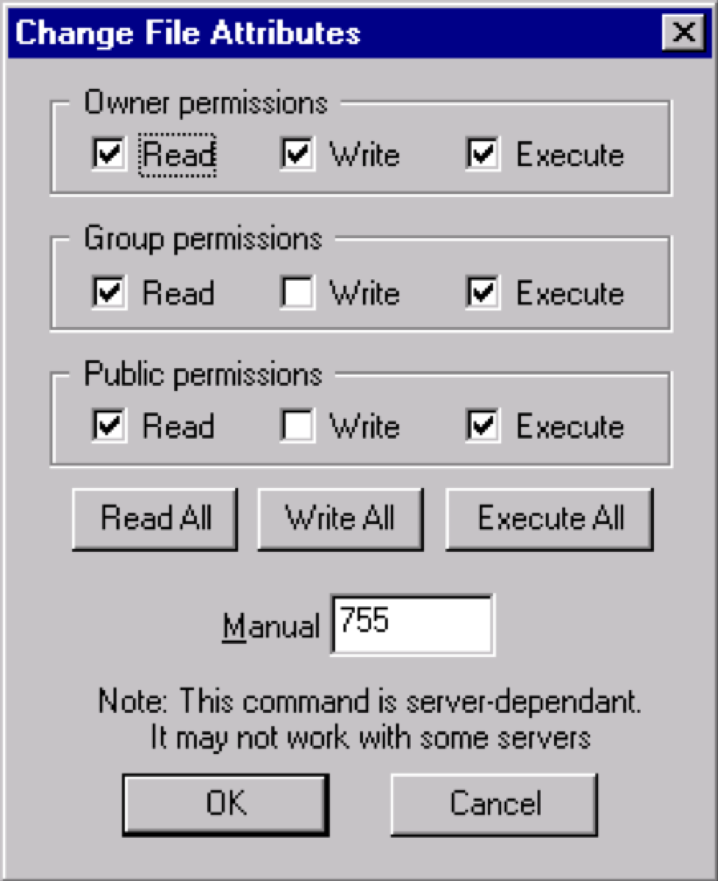

Finally, you must ensure that you have set the correct permissions for the file. Basically, as the owner, you will need to be able to read and write (edit) the file, while all other parties should only be able to read it.

The file should display a “0644” permission code.

If not, you will need to change this, so click on the file and select “file permission”.

Voila! You have a Robots.txt file.
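If you would rather set that permission code from a script than from the cPanel, here is a minimal sketch. It assumes a Unix-like server (Linux or macOS) and works in a temporary folder, so the path is a stand-in for your real public_html directory.

```python
import os
import stat
import tempfile

# Work in a throwaway folder so nothing on a real site is touched.
folder = tempfile.mkdtemp()
path = os.path.join(folder, "robots.txt")

# Create a minimal file that lets every bot crawl everything.
with open(path, "w", encoding="utf-8") as handle:
    handle.write("User-agent: *\nDisallow:\n")

# 0o644: the owner may read and write; group and everyone else may only read.
os.chmod(path, 0o644)

# Read the permission bits back to confirm what was applied.
mode = stat.S_IMODE(os.stat(path).st_mode)
print(oct(mode))
```

Crawlers fetch the file through your web server, so it needs to stay readable by others. It just should not be writable by them.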

Robots.txt Syntax

A robots.txt file is made up of multiple sections of ‘directives’, each beginning with a specified user-agent. The user agent is the name of the specific crawl bot that the code is speaking to.

There are two options available:

You can use a wildcard to address all search engines at once.

You can address specific search engines individually.

When a bot is deployed to crawl a website, it will be drawn to the blocks that are calling to it.

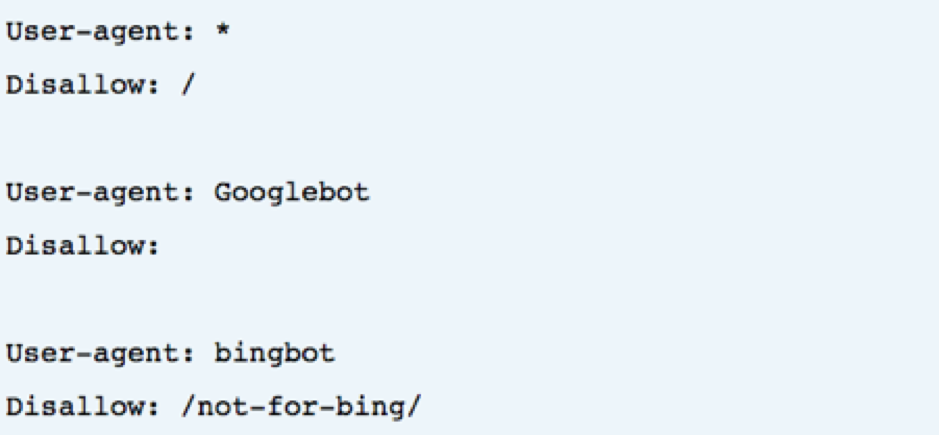

Here is an example:

User-Agent Directive

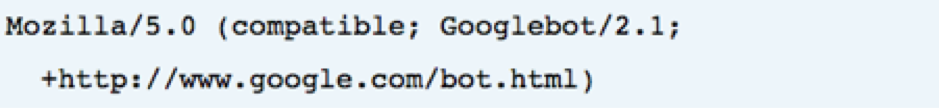

The first few lines in each block are the ‘user-agent’, which pinpoints a specific bot. The user-agent will match a specific bot’s name, so if you want to tell Googlebot what to do, for example, start with:

User-agent: Googlebot

Search engines always try to pinpoint specific directives that relate most closely to them.

So, for example, suppose you have got two blocks of directives, one for Googlebot-Video and one for Bingbot. A bot that comes along with the user-agent ‘Bingbot’ will follow the Bingbot instructions, whereas the ‘Googlebot-Video’ bot will pass over that block and go in search of the one that addresses it more specifically.
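To see this matching in action, here is a small sketch using Python’s built-in robots.txt parser. The paths and the example.com addresses are invented for illustration, and Python’s parser uses a simple name match in file order, so treat it as an approximation of how real search engines pick their block.

```python
from urllib.robotparser import RobotFileParser

# Two blocks, each addressed to a different bot (the paths are made up).
ROBOTS_TXT = """\
User-agent: Googlebot-Video
Disallow: /videos/

User-agent: Bingbot
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Each bot is only bound by the block that names it.
bing_private = parser.can_fetch("Bingbot", "https://www.example.com/private/report.html")
bing_videos = parser.can_fetch("Bingbot", "https://www.example.com/videos/demo.html")
gvideo_private = parser.can_fetch("Googlebot-Video", "https://www.example.com/private/report.html")
gvideo_videos = parser.can_fetch("Googlebot-Video", "https://www.example.com/videos/demo.html")

print("Bingbot:", bing_private, bing_videos)
print("Googlebot-Video:", gvideo_private, gvideo_videos)
```

Bingbot is kept out of /private/ but may visit /videos/, while Googlebot-Video gets the opposite treatment, because each one only listens to its own block.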

Most search engines have a few different bots; here is a list of the most common.

Host Directive

The host directive is supported only by Yandex at the moment, although there is some speculation that Google supports it too. This directive lets you decide whether to show the www. before a URL, using this block:

Host: poddigital.co.uk

Since Yandex is the only confirmed supporter of the directive, it is not advisable to rely on it. Instead, 301 redirect the hostnames you don't want to the ones that you do.

Disallow Directive

We will cover this in a more specific way a little later on.

The second line in a block of directives is Disallow. You can use this to specify which sections of the site shouldn’t be accessed by bots. An empty disallow means it is a free-for-all, and the bots can please themselves as to where they do and don’t visit.
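The difference between an empty Disallow and a filled-in one can be checked with Python’s built-in parser. This is only a sketch: the `can_crawl` helper, the ‘ExampleBot’ name, and the example.com addresses are our own placeholders.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, url: str, agent: str = "ExampleBot") -> bool:
    """Report whether the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# An empty Disallow: nothing is off limits.
open_file = "User-agent: *\nDisallow:\n"

# A filled-in Disallow: everything under /wp-admin/ is off limits.
admin_blocked = "User-agent: *\nDisallow: /wp-admin/\n"

print(can_crawl(open_file, "https://www.example.com/wp-admin/options.php"))
print(can_crawl(admin_blocked, "https://www.example.com/wp-admin/options.php"))
print(can_crawl(admin_blocked, "https://www.example.com/blog/"))
```

The first and third checks come back as allowed. Only the admin URL under the second file is refused.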

Sitemap Directive (XML Sitemaps)

Using the sitemap directive tells search engines where to find your XML sitemap.

However, probably the most useful thing to do would be to submit your sitemap to each search engine’s own webmaster tools. This is because each one can tell you a lot of valuable information about your website.

However, if you are short on time, the sitemap directive is a viable alternative.
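For reference, this is roughly what a sitemap line looks like and how a crawler might read it. The sketch relies on Python’s built-in parser (its `site_maps()` method needs Python 3.8 or newer), and the sitemap address is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line sits outside the user-agent blocks and takes a full URL.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every sitemap URL listed in the file.
sitemaps = parser.site_maps()
print(sitemaps)
```

You can list more than one Sitemap line if your site has several sitemaps.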

Crawl-Delay Directive

Yahoo, Bing, and Yandex can be a little trigger happy when it comes to crawling, but they do respond to the crawl-delay directive, which keeps them at bay for a while.

Applying this line to your block:

Crawl-delay: 10

means that you can make the search engines wait ten seconds before crawling the site or ten seconds before they re-access the site after crawling – it is basically the same, but slightly different depending on the search engine.
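Here is a short sketch of how that line is read, again with Python’s built-in parser as a stand-in for a real crawler. How a given search engine actually applies the delay is up to that engine.

```python
from urllib.robotparser import RobotFileParser

# Only Bingbot is asked to slow down in this made-up file.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The delay is reported in seconds for bots that have one set.
bing_delay = parser.crawl_delay("Bingbot")

# Bots without a matching block get no delay at all (None).
other_delay = parser.crawl_delay("Googlebot")

print(bing_delay, other_delay)
```

Because the directive lives inside a user-agent block, you can slow down one eager bot without holding back the others.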

Why Use Robots.txt

Now that you know about the basics and how to use a few directives, you can put together your file. However, this next step will come down to the kind of content on your site.

Robots.txt is not an essential element to a successful website; in fact, your site can still function correctly and rank well without one.

However, there are several key benefits you must be aware of before you dismiss it:

Point Bots Away From Private Folders: Preventing bots from checking out your private folders will make them much harder to find and index.

Keep Resources Under Control: Each time a bot crawls through your site, it sucks up bandwidth and other server resources. On sites with tons of content and lots of pages (e-commerce sites, for example, can have thousands of pages), these resources can be drained really quickly. You can use robots.txt to make it difficult for bots to access individual scripts and images; this will retain valuable resources for real visitors.

Specify Location Of Your Sitemap: This is quite an important point. You want to let crawlers know where your sitemap is located so they can scan through it.

Keep Duplicated Content Away From SERPs: By adding a rule to your robots.txt, you can keep crawlers away from pages that contain duplicated content.

You will naturally want search engines to find their way to the most important pages on your website. By politely cordoning off specific pages, you can control which pages are put in front of searchers (be sure to never completely block search engines from seeing certain pages, though).

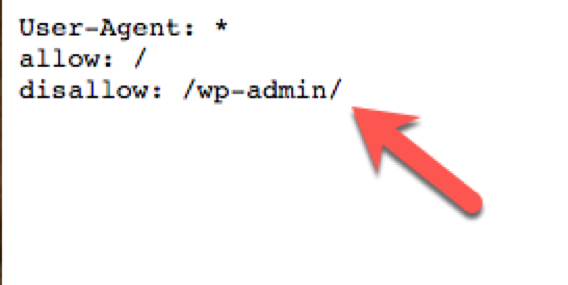

For example, if we look back at the POD Digital robots file, we see that this URL:

poddigital.co.uk/wp-admin has been disallowed.

Since that page is made just for us to log in to the control panel, it makes no sense to allow bots to waste their time and energy crawling it.

Noindex

In July 2019, Google announced that they would stop supporting the noindex directive in robots.txt, along with other unsupported and unpublished rules that many of us had previously relied on.

Many of us decided to look for alternative ways to apply the noindex directive, and below you can see a few options you might decide to go for instead:

Noindex Tag / Noindex HTTP Response Header: This rule can be implemented in two ways. The first is as an HTTP response header, using X-Robots-Tag. The second is as a <meta> tag placed within the page’s <head> section.

Your <meta> tag should look like the example below:

<meta name="robots" content="noindex">

TIP: Bear in mind that if this page has been blocked by robots.txt file, the crawler will never see your noindex tag, and there is still a chance that this page will be presented within SERPs.
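The header version is handy for files that have no <head> section, such as PDFs. Below is a minimal sketch of a page served with that header, written as a tiny Python WSGI app. The app name and page content are invented, and your own server or CMS will have its own way of adding headers.

```python
def noindex_app(environ, start_response):
    """Tiny WSGI app that serves a page search engines should not index."""
    body = b"<html><head><title>Thank you</title></head><body>Thanks!</body></html>"
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
        # Header-based equivalent of <meta name="robots" content="noindex">.
        ("X-Robots-Tag", "noindex"),
    ]
    start_response("200 OK", headers)
    return [body]


# Call the app directly, the way a WSGI server would, and capture the headers.
captured = {}


def fake_start_response(status, headers):
    captured["status"] = status
    captured["headers"] = dict(headers)


response_body = b"".join(noindex_app({}, fake_start_response))
print(captured["headers"]["X-Robots-Tag"])
```

To try it in a browser, the same app could be handed to a WSGI server such as the one in Python’s standard `wsgiref.simple_server` module.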

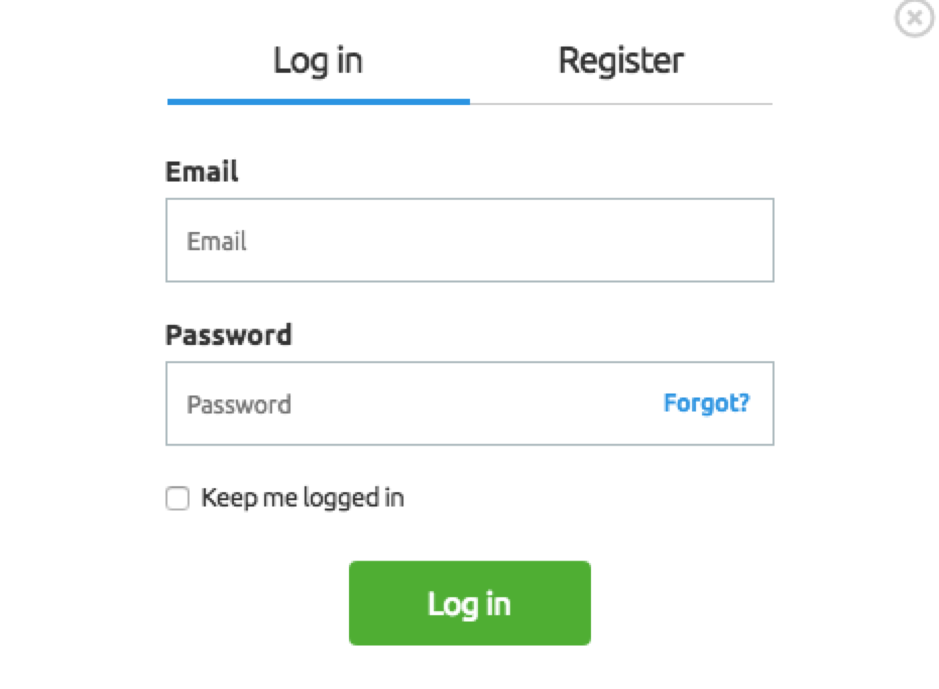

Password Protection: Google states that in most cases, if you hide a page behind a login, it should be removed from Google’s index. The only exception is if you use schema markup to indicate that the page is subscription or paywalled content.

404 & 410 HTTP Status Code: 404 & 410 status codes represent the pages that no longer exist. Once a page with 404/410 status is crawled and fully processed, it should be dropped automatically from Google’s index.

You should crawl your website systematically to reduce the risk of having 404 & 410 error pages and where needed use 301 redirects to redirect traffic to an existing page.

Disallow rule in robots.txt: By adding a page specific disallow rule within your robots.txt file, you will prevent search engines from crawling the page. In most cases, your page and its content won’t be indexed. You should, however, keep in mind that search engines are still able to index the page based on information and links from other pages.

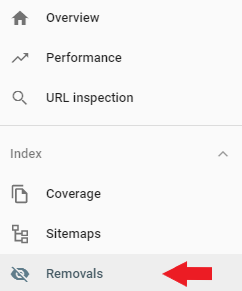

Search Console Remove URL Tool: This alternative route does not solve the indexing issue in full, as the Search Console Remove URL Tool only removes the page from SERPs for a limited time.

However, this might give you enough time to prepare further robots rules and tags to remove pages in full from SERPs.

You can find the Remove URL Tool on the left-hand side of the main navigation on Google Search Console.

Noindex vs. Disallow

Many of you probably wonder whether it is better to use the noindex tag or the disallow rule in your robots.txt file. In the previous part, we covered why the noindex rule is no longer supported in robots.txt and which alternatives exist.

If you want to ensure that one of your pages is not indexed by search engines, you should definitely look at the noindex meta tag. It allows the bots to access the page, but the tag will let robots know that this page should not be indexed and should not appear in the SERPs.

The disallow rule might not be as effective as noindex tag in general. Of course, by adding it to robots.txt, you are blocking the bots from crawling your page, but if the mentioned page is linked with other pages by internal and external links, bots might still index this page based on information provided by other pages/websites.

You should remember that if you disallow the page and also add the noindex tag, robots will never see your noindex tag, so the page can still appear in the SERPs.

Using Regular Expressions & Wildcards

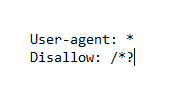

Ok, so now we know what a robots.txt file is and how to use it, but you might think, “I have a big eCommerce website, and I would like to disallow all the pages which contain question marks (?) in their URLs.”

This is where we would like to introduce you to wildcards, which can be implemented within robots.txt. Currently, you have two types of wildcards to choose from.

* Wildcards - where the * wildcard character will match any sequence of characters. This type of wildcard is a great solution for URLs that follow the same pattern. For example, you might wish to disallow crawling of all filter pages which include a question mark (?) in their URLs.

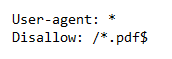

$ Wildcards - where $ will match the end of your URL. For example, if you want to ensure that your robots file is disallowing bots from accessing all PDF files, you might want to add a rule like the one presented below:

User-agent: *

Disallow: /*.pdf$

Let’s quickly break down the example above. Your robots.txt allows any User-agent bot to crawl your website, but it disallows access to all URLs that end in .pdf.
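To get a feel for how the two wildcards behave, here is a small Python sketch that checks a URL path against a rule. The `rule_matches` helper is our own simplified illustration, not code from any search engine, and Python’s built-in robots.txt parser does not understand these wildcards, which is why the sketch does the matching by hand.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a URL path against a robots.txt rule that may use * and $."""
    # A trailing $ means the rule must match right up to the end of the URL.
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern

    # Every * stands for "any sequence of characters"; the rest is literal.
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))

    # Without $, a rule only has to match the beginning of the path.
    matcher = re.fullmatch if anchored else re.match
    return matcher(regex, path) is not None


print(rule_matches("/*?", "/shoes?colour=red"))               # filter page with a question mark
print(rule_matches("/*?", "/shoes"))                          # plain category page
print(rule_matches("/*.pdf$", "/files/guide.pdf"))            # ends in .pdf
print(rule_matches("/*.pdf$", "/files/guide.pdf?download=1"))  # does not end in .pdf
```

Note the last case: because of the $, a PDF address with extra parameters after .pdf is not caught by the rule.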

Mistakes to Avoid

We have talked a little bit about the things you could do and the different ways you can operate your robots.txt. We are going to delve a little deeper into each point in this section and explain how each may turn into an SEO disaster if not utilized properly.

Do Not Block Good Content

It is important not to block, via the robots.txt file or a noindex tag, any good content that you want the public to see. We have seen many mistakes like this in the past, and they have hurt SEO results. You should thoroughly check your pages for noindex tags and disallow rules.
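One way to run that check on the disallow side is to keep a short list of pages that must stay crawlable and test it against your draft file before uploading. The sketch below is only a starting point: the `blocked_urls` helper, the draft rules, and the URLs are all invented, and Python’s built-in parser ignores wildcard rules.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the URLs from `urls` that the draft robots.txt would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Draft file, with one rule that is broader than intended.
DRAFT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /checkout/
"""

# Pages we definitely want search engines to reach.
MUST_STAY_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/blog/robots-txt-guide/",
    "https://www.example.com/checkout/",
]

problems = blocked_urls(DRAFT, MUST_STAY_CRAWLABLE)
print(problems)
```

Anything that turns up in `problems` is a page you meant to keep visible but that the draft would hide from crawlers.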

Overusing Crawl-Delay

We have already explained what the crawl-delay directive does, but you should avoid using it too often as you are limiting the pages crawled by the bots. This may be perfect for some websites, but if you have got a huge website, you could be shooting yourself in the foot and preventing good rankings and solid traffic.

Case Sensitivity

The robots.txt file is case sensitive, so you have to remember to name it in the right way. The file must be called ‘robots.txt’, all in lower case. Otherwise, it won’t work!

Using Robots.txt to Prevent Content Indexing

We have covered this a little bit already. Disallowing a page is the best way to try and prevent the bots crawling it directly.

But it won't work in the following circumstances:

If the page has been linked from an external source, the bots will still flow through and index the page.

Illegitimate bots will still crawl and index the content.

Using Robots.txt to Shield Private Content

Some private content, such as PDFs or thank you pages, is indexable even if you point the bots away from it. One of the best methods to go alongside the disallow directive is to place all of your private content behind a login.

Of course, it does mean that it adds a further step for your visitors, but your content will remain secure.

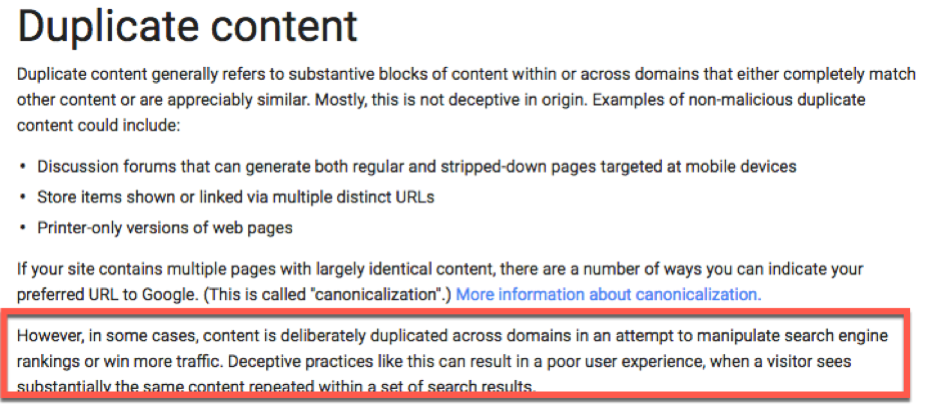

Using Robots.txt to Hide Malicious Duplicate Content

Duplicate content is sometimes a necessary evil — think printer-friendly pages, for example.

However, Google and the other search engines are smart enough to know when you are trying to hide something. In fact, doing this may actually draw more attention to it, because Google recognizes the difference between a printer-friendly page and someone trying to pull the wool over their eyes.

There is still a chance it may be found anyway.

Here are three ways to deal with this kind of content:

Rewrite the Content – Creating exciting and useful content will encourage the search engines to view your website as a trusted source. This suggestion is especially relevant if the content is a copy and paste job.

301 Redirect – 301 redirects inform search engines that a page has transferred to another location. Add a 301 to a page with duplicate content and divert visitors to the original content on the site.

Rel="canonical" – This is a tag that informs Google of the original location of duplicated content; this is especially important for an e-commerce website where the CMS often generates duplicate versions of the same URL.

The Moment of Truth: Testing Out Your Robots.txt File

Now is the time to test your file to ensure everything is working in the way you want it to.

Google’s Webmaster Tools has a robots.txt test section, but it is currently only available in the old version of Google Search Console. You can no longer access the robots.txt tester from the updated version of GSC (Google is working hard on adding new features to GSC, so maybe in the future we will be able to see the robots.txt tester in the main navigation).

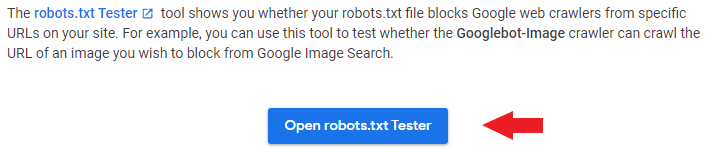

So first, you will need to visit the Google Support page, which gives an overview of what the robots.txt tester can do.

There you will also find the robots.txt Tester tool:

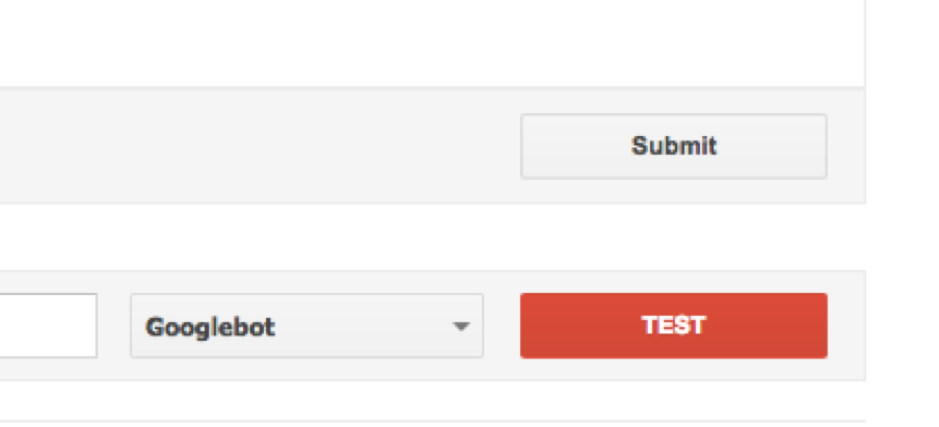

Choose the property you are going to work on - for example, your business website from the dropdown list.

Remove anything currently in the box, replace it with your new robots.txt file and click ‘Test’.

If the ‘Test’ changes to ‘Allowed’, then you have got yourself a fully functioning robots.txt.

Creating your robots.txt file correctly means you are improving your SEO and the user experience of your visitors.

By allowing bots to spend their days crawling the right things, they will be able to organize and show your content in the way you want it to be seen in the SERPs.

How To Resources for CMS Platforms

WordPress Robots.txt

Magento Robots.txt

Shopify Robots.txt

Wix Robots.txt

Joomla! Robots.txt
