Website crawlers visit your site often over the life of your website. They look for new content, follow links, and collect data on your website to better understand how it serves search intent.

These crawlers might run into errors when navigating your site, like duplicate content and broken links. Fixing these errors as soon as possible is important to maintaining the health of your website and your rankings on Google.

In this guide, we’ve included 5 critical crawl errors and how to fix them.

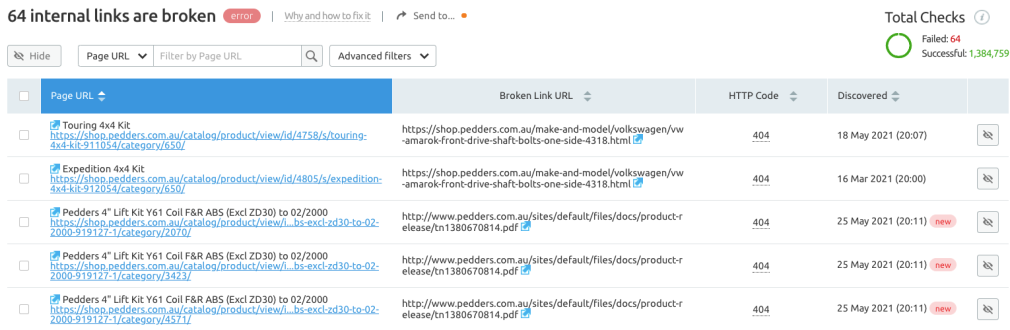

Error 1: Broken Internal Links

Internal links lead a user from one page to another on your website. Internal links are good for SEO, can help establish a site structure, and spread link equity.

Broken links are likely due to incorrect URLs or pages that have been removed. In these cases, the website hasn’t been updated to reflect the changes.

Why are Broken Links an issue?

Broken links prevent users from navigating your website, which can negatively impact user experience (a key factor in your website rankings).

Broken links also prevent crawlers from exploring and indexing your website. With millions of websites to crawl, each bot only has a limited number of pages it can crawl before it has to move on to the next website. This is commonly referred to as your “crawl budget.”

Every request a bot spends on a broken link comes out of that “budget,” leaving fewer requests for the pages you actually want crawled and indexed.

How to fix a Broken Link Issue

You can use the Site Audit tool to identify your broken links. Once you’ve run a site audit, you can filter for any link errors the tool has indicated:

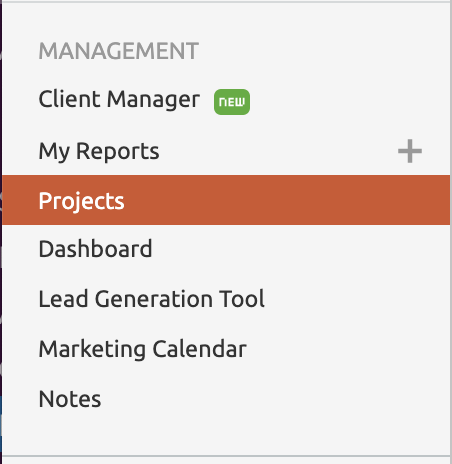

To run an audit, you’ll need to set up a project for your domain. Navigate to “Projects,” under “Management” in the main toolbar on the left:

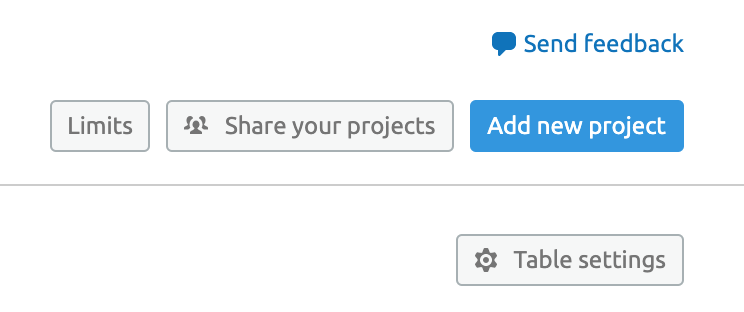

If you don’t already have a project set up for your site, create a new project by selecting the “Add new project” button at the top right of the page:

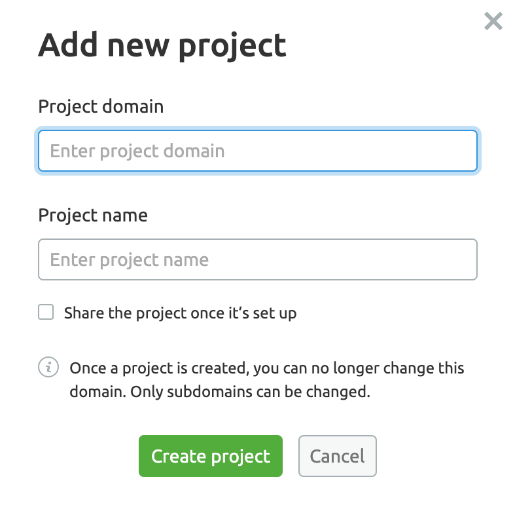

You’ll be prompted to enter your website’s domain and name the project:

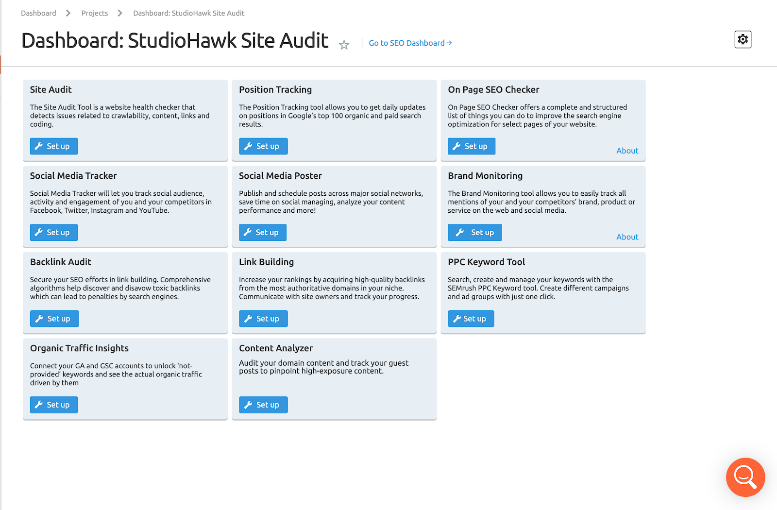

You’ll be taken to the project dashboard, where you can select the Site Audit tool:

Use the Site Audit tool to:

- Remove links to pages that have been removed.
- Identify pages where the broken links should be pointing to, and update the links so they work.
- Run the Site Audit again to check that the updates have been successful.

Avoid other internal linking issues by checking out our guide on the most common internal link-building mistakes.
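If you want to see the idea behind a broken internal link check, here is a minimal sketch in Python. It is not how the Site Audit tool works internally. It assumes you already have the HTML of each live page in a dictionary, and the function name `find_broken_internal_links` and the `example.com` URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def find_broken_internal_links(pages, site_root):
    """Return (source_url, target_url) pairs whose target is not a known page.

    `pages` maps each live URL on the site to its HTML source (an assumption
    made for this sketch; a real crawler would fetch the pages itself).
    """
    root_host = urlparse(site_root).netloc
    broken = []
    for source_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            # Resolve relative links and drop any #fragment.
            target = urljoin(source_url, href).split("#")[0]
            if urlparse(target).netloc != root_host:
                continue  # external link, out of scope here
            if target not in pages:
                broken.append((source_url, target))
    return broken


# Hypothetical two-page site used only for illustration.
pages = {
    "https://example.com/": '<a href="/about">About</a> <a href="/old-page">Old</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="https://other.example/">Ext</a>',
}
broken_links = find_broken_internal_links(pages, "https://example.com/")
print(broken_links)
```

Each pair in the result tells you which page contains the broken link and where it points, which is the information you need to either remove the link or update its URL.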

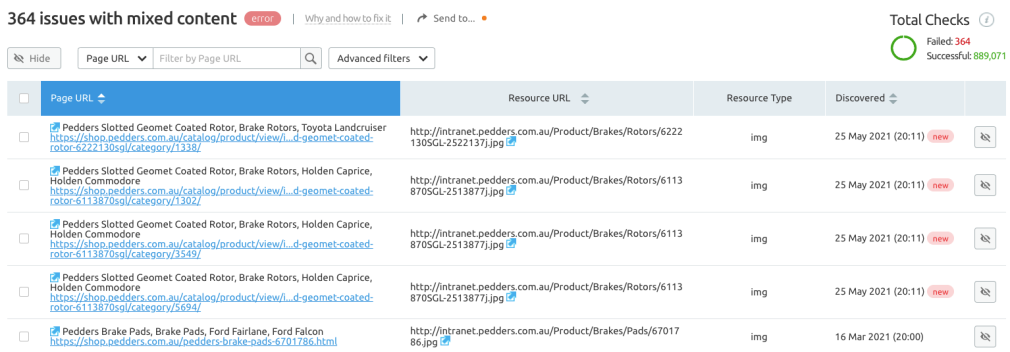

Error 2: Mixed Content

A mixed-content issue occurs when a webpage itself loads over HTTPS (Hypertext Transfer Protocol Secure), but some of the content on the page (images, videos, etc.) loads over HTTP.

Why is Mixed Content an issue?

When this issue occurs, the user will receive a warning pop-up indicating they may be downloading unsecured content. This could prompt the user to leave the page, leading to a high bounce rate.

Mixed content negatively affects the user experience and indicates to Google that your page has security issues.

How to fix a Mixed Content issue

You can use the Site Audit tool to find any pages with a mixed content issue:

You’ll need to replace all unsecured HTTP links on your website with newer and safer HTTPS links, then embed your resources with the same HTTPS protocol links.
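As a rough illustration of what a mixed content check looks for, the sketch below scans a page's HTML for resources that are embedded with an `http://` URL. The tag list, the class name `MixedContentFinder`, and the sample page are assumptions made for this example, not a description of the Site Audit tool.

```python
from html.parser import HTMLParser

# Tags that embed a resource, and the attribute holding its URL.
# This list is an assumption for the sketch and is not exhaustive.
RESOURCE_ATTRS = {
    "img": "src", "script": "src", "iframe": "src",
    "video": "src", "audio": "src", "source": "src",
    "link": "href",
}


class MixedContentFinder(HTMLParser):
    """Records embedded resources that are requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        attr = RESOURCE_ATTRS.get(tag)
        if attr is None:
            return  # e.g. <a href>: an ordinary link, not an embedded resource
        rel = (attr_map.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" not in rel:
            return  # only stylesheets are treated as embedded here
        url = (attr_map.get(attr) or "").strip()
        if url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


def upgrade_to_https(url):
    """Swap the scheme; only valid if the host really serves the file over HTTPS."""
    if url.lower().startswith("http://"):
        return "https://" + url[len("http://"):]
    return url


# Hypothetical page source used only for illustration.
page = """
<link rel="stylesheet" href="https://example.com/site.css">
<img src="http://example.com/logo.png">
<script src="https://example.com/app.js"></script>
<a href="http://example.com/page">ordinary link</a>
"""
insecure = find_mixed_content(page)
print(insecure)
print([upgrade_to_https(url) for _, url in insecure])
```

Before swapping a URL to HTTPS, confirm that the resource is actually available at the HTTPS address; otherwise you trade a mixed content warning for a broken image or script.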

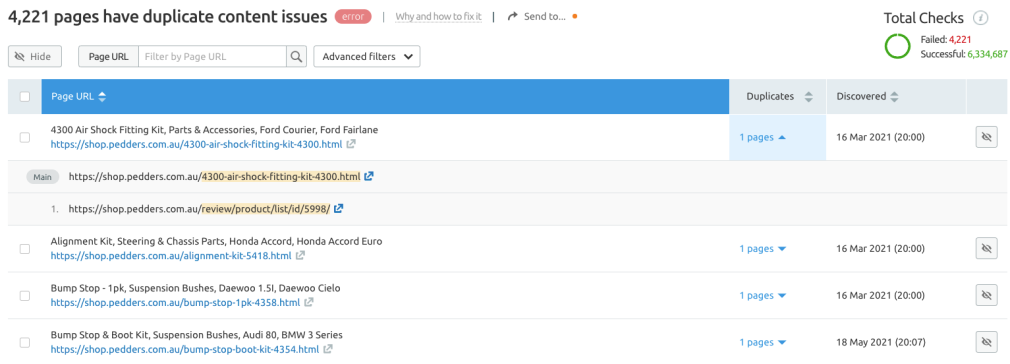

Error 3: Duplicate Content

Duplicate content is flagged when a site crawler finds content on your site that is 85% or more similar to other content on your website.

This can happen if you service different countries around the world with the same content in multiple languages or have multiple landing pages with similar content.
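To get a feel for what an 85% similarity threshold means in practice, you can compare two pieces of text yourself. The sketch below uses Python's standard `difflib` module on word sequences. This particular similarity measure is an assumption chosen for illustration; the measure an audit tool or search engine actually uses is not stated here and may differ.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.85  # the 85% figure mentioned above


def similarity(text_a, text_b):
    """Return a 0.0 to 1.0 similarity ratio based on matching word sequences."""
    words_a = text_a.lower().split()
    words_b = text_b.lower().split()
    return SequenceMatcher(None, words_a, words_b).ratio()


def is_duplicate(text_a, text_b, threshold=DUPLICATE_THRESHOLD):
    return similarity(text_a, text_b) >= threshold


# Hypothetical page copy used only for illustration.
page_a = "We sell handmade oak tables and chairs with free delivery across the country"
page_b = "We sell handmade oak tables and chairs with free delivery across the region"
page_c = "Read our guide to choosing a laptop for video editing"

print(is_duplicate(page_a, page_b))  # the two pages differ by a single word
print(is_duplicate(page_a, page_c))  # unrelated copy
```

Landing pages that differ only in a city or product name tend to score very high on a comparison like this, which is why they are a common source of duplicate content flags.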

Why is Duplicate Content an Issue?

When search engines see duplicate content, they can become confused over which version to crawl or index and might exclude both.

Search engines may think you’re trying to manipulate the algorithm and may downgrade your ranking or ban your website from search results.

Link equity may also be diluted through duplication, which can impact your overall page authority score.

How to fix a Duplicate Content Issue

Once you’ve run a site audit, you can use the Site Audit Tool to see how many duplicate pages your website has:

To fix them, you can:

- Add a canonical tag to the page you want Google to crawl and index. Canonical tags indicate the original page to search engines, so they’ll know which one to show in the SERP.
- Use a 301 redirect from the duplicate page to the original page.
- Instruct Googlebot to handle the URL parameters differently using the Google Search Console.

The simplest way to avoid this issue is to create unique content for each page of your website.
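For the canonical tag option, the tag itself is a `<link rel="canonical">` element placed in the page's `<head>`. The sketch below builds that tag and reads it back out of a page so you can verify which URL a page declares as its original. The helper names `canonical_tag` and `find_canonical` and the `example.com` URL are hypothetical.

```python
from html import escape
from html.parser import HTMLParser


def canonical_tag(url):
    """Build the <link> element that declares `url` as the original page."""
    return f'<link rel="canonical" href="{escape(url, quote=True)}">'


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first rel="canonical" link on a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        rel = (attr_map.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attr_map.get("href")


def find_canonical(html):
    """Return the canonical URL a page declares, or None if it has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical original page URL used only for illustration.
original = "https://example.com/shoes"
tag = canonical_tag(original)
duplicate_page = f"<html><head>{tag}</head><body>Same content</body></html>"

print(tag)
print(find_canonical(duplicate_page))
```

Running `find_canonical` over a group of near-identical pages is a quick way to confirm they all point to the same original URL.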

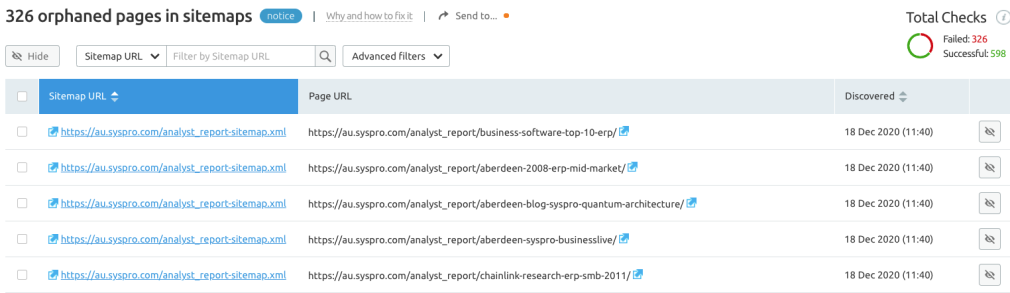

Error 4: Orphaned Pages

Orphaned pages are not internally linked to any other page on your website. Users cannot access these pages via your website’s main menu, sitemap, or a link from another page.

The only way to access an orphaned page is through a direct link.

Why are Orphaned Pages an issue?

If search engines cannot find an orphaned page, the page will not be indexed and will not appear on a SERP.

Adding orphaned pages to your sitemap.xml file wastes your crawl budget because it will take Google’s bots longer to find and crawl them.

How to fix Orphaned Pages

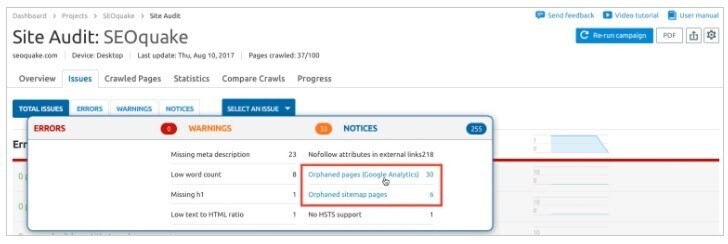

The Site Audit tool flags orphaned pages that are listed in your sitemap.xml file.

To see them, click on the Issues tab, and then the ‘Select issues’ button:

Remove orphan pages if they’re no longer required or useful for your site.

If you still want to keep the page, add an internal link to it from another page on your website, and make it discoverable in the menu system.
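Conceptually, finding orphaned pages is a set comparison: take every URL listed in your sitemap and subtract every URL that at least one other page links to. The sketch below shows that comparison under the assumption that you already have both lists. The function name `find_orphan_pages` and the sample paths are hypothetical.

```python
def find_orphan_pages(sitemap_urls, internal_links, entry_points=()):
    """Return sitemap URLs that no other page on the site links to.

    `internal_links` is a list of (source_url, target_url) pairs.
    `entry_points` are pages such as the homepage that visitors reach
    directly and that should never be reported as orphans.
    """
    linked_to = {
        target for source, target in internal_links if source != target
    }
    return sorted(set(sitemap_urls) - linked_to - set(entry_points))


# Hypothetical site data used only for illustration.
sitemap_urls = ["/", "/about", "/blog", "/old-landing-page"]
internal_links = [
    ("/", "/about"),
    ("/", "/blog"),
    ("/blog", "/"),
]

orphans = find_orphan_pages(sitemap_urls, internal_links, entry_points=["/"])
print(orphans)
```

Each URL in the result is a candidate for one of the two fixes above: remove it, or link to it from a relevant page.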

For more detail on this process, read about how to find orphan pages with a site audit.

Error 5: Lack of Backlinks & Toxic Backlinks

Backlinks function like an online referral. You earn backlinks when an external site links to yours. They are a sign of trust and authority in the eyes of search engines.

Having a link from an external website with a good page authority transfers some of that authority back to your website.

Why is a Lack of Backlinks and Toxic Backlinks an Issue?

A lack of backlinks implies your website has little page authority and that you are not an authority in your field.

Toxic backlinks are links from sites that weaken your page authority and damage your SEO ranking. You’ll want to avoid backlinks from mirror sites, a domain with low authority, or a website with a poor layout.

How do you fix a Lack of Backlinks or Toxic Backlinks?

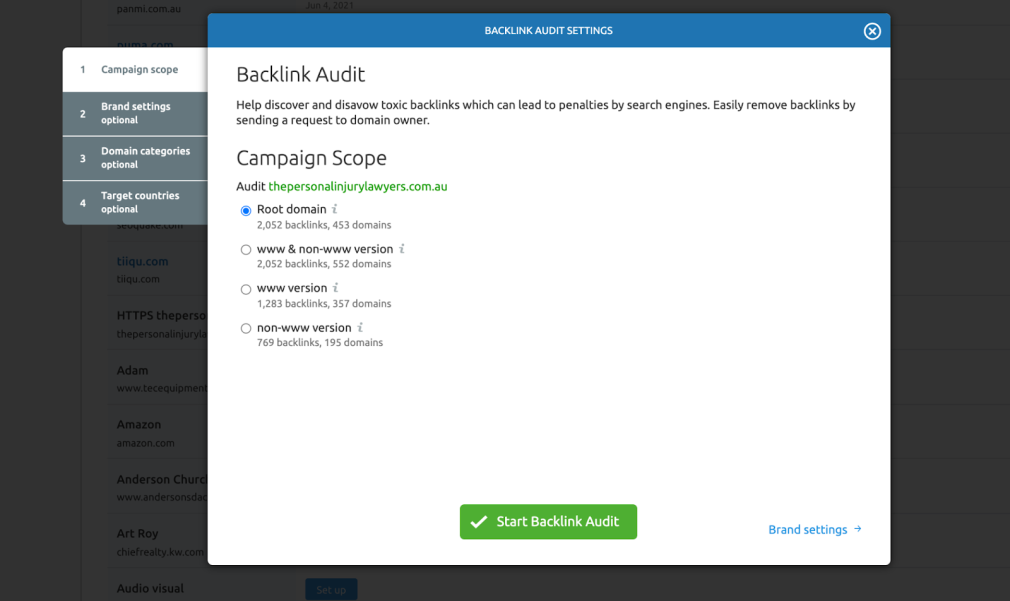

You’ll start with the Backlink Audit tool to discover whether either type of link issue is present.

If you’ve already created a project for your domain, you’ll be able to run the Backlink Audit tool from your project dashboard. Once open, the tool will prompt you to set the crawl’s scope, target country, and more:

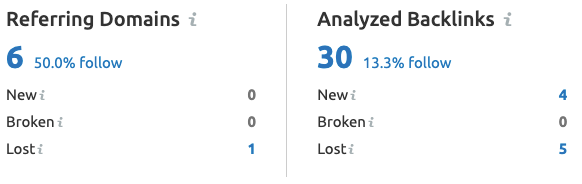

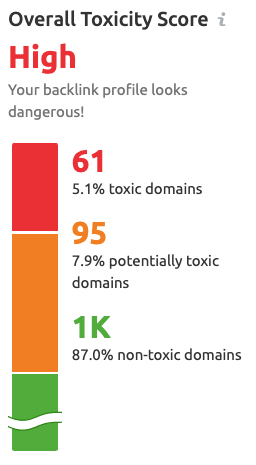

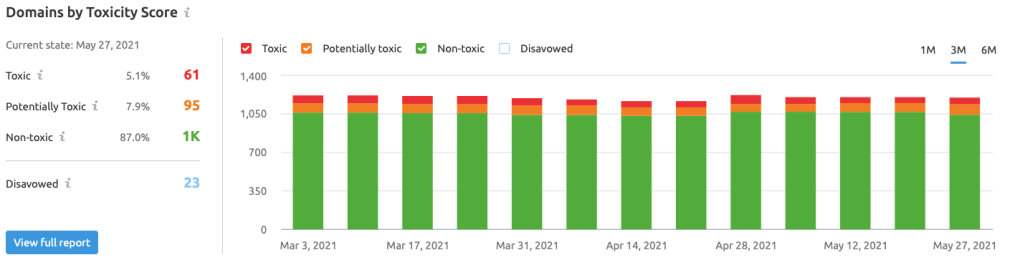

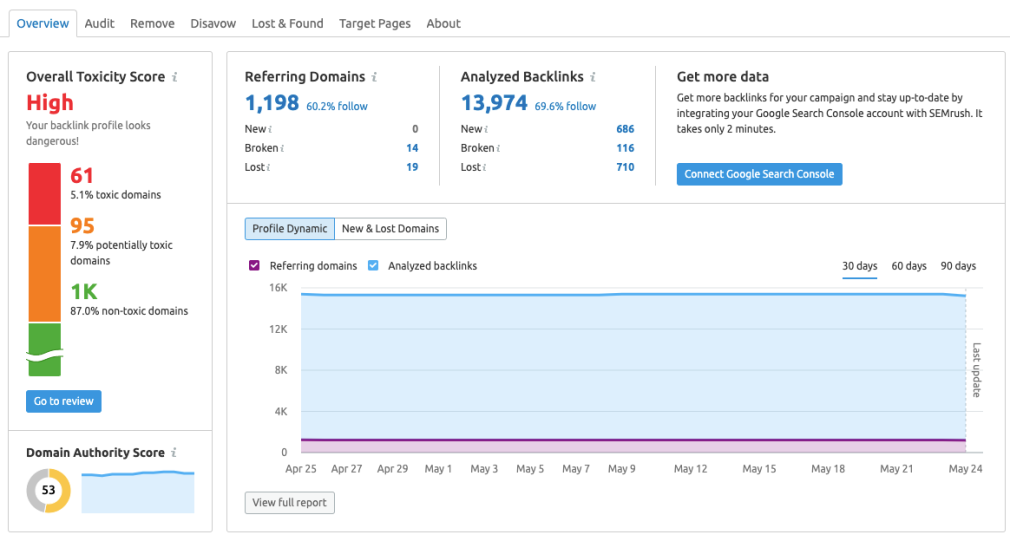

Once the audit is complete, you’ll be able to see how many backlinks your site has earned, the domains pointing back to your site, and which ones are toxic:

The Backlink Audit tool “grades” and sorts toxic backlinks on a scale of 0 to 100, with 0 as the best score.

Semrush performs over 50 checks to come up with a toxicity score. You can see these checks by hovering your cursor over the toxic link.

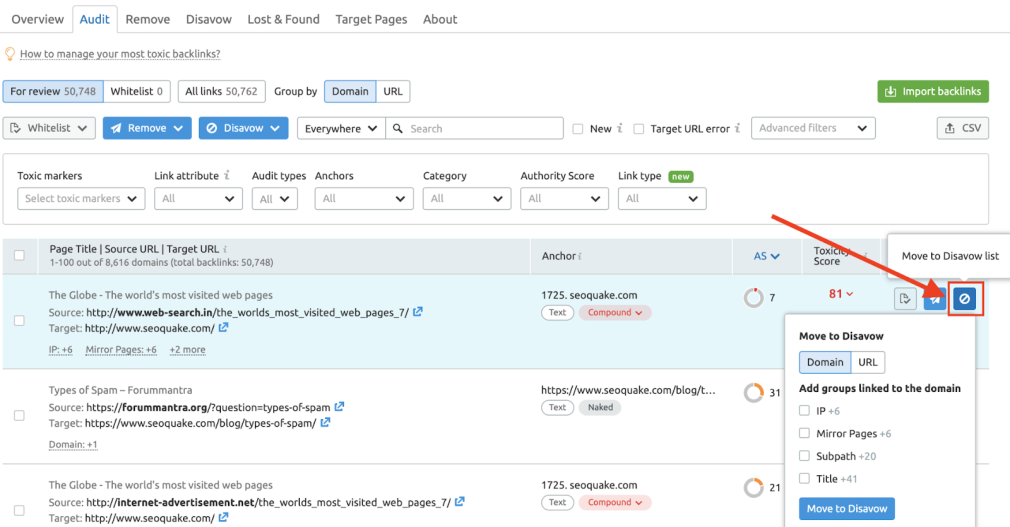

What you do with these toxic links can depend on the score and the reasons they were determined to be toxic.

You can put them on the remove list to have them manually removed, or you can ask Google to disavow them, so they do not affect the page rank of your website.
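If you go the disavow route, Google expects a plain text file with one entry per line: either a full URL or a whole domain written as `domain:example.com`, with optional comment lines starting with `#`. The sketch below builds such a file from a list of audited links. The input structure, the `min_score` cut-off of 60, and the sample domains are assumptions for illustration; choose your own threshold after reviewing the links.

```python
from urllib.parse import urlparse


def build_disavow_file(audited_links, min_score):
    """Return disavow file text covering domains at or above `min_score`.

    `audited_links` is a list of dicts with "url" and "score" keys, where
    a higher score means more toxic (0 is best, 100 is worst).
    """
    domains = set()
    for link in audited_links:
        host = urlparse(link["url"]).hostname
        if host and link["score"] >= min_score:
            domains.add(host)
    lines = ["# Domains disavowed after a backlink audit"]
    lines += [f"domain:{domain}" for domain in sorted(domains)]
    return "\n".join(lines) + "\n"


# Hypothetical audit results used only for illustration.
audited_links = [
    {"url": "http://spam.example/page-a", "score": 92},
    {"url": "http://spam.example/page-b", "score": 88},
    {"url": "https://blog.example/review", "score": 12},
]

disavow_text = build_disavow_file(audited_links, min_score=60)
print(disavow_text)
```

Disavowing at the domain level, as this sketch does, covers every link from that site. If only a single page is the problem, list its full URL on a line by itself instead.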

If the tool flags some links as toxic, but you know that the linking websites are trustworthy, you can add these links to your Whitelist.

More detail about backlink auditing can be found in our knowledge base.

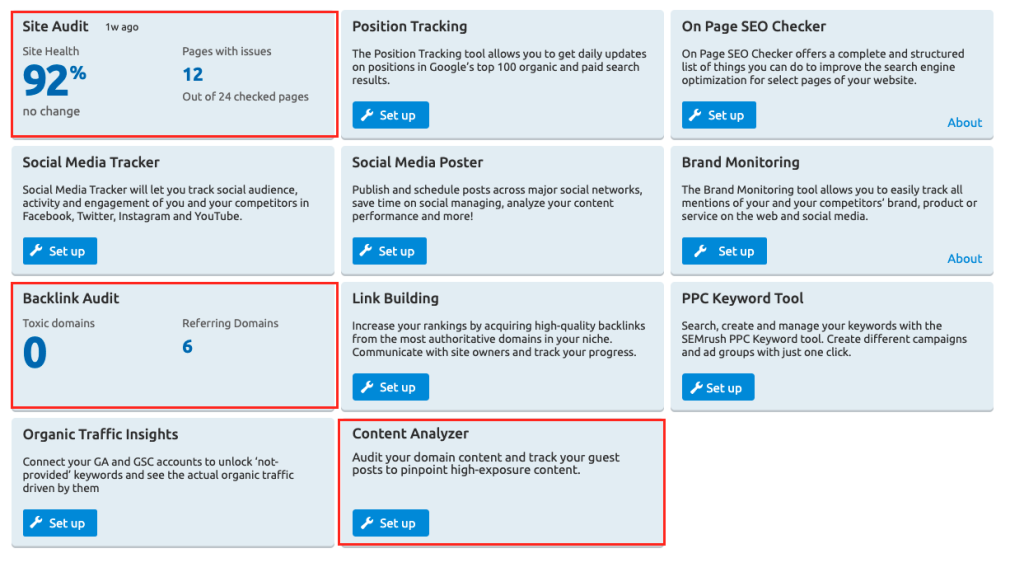

Semrush Tools that reveal Site Errors

There is an incredible array of Semrush tools to help you address all kinds of technical SEO issues, including the Site Audit tool, the Backlink Audit tool, and the Content Analyzer.

Site Audit Tool

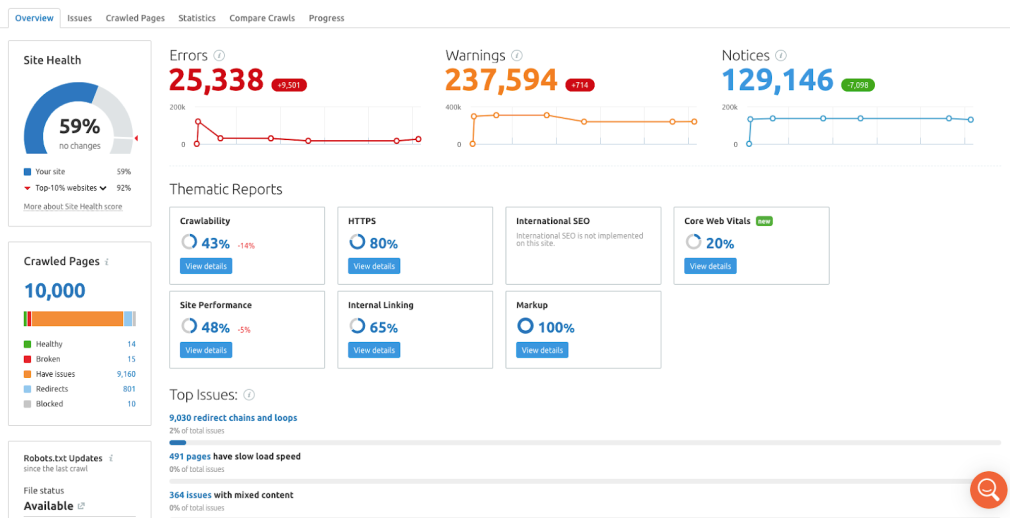

The Site Audit tool checks for over 120 on-page and technical issues when crawling your site. Use this tool to check for common issues like broken links, hreflang implementation, crawlability, and much more.

Monitoring your website’s health with a site audit is an important process that should be done regularly. Site audits help you catch site errors early and often, and the Site Audit tool offers suggestions to help you resolve any issues it finds.

You can crawl up to 100 pages a month for free. Check out our guide to the Site Audit tool on our knowledge base.

Backlink Audit Tool

The Backlink Audit tool checks the number and quality of the backlinks pointing to your site. You can then whitelist or disavow the backlinks you find to help boost your website’s page authority.

You can also further analyze toxic backlinks and use the tool to request that such links be removed from external sites.

Content Analyzer

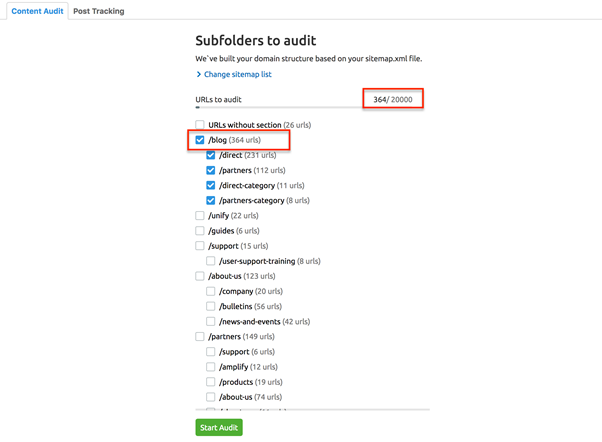

The Content Analyzer tool works in 2 steps. The first is a content audit with the Content Audit tool.

This tool audits your website’s content by exploring its sitemap and checking subfolders for URLs and internal linking. It will need your sitemap or robots.txt file to run this report.

In the example above, the tool shows that there are 364 URLs that need auditing under the “Blogs” subfolder.
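To see how a per-subfolder URL count like this can be derived from a sitemap, here is a minimal sketch using Python's standard XML parser. It assumes a standard sitemap in the sitemaps.org format. The function name `count_urls_by_subfolder` and the sample URLs are hypothetical, and this is not a description of how the Content Audit tool is implemented.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from urllib.parse import urlparse

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def count_urls_by_subfolder(sitemap_xml):
    """Count sitemap URLs by their first path segment (for example /blog/)."""
    root = ET.fromstring(sitemap_xml)
    counts = Counter()
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        path = urlparse(loc.text.strip()).path
        segments = [segment for segment in path.split("/") if segment]
        # Pages directly under the root are grouped as "/".
        folder = f"/{segments[0]}/" if len(segments) > 1 else "/"
        counts[folder] += 1
    return counts


# Hypothetical sitemap used only for illustration.
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/crawl-errors</loc></url>
  <url><loc>https://example.com/blog/site-audit</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
</urlset>"""

counts = count_urls_by_subfolder(sitemap_xml)
print(dict(counts))
```

A breakdown like this helps you decide which subfolder to audit first, since large sections such as a blog usually hold most of the outdated content.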

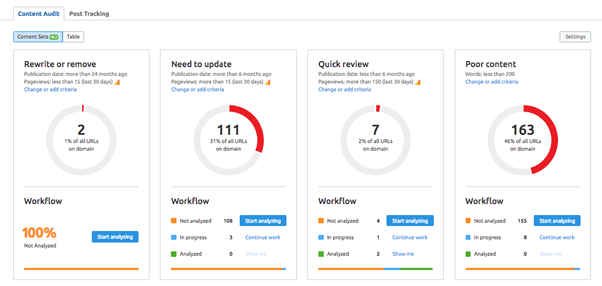

Once you have the audit, the tool will return a report:

You’ll have four categories to work through:

- Rewrite or remove: Pages published more than 24 months ago and viewed fewer than 15 times in the last month will be added here. You’ll have the option to delete the pages or refresh the content.
- Need to update: Pages published more than 6 months ago and viewed fewer than 15 times in the past month are slotted here. You likely need to refresh the content or find new ways to link internally to this content so it becomes more useful.
- Quick Review: These are pages published recently that have more than 150 visits in the past month. These pages are performing well, so take notes for future content or use them as inspiration to refresh older content.
- Poor Content: These pages have fewer than 200 words. This is too few words to be effective with Google. Try repurposing the content for social media or expanding it to at least 600 words.

Key Takeaways

The world of SEO is competitive and you’ll need every advantage you can get. Give yourself a leg up on the competition by addressing key errors that may be impacting your site.

Don’t be overwhelmed if our audit tool returns lots of errors. Take it step by step, fix each error in a logical and steady way, and run subsequent audits to ensure your work is making an impact.

For a more in-depth guide to crawlability errors, check out our piece on fixing crawlability issues.

