We’ve all been there, whether we’ve been an SEO for weeks or decades — staring at a website, knowing there are issues slowing it down and keeping it from the top of results pages. Just looking at the site, you can tick off a few changes that need to be made: Perhaps the title tag on the homepage doesn’t follow SEO best practices, or the navigation looks like something you need both hands to maneuver.

A technical SEO audit isn’t easy; it’s a puzzle with lots of evolving pieces. The first time you face an audit, it can seem like there’s just too much to do. That’s why we’ve put together this detailed guide.

Follow these steps to run a technical audit using Semrush tools. The guide is especially helpful for beginners who want a step-by-step reference to make sure they don’t miss anything major. We’ve broken the process into 15 steps so you can check them off as you go.

When you perform a technical SEO audit, you want to check and address issues with:

1. How to Spot and Fix Indexation and Crawlability Issues

2. How to Address Common Site Architecture Issues

3. How to Audit Canonical Tags and Correct Issues

4. How to Fix Internal Linking Issues on Your Site

5. How to Check for and Fix Security Issues

6. How to Improve Site Speed

7. How to Discover the Most Common Mobile-Friendliness Issues

8. How to Spot and Fix the Most Common Code Issues

9. How to Spot and Fix Duplicate Content Issues

10. How to Find and Fix Redirect Errors

11. Log File Analysis

12. On-Page SEO

13. International SEO

14. Local SEO

15. Additional Tips

Semrush’s Site Audit Tool should be a major player in your audit. With this tool, you can scan a website and receive a thorough diagnosis of your website’s health. There are other tools, including the Google Search Console, that you’ll need to use as well.

Let’s get started.

1. How to Spot and Fix Indexation and Crawlability Issues

First, we want to make sure Google and other search engines can properly crawl and index your website. This can be done by checking:

The Site Audit Tool

The robots.txt file

The sitemaps

Subdomains

Indexed versus submitted pages

Additionally, you’ll want to check canonical tags and the meta robots tag. You’ll find more about canonical tags in section three and meta robots in section eight.

Semrush’s Site Audit Tool

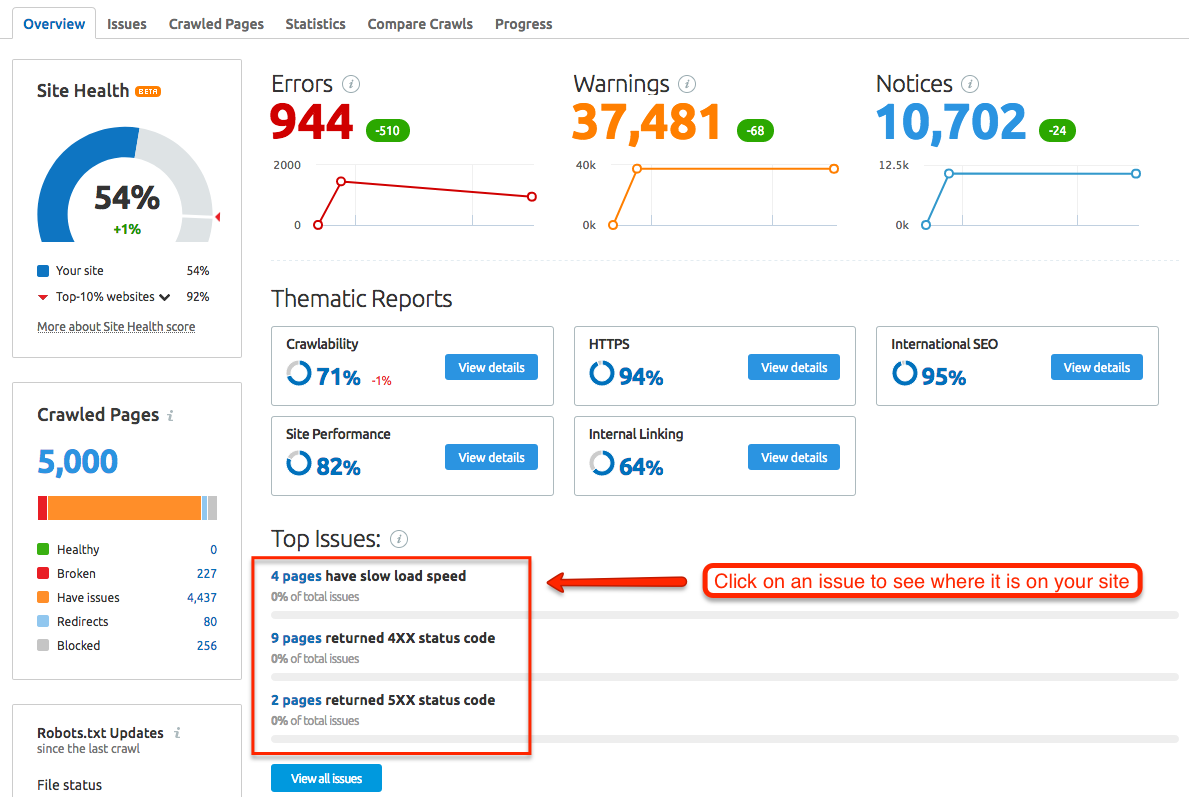

The Site Audit Tool scans your website and provides data about all the pages it’s able to crawl, including how many have issues, the number of redirects, the number of blocked pages, overall site performance, crawlability, and more. The report it generates will help you find a large number of technical SEO issues.

robots.txt

Check your robots.txt file in the root folder of the site: https://domain.com/robots.txt. You can use a validation tool online to find out if the robots.txt file is blocking pages that should be crawled. We’ll cover this file — and what to do with it — in the next section on site architecture issues.
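If you'd rather check this locally than rely on an online validator, Python's standard library can parse robots.txt rules. The sketch below is a minimal example: the robots.txt content, the URL list, and the `blocked_urls` helper are all hypothetical and stand in for your own file and pages.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, paste in the file served at /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /cart/
Sitemap: https://domain.com/sitemap.xml
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given user agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical list of pages you expect search engines to crawl.
PAGES_TO_CHECK = [
    "https://domain.com/",
    "https://domain.com/products",
    "https://domain.com/account/login",
]

for url in blocked_urls(ROBOTS_TXT, PAGES_TO_CHECK):
    print("Blocked by robots.txt:", url)
```

Any URL this prints is one the rules would keep a crawler away from, so compare the output against the pages you actually want in search results.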

Sitemap

Sitemaps come in two main flavors: HTML and XML.

An HTML sitemap is written so people can understand a site’s architecture and easily find pages. An XML sitemap is specifically for search engines: It guides the spider so the search engine can crawl a website properly.

It’s important to ensure all indexable pages are submitted in the XML sitemap. If you’re experiencing crawling or indexing errors, inspect your XML sitemap to make sure it’s correct and valid.

Like the robots.txt file, you’ll likely find an XML sitemap in the root folder:

https://domain.com/sitemap.xml

If it’s not there, a browser extension can help you find it, or you can use the following Google search commands:

site:domain.com inurl:sitemap

site:domain.com filetype:xml

site:domain.com ext:xml

If there’s no XML sitemap, you need to create one. If the existing one has errors, you’ll need to address your site architecture. We’ll detail how to tackle sitemap issues in the next section.
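If you do need to create a sitemap, most CMS platforms and plugins will generate one for you. As a rough illustration of what the file contains, here is a minimal sketch that builds one with Python's standard library. The `build_sitemap` helper and the page list are hypothetical, and the output includes only the required `loc` element for each URL.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of indexable pages.
PAGES = [
    "https://www.domain.com/",
    "https://www.domain.com/products",
    "https://www.domain.com/clothing/girls/footwear",
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Save the result as sitemap.xml in the site's root folder so it's reachable at the URL shown above.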

To fix crawlability and indexing issues, find or create your sitemap and make sure it has been submitted to Google.

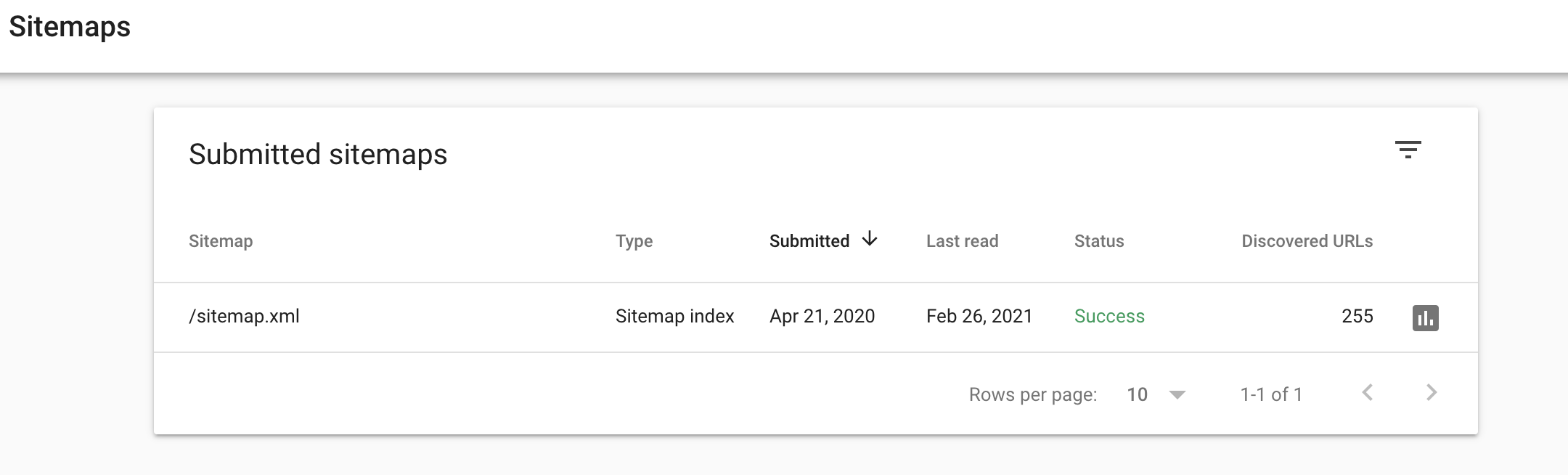

Submitting your sitemap means posting it on your website in an accessible location (not gated by a login or other page), then entering the sitemap’s URL in the Sitemaps report in Google Search Console and clicking “Submit.”

Check the Sitemap report in Google Search Console to find out if a sitemap has been submitted, when it was last read, and the status of the submission or crawl.

Your goal is for the Sitemap report to show a status of “Success.” Two other potential results, “Has errors” and “Couldn’t fetch,” indicate a problem.

Subdomains

In this step, you’re verifying your subdomains, which you can check by doing a Google search:

site:domain.com -www.

Note the subdomains and how many indexed pages exist for each subdomain. You want to check to see if any pages are exact duplicates or overly similar to your main domain. This step also allows you to see if there are any subdomains that shouldn’t be indexed.

Indexed Versus Submitted Pages

In the Google search bar, enter:

site:domain.com

or

site:www.domain.com

In this step, you’re making sure the number of indexed pages is close to the number of submitted pages in the sitemap.

What To Do with What You Find

The issues and errors you find when you check for crawlability and indexability can be put into one of two categories, depending on your skill level:

Issues you can fix on your own

Issues a developer or system administrator will need to help you fix

A number of the issues you can fix, especially those related to site architecture, are explained below. Consider the following two guides for more in-depth information:

For manageable fixes to some of the most common crawlability issues, read “How to Fix Crawlability Issues: 18 Ways to Improve SEO.”

If you’d like more information on the specifics of crawling and indexability, check out “What are Crawlability and Indexability: How Do They Affect SEO?”

2. How to Address Common Site Architecture Issues

You ran the Site Audit report, and you have the robots.txt file and sitemaps. With these in hand, you can start fixing some of the biggest site architecture mistakes.

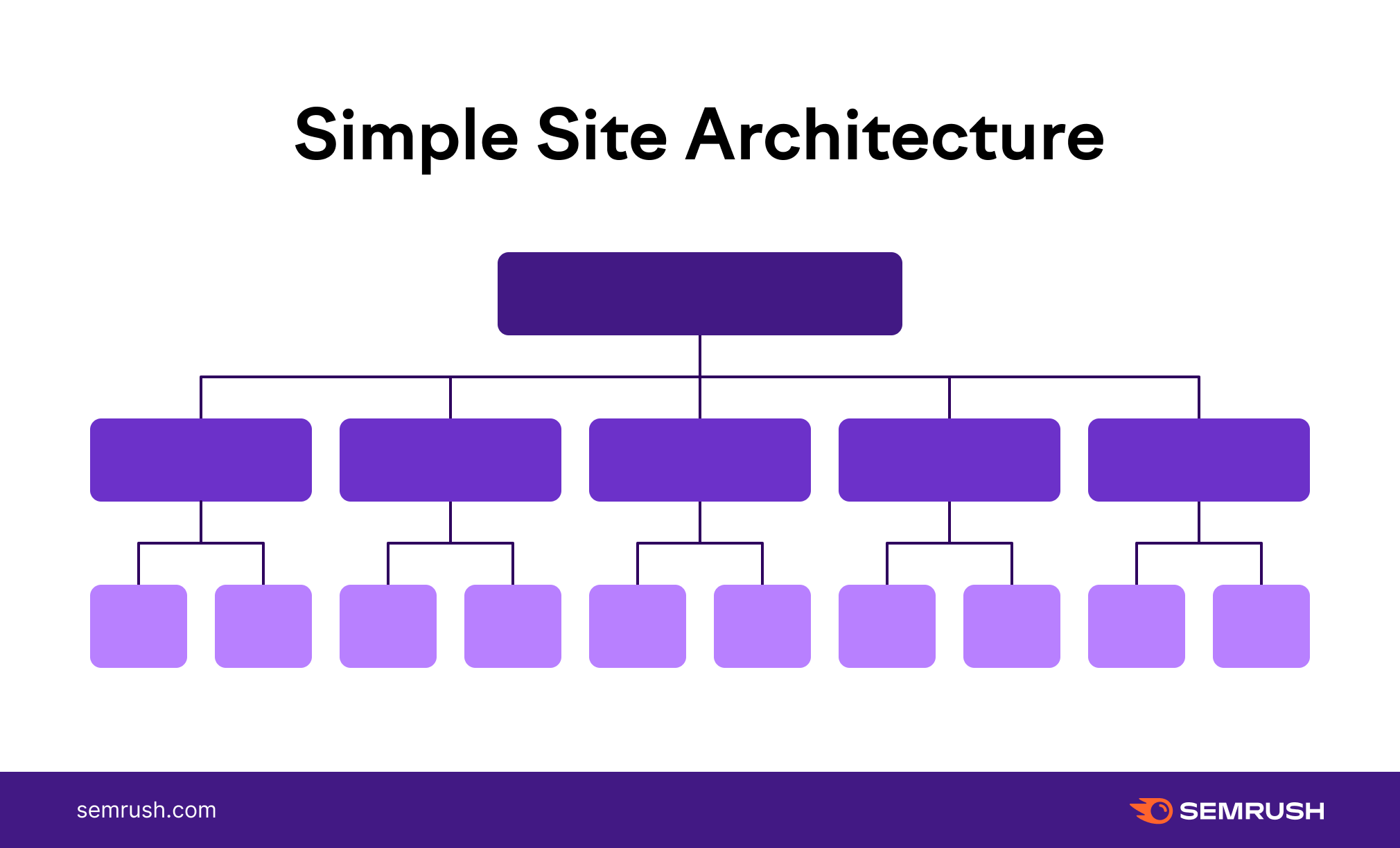

Site Structure

Site structure is how a website is organized. “A good site structure groups content and makes pages easy to reach in as few clicks as possible.” It’s logical and easily expanded as the website grows. Six signs of a well-planned and structured website:

It takes only a few clicks (ideally three) for a user to find the page they want from the homepage.

Navigation menus make sense and improve the user experience.

Pages and content are grouped topically and in a logical way.

URL structures are consistent.

Each page shows breadcrumbs. You have a few types of breadcrumbs to choose from, but the point is to help website users see how they’ve navigated to the page they’re on.

Internal links help users make their way through the site in an organic way.

It’s harder to navigate a site with messy architecture. Conversely, when a website is structured well and uses the elements listed above, both your users and SEO efforts benefit.

Site Hierarchy

When it takes 15 clicks to reach a page from the homepage, your site’s hierarchy is too deep. Search engines consider pages deeper in the hierarchy to be less important or relevant.

Conduct an analysis to regroup pages based on keywords and try to flatten the hierarchy. Making these types of changes will likely change URLs and their structures and may also affect the navigation menus to reflect new top-level categories.
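To see how deep a hierarchy really is, you can compute each page's click depth from the homepage with a breadth-first search over the internal links. The sketch below assumes you already have a map of which pages link to which (from a crawl export, for example). The `SITE_LINKS` data and the three-click threshold are illustrative assumptions.

```python
from collections import deque


def click_depths(links, homepage):
    """Return the minimum number of clicks needed to reach each page from the homepage."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit is the shortest path in a BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/clothing", "/about"],
    "/clothing": ["/clothing/girls"],
    "/clothing/girls": ["/clothing/girls/footwear"],
    "/clothing/girls/footwear": ["/clothing/girls/footwear/sandals"],
}

depths = click_depths(SITE_LINKS, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print("Pages more than three clicks from the homepage:", too_deep)
```

Pages that show up in `too_deep` are candidates for regrouping or for a link from a higher-level category page.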

URL Structure

Like the website’s architecture, the site’s URL structure should be consistent and easy to follow. For example, if a website visitor follows the menu navigation for girls’ shoes:

Homepage > Clothing > Girls > Footwear

The URL should mirror the architecture.

domain.com/clothing/girls/footwear

Furthermore, other products should have similar URL structures. A dissimilar URL would indicate an unclear URL structure, such as:

domain.com/boysfootwear

Ideally, the site’s hierarchy, created by pillar pages and topic clusters, will be reflected in the URLs for the website.

domain.com/pillar/cluster-page-1/

This is called a URL silo, and it helps maintain topical relevance within a site’s subdirectory.

Sitemap Fixes

Take a look at the HTML and XML sitemaps you found in the first step. In the Google Search Console, look under:

Index > Sitemaps

This will flag errors you can fix. For example, to adhere to Google’s requirements, your sitemap can’t be larger than 50MB uncompressed and can’t have more than 50,000 URLs.

Additionally, check that it only includes the proper protocol (HTTPS or HTTP) and uses the full URL you want the bot to index. For example, if the URL is:

https://www.domain.com/products

then that’s what should be listed in the sitemap, not domain.com/products or /products. The sitemap helps the bot find pages, but the pages won't be crawled if the instructions aren’t clear.

Double-check the sitemaps don’t include pages that you don't want in SERPs, such as login pages, customer account pages, or gated content. On the other hand, every page you want indexed by search engines must be included in the XML sitemap.
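Several of these checks are easy to script. The sketch below is one possible approach using Python's standard library: it tests the size and URL-count limits mentioned above and flags entries that aren't absolute URLs or that use an unexpected protocol. The `audit_sitemap` helper and the sample sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed


def audit_sitemap(xml_text, expected_scheme="https"):
    """Return a list of human-readable problems found in an XML sitemap."""
    problems = []
    raw = xml_text.encode("utf-8")
    if len(raw) > MAX_BYTES:
        problems.append("sitemap is larger than 50MB uncompressed")

    root = ET.fromstring(raw)
    # Match <loc> with or without the sitemap namespace prefix.
    locs = [
        (element.text or "").strip()
        for element in root.iter()
        if element.tag == "loc" or element.tag.endswith("}loc")
    ]
    if len(locs) > MAX_URLS:
        problems.append("sitemap lists more than 50,000 URLs")

    for loc in locs:
        parts = urlparse(loc)
        if not parts.scheme or not parts.netloc:
            problems.append(f"not an absolute URL: {loc}")
        elif parts.scheme != expected_scheme:
            problems.append(f"unexpected protocol: {loc}")
    return problems


# Hypothetical sitemap containing one good entry and two bad ones.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.domain.com/products</loc></url>
  <url><loc>/products</loc></url>
  <url><loc>http://www.domain.com/about</loc></url>
</urlset>
"""

for problem in audit_sitemap(SAMPLE_SITEMAP):
    print(problem)
```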

robots.txt Fixes

Sitemaps help users and search engine spiders find and navigate your website. The robots.txt file helps you exclude parts of your site from being crawled with the disallow command.

You found your robots.txt file earlier. You can also find it by running the Site Audit Tool, which will allow you to open the file directly from the report and reveal any formatting errors. You can also use the testing tool in Google Search Console.

As you review the site’s robots.txt file, note that it should perform the following actions:

Point search engine bots away from private folders

Keep bots from overwhelming server resources

Specify the location of your sitemap

Go through the file and check that all the disallow rules are used correctly. Should you find an issue, it may be a matter of protecting a page with a password or using a redirect to let the search engine know the page has moved. You could also add or update a meta robots tag or canonical tag.

3. How to Audit Canonical Tags and Correct Issues

Canonical tags are used to point out the “master page” that the search engine needs to index when you have pages with exact-match or similar content. They prevent search engines from indexing the wrong page by telling the bot which page to display in search results.

In the Site Audit report, you’ll potentially see several alerts related to canonical issues. These issues can affect how search engines index your site.

A canonical tag looks like this:

<link rel="canonical" href="https://www.domain.com/page-a/" />

The canonical tag should be included in the head of a page’s HTML as a signal: When the bot sees the tag, it knows which URL to treat as the version to index.

Best practices for canonical URLs include:

Use only one canonical URL per page. The page shouldn’t direct a bot to multiple other pages, similar to how directions at an intersection can’t be “go both left and right.”

Use the correct domain protocol: HTTPS or HTTP.

Pay attention to how your URLs end: with a trailing slash or without.

Specify whether you want the www or the non-www version of the URL indexed.

Write the absolute URL in the canonical tag, not a relative URL.

Include a URL in the canonical tag that has no redirects (such as a 301) and is the direct target. It needs to result in a 200 OK status.

Tag only legitimate duplicate or near-identical content.

Be certain exact duplicate pages or pages with nearly identical content have the same canonical tag.

Include canonical tags in Accelerated Mobile Pages (AMPs).
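A couple of these best practices can be spot-checked with a short script. The sketch below uses Python's built-in HTML parser to confirm that a page has exactly one canonical tag and that it holds an absolute URL. The `CanonicalCollector` class, the `audit_canonical` helper, and the sample markup are hypothetical, and the sketch doesn't check redirects or status codes.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalCollector(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonicals.append(attributes.get("href") or "")


def audit_canonical(html):
    """Return a list of problems with the page's canonical tags."""
    collector = CanonicalCollector()
    collector.feed(html)
    problems = []
    if len(collector.canonicals) != 1:
        problems.append(f"expected 1 canonical tag, found {len(collector.canonicals)}")
    for href in collector.canonicals:
        parts = urlparse(href)
        if not parts.scheme or not parts.netloc:
            problems.append(f"canonical is not an absolute URL: {href}")
    return problems


# Hypothetical page markup.
GOOD_PAGE = '<head><link rel="canonical" href="https://www.domain.com/page-a/" /></head>'
RELATIVE_PAGE = '<head><link rel="canonical" href="/page-a/" /></head>'

print(audit_canonical(GOOD_PAGE))
print(audit_canonical(RELATIVE_PAGE))
```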

Canonical tags are one of the most important tools you can use in SEO. They help ensure your site is crawled and indexed correctly and, when used properly, can often improve your rankings.

4. How to Fix Internal Linking Issues on Your Site

As you improve your site’s structure and make it easier for both search engines and users to find content, you’ll need to check the health and status of the site’s internal links.

Your site has two primary types of internal links:

Navigational: Often found in the header, footer, or sidebar

Contextual: Included within the content of the page

A third type, breadcrumbs, is used less often but can be an excellent addition to a website.

Refer back to the Site Audit report and click “View details” next to your Internal Linking score. In this report, you’ll be alerted to two types of issues:

Orphaned pages: These pages have no links leading to them. That means you can’t gain access to them via any other page on the same website. Even if they’re listed in your sitemap, they may not be indexed by search engines.

Pages with high click depth: The farther away a page is from the homepage, the higher its click depth and the lower its value to search engines.
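If you have a list of the pages in your sitemap and a map of internal links, you can find orphaned pages yourself with a simple set comparison. This is only a sketch of the idea, not how the Site Audit Tool works internally. The `find_orphans` helper and the sample data are hypothetical.

```python
def find_orphans(sitemap_pages, links, homepage="/"):
    """Return sitemap pages that no other page links to."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    return sorted(
        page for page in sitemap_pages
        if page != homepage and page not in linked_to
    )


# Hypothetical data: pages listed in the sitemap and the internal links found in a crawl.
SITEMAP_PAGES = ["/", "/products", "/blog", "/blog/old-announcement"]
INTERNAL_LINKS = {
    "/": ["/products", "/blog"],
    "/blog": ["/products"],
    "/blog/old-announcement": ["/blog/old-announcement"],
}

print(find_orphans(SITEMAP_PAGES, INTERNAL_LINKS))
```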

You’ll also see a breakdown of the page’s internal link issues:

Errors: Major issues that need to be addressed first, such as broken internal links.

Warnings: Problems, including broken external links and nofollow attributes in outgoing internal links.

Notices: Issues you should work on after handling the errors and warnings, including orphaned pages and permanent redirects.

You can also look for pages that have linking issues but are still being indexed by checking in Google Analytics. Find the pages with the least views and add them to your list of pages to assess.

As you sort through your pages with the above issues, many will be a fairly easy or quick fix. The Site Audit report has helpful tips for fixing each internal linking problem.

Ensure internal links:

Link directly to indexable pages

Link to pages that don’t have redirects

Provide relevant and helpful information for users and search engines — not random or unnecessary information

Your site’s internal linking (and external, for that matter) needs to use appropriate anchor text that uses keywords and lets users know exactly what to expect when they reach the hyperlinked page. What’s helpful for users is often also helpful for search engine bots.

Of course, you may be fixing issues that could have been avoided. To make the site as helpful and as easy to navigate as possible, talk to the people involved in content or page creation.

Make sure they understand the need for contextual links and how to create new pages with SEO in mind. This may help reduce internal linking issues in the future.

5. How to Check for and Fix Security Issues

Data transferred over Hypertext Transfer Protocol (HTTP) is not encrypted, meaning a third-party attacker may be able to steal information. For that reason, your website’s users shouldn’t be asked to submit payment or other sensitive information over HTTP.

The solution is to move your website to a secure server on the HTTPS protocol, which uses a secure certificate called an SSL certificate from a third-party vendor to confirm the site is legitimate.

HTTPS displays a padlock next to the URL to build trust with users. HTTPS relies on transport layer security (TLS) to ensure data isn’t tampered with after it’s sent.

When moving to a secure server, many website owners make common mistakes. However, our Site Audit Tool checks for many issues that can come up, including these 10 problems.

Expired certificate: Lets you know whether your security certificate needs to be renewed.

Certificate registered to the wrong domain name: Tells you if the registered domain name matches the one in your address bar.

Old security protocol version: Informs you if your website is running an old SSL or TLS protocol.

Non-secure pages with password inputs: Warns you if a page that asks for a password is served without HTTPS, which makes many users doubt the site’s security and leave.

No server name indication: Lets you know if your server supports SNI, which allows you to host multiple certificates at the same IP address to improve security.

No HSTS server support: Checks the server header response to make sure HTTP Strict Transport Security is implemented, which makes your site more secure for users.

Mixed content: Determines if your site contains any unsecure content, which can trigger a “not secure” warning in browsers.

Internal links to HTTP pages: Gives you a list of links leading to vulnerable HTTP pages.

No redirects or canonicals to HTTPS URLs from HTTP versions: Lets you know if search engines are indexing an HTTP and HTTPS version of your site, which can impact your web traffic because pages can compete against each other in search results.

HTTP links in the sitemap.xml: Lets you know if you have HTTP links in your sitemap.xml, which may lead to incomplete crawls of your site by search engines.
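Two of the problems above, mixed content and internal links to HTTP pages, come down to finding `http://` references in a page's HTML. The sketch below shows one way to spot them with Python's built-in HTML parser. The class name, the helper, the list of resource tags, and the sample markup are assumptions made for illustration, and a real audit would cover more tags and attributes.

```python
from html.parser import HTMLParser


class InsecureReferenceFinder(HTMLParser):
    """Separate http:// resources (mixed content) from http:// links."""

    # Tag/attribute pairs that load a resource into the page (not exhaustive).
    RESOURCE_ATTRS = {
        ("img", "src"), ("script", "src"), ("iframe", "src"),
        ("video", "src"), ("audio", "src"), ("source", "src"),
    }

    def __init__(self):
        super().__init__()
        self.mixed_content = []
        self.http_links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if not value or not value.lower().startswith("http://"):
                continue
            if (tag, name) in self.RESOURCE_ATTRS:
                self.mixed_content.append(value)
            elif tag == "a" and name == "href":
                self.http_links.append(value)


def find_insecure_references(html):
    """Return (mixed_content_urls, http_link_urls) for a page's HTML."""
    finder = InsecureReferenceFinder()
    finder.feed(html)
    return finder.mixed_content, finder.http_links


# Hypothetical markup from a page served over HTTPS.
SAMPLE_PAGE = """
<img src="http://domain.com/logo.png">
<script src="https://domain.com/app.js"></script>
<a href="http://domain.com/old-page">Old page</a>
<a href="https://domain.com/new-page">New page</a>
"""

mixed, links = find_insecure_references(SAMPLE_PAGE)
print("Mixed content:", mixed)
print("Links to HTTP pages:", links)
```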

Semrush’s HTTPS Implementation report in the Site Audit Tool gives you an overview of your site’s security and advises you how to fix all the issues above.

6. How To Improve Site Speed

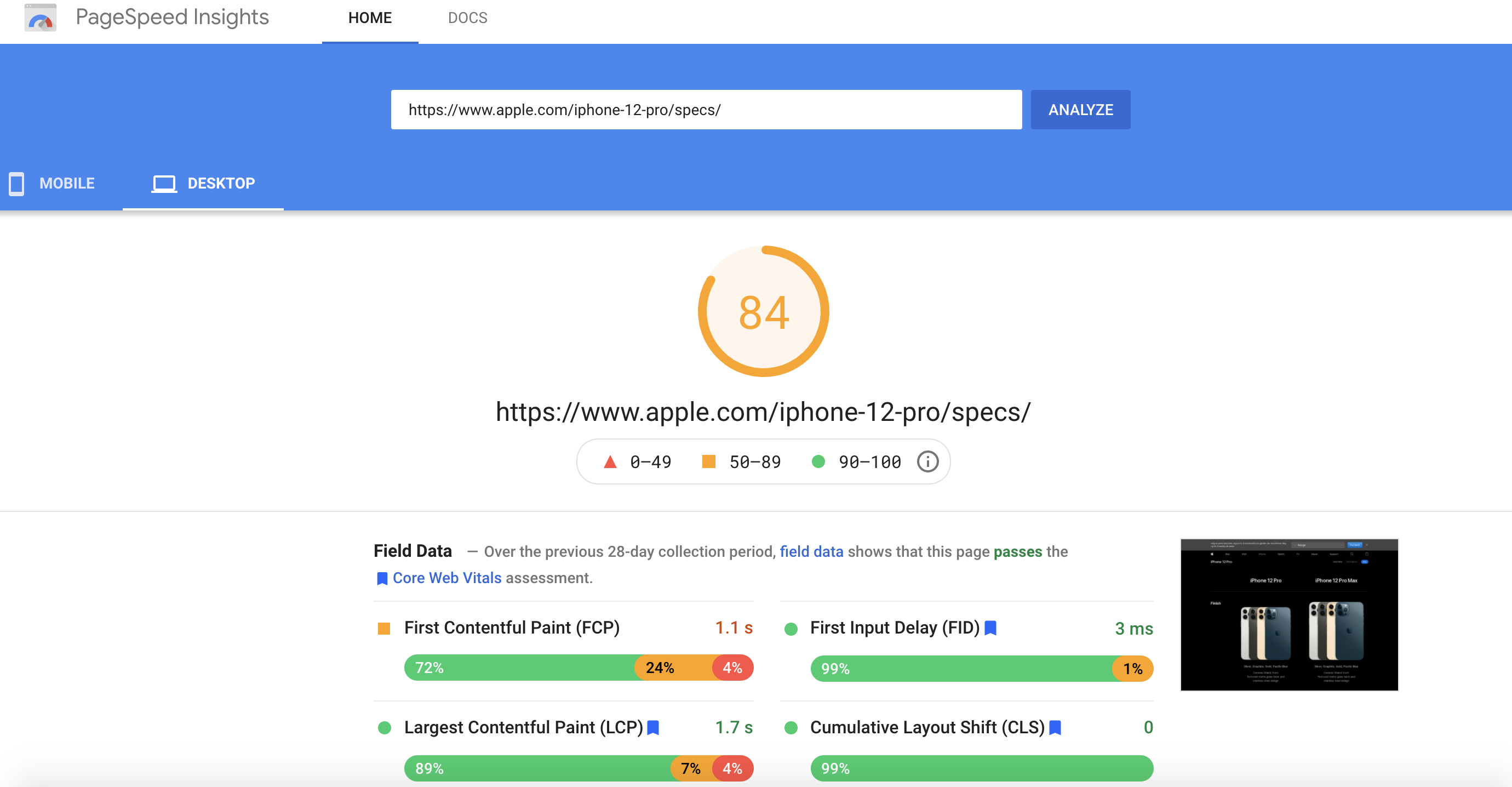

Site speed directly impacts the user experience and is a factor in search engine rankings. When you audit a site for speed, you have two data points to consider:

Page speed: How long it takes one webpage to load

Site speed: The average page speed for a sample set of page views on a site

Improve page speed, and your site speed improves. This is such an important task that Google has a tool specifically made to address it: the PageSpeed Insights analyzer. The score it gives is based on a few metrics, including page load time.

Another tool worth looking into is the Core Web Vitals extension for Google Chrome. It measures pages based on three key page speed metrics that represent the perceived user experience. With PageSpeed Insights, this extension, and the Site Audit report, you’ll have an in-depth look at your website’s performance.

The Site Audit report will help you find and fix performance issues with:

Large HTML page size

Redirect chains and loops

Slow page load speed

Uncompressed pages

Uncompressed JavaScript and CSS files

Uncached JavaScript and CSS files

Too large JavaScript and CSS total size

Too many JavaScript and CSS files

Unminified JavaScript and CSS files

Slow average document interactive time

To increase your PageSpeed Insights rating and overall site speed, your first step is to optimize images. Since images are often a major cause of slow page loads, you should optimize them without reducing their quality if possible.

You have a few options when it comes to image optimization, including sizing images to their display size (not their actual size) and compressing them in order to avoid forcing a page to load a 1MB image, for example.

After images, focus on JavaScript and CSS optimization. Use minification, which “removes whitespace and comments to optimize CSS and JS files.” To speed up your website further:

Clear up redirects

Use browser caching

Reduce the file size of media, including video and GIFs

Minimize HTTP requests

Choose a hosting provider that can adequately manage your website’s size

Use a content delivery network (CDN)

Compress HTML, JavaScript, and CSS files with Gzip compression
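To get a feel for how much Gzip compression can save, you can compress a file locally and compare sizes. The sketch below uses Python's `gzip` module on a made-up, highly repetitive stylesheet, so the savings it reports will be larger than you'd see on typical files. In production, compression is normally switched on in the web server or CDN rather than in a script.

```python
import gzip


def gzip_savings(text):
    """Return (original_size, compressed_size) in bytes for the given text."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(raw), len(compressed)


# Hypothetical stylesheet: the same rule repeated many times.
SAMPLE_CSS = ".product-card { margin: 0 auto; padding: 16px; }\n" * 200

original_size, compressed_size = gzip_savings(SAMPLE_CSS)
saved = 100 * (1 - compressed_size / original_size)
print(f"{original_size} bytes -> {compressed_size} bytes ({saved:.0f}% smaller)")
```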

7. How to Discover the Most Common Mobile-Friendliness Issues

As of February 2021, more than half (56 percent) of web traffic happens on mobile devices. Google plans to implement mobile-first indexing for all websites by the end of March 2021.

At that point, the search engine will index the mobile version of all websites rather than the desktop version. This is why it’s imperative you fix mobile-friendliness issues.

When optimizing for mobile, you can focus on responsive web design (RWD) to make webpages render well on various devices, or you can use AMPs, which are stripped-down versions of your main website pages.

AMPs load quickly on mobile devices because Google runs them from its cache rather than sending requests to your server.

AMPs improve mobile site speed, but they have a few downsides. Website owners can’t earn ad revenue and typically can’t access robust, easy-to-implement analytics from them. Plus, website content may vary on desktop and mobile.

If you use AMPs, it’s important to audit them regularly to make sure you’ve implemented them correctly to boost your mobile visibility. The Site Audit Tool helps business subscribers audit AMPs and find specific fixes.

The tool tests your AMP pages for 33 issues divided into three categories:

AMP HTML issues

AMP style and layout issues

AMP templating issues

In addition, the tool will detect if the AMP page needs a canonical tag.

When you review your AMP report, hover over the issue description tag to find out what’s wrong, then over each issue to discover how to fix it.

8. How to Spot and Fix the Most Common Code Issues

It doesn’t matter what a website looks like. Behind the flashy interface and well-designed pages is code. That code is an entirely separate language and must have proper syntax and punctuation just like any other language. As finicky readers, Google and other search engines may not bother to index your pages if there are errors in your website’s code.

During your technical SEO audit, keep an eye on several different parts of website code and markup:

HTML

CSS (.css)

JavaScript (.js)

Meta Tags

The Open Graph protocol, including Twitter Card markup

Schema.org

JavaScript

Google crawls websites coded with HTML in a straightforward way, but when the page has JavaScript, it takes more effort for search engines to crawl the page.

CSS and JavaScript files aren’t indexed by a search engine — they’re used to render the page. However, if Google can’t get them to render, it won’t index the page properly.

Check to make sure a page that uses JavaScript is rendering properly by using Google Search Console. Find the URL Inspection Tool, enter the URL, and then click “Test URL.”

Once the inspection is over, you can view a screenshot of the page as Google renders it to see if the search engine is reading the code correctly. Check for discrepancies and missing content to find out if anything is blocked, has an error, or times out.

If a JavaScript page is not rendered correctly, it may be because resources are blocked in the robots.txt file. Ensure the following code is in the file:

User-Agent: Googlebot
Allow: /*.js
Allow: /*.css

If that code is already in place, verify your scripts and where they are in the code. There may be a conflict with another script on the page because of how the scripts are ordered or optimized.

Once you know Google can render the page, you can check to see that the page is being indexed. If it isn’t, our JavaScript SEO guide can help you diagnose and fix specific JavaScript-specific indexing problems.

Meta Tags

A meta tag gives a search engine bot additional data about a piece of data (hence the name). These tags are used within your page’s HTML. Four types you should understand well are:

Title tags

Meta descriptions

Robots meta tags

Viewport meta tags

In our post about on-page SEO, we discuss title tags and their importance. The meta description sits right under the title in SERPs and while it's not directly tied to Google’s ranking algorithm, it does help people understand what they’ll see when they click through to your site from a SERP.

Robots meta tags are used in the source code and provide instructions for search engine crawlers. Some of the most useful parameters include:

Index

Noindex

Follow

Nofollow

Noarchive
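When a page unexpectedly drops out of the index, it's worth checking which of these directives it actually carries. The sketch below reads the robots meta tag from a page's HTML using Python's built-in parser. The `RobotsMetaCollector` class, the `robots_directives` helper, and the sample markup are hypothetical, and the sketch ignores bot-specific tags and HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaCollector(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of robots directives declared in the page's meta tags."""
    collector = RobotsMetaCollector()
    collector.feed(html)
    return collector.directives


# Hypothetical page head.
SAMPLE_HEAD = '<head><meta name="robots" content="noindex, follow"></head>'

directives = robots_directives(SAMPLE_HEAD)
if "noindex" in directives:
    print("This page asks search engines not to index it.")
```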

The viewport content tag “alters the page size automatically as per the user’s device” when you have a responsive design.

Open Graph, Schema, and Structured Data

Within your page code, using special tags and microdata sends signals to search engines that specify what a page is about. They can make it easier for search engines to index and categorize pages correctly.

Open Graph tags are code snippets that control the content that shows up when users share a URL on select social media sites. Twitter Cards have their own markup.

Schema is a shared collection of markup language that web developers can use to help search engines produce content-rich results for a webpage on SERPs.

For webpages you think could display as an enriched search result on a SERP, you can use specific schema markup in order to compete for this position. The goal of using structured data is to help search engines better understand your site’s content, which can result in your site being shown in rich results.

One popular example of enriched search results is the featured snippet. “How to Add FAQ Schema to Any Page Using Google Tag Manager [Easy Guide]” explains the process of creating an FAQ page on your website with the goal of having it display directly on Google’s SERP.

If you decide to try to win an enriched search result, use the Rich Results Test to check your webpage’s code to “see which rich results can be generated by the structured data it contains.”
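For reference, FAQ markup is usually written as JSON-LD using the `FAQPage`, `Question`, and `Answer` types from Schema.org. The sketch below generates that markup with Python's `json` module. The `faq_jsonld` helper and the sample question are hypothetical, and you should still run the result through the Rich Results Test before relying on it.

```python
import json


def faq_jsonld(questions_and_answers):
    """Return a JSON-LD script tag describing an FAQ page."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions_and_answers
        ],
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical FAQ content.
FAQ_ITEMS = [
    ("What is a technical SEO audit?",
     "A review of the technical issues that affect how a site is crawled and indexed."),
]

faq_markup = faq_jsonld(FAQ_ITEMS)
print(faq_markup)
```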

The key to finding errors in your website’s code is practice. As you find pages that are flagged with errors and warnings in the Site Audit report, and when you see anomalies in your Google Search Console, check your code.

Diagnosing technical SEO issues goes much faster and is far less frustrating when your code is clean and correct.

9. How to Spot and Fix Duplicate Content Issues

When your webpages contain identical information or nearly identical information, it can lead to several problems.

An incorrect version of your page may display in SERPs.

Pages may not perform well in SERPs, or they may have indexing problems.

Your core site metrics may fluctuate or decrease.

Search engines may be confused about your prioritization signals, leading them to take unexpected actions.

Perform a content audit to ensure your site isn’t experiencing duplicate content issues. You can use pillar pages and topic clusters to create organized content that boosts your site’s authority.

To improve your site’s taxonomy, pay careful attention to how you use categories and tags, and make sure you do so in an intentional way. Also pay attention to your site’s pagination, meaning the sequencing of archive pages.

If the Site Audit tool detects pages with 80 percent identical content, it will flag them as duplicate content. It may happen for three common reasons: there are multiple versions of URLs, the pages have sparse content, or there are errors in the URL search parameters.
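To get an intuition for what an 80 percent threshold means, you can compare the text of two pages yourself. The sketch below uses `difflib.SequenceMatcher` from Python's standard library on a word-by-word basis. This is an assumption made for illustration: we don't describe here how the Site Audit tool measures similarity, and its method may differ. The sample page copy is made up.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.8  # mirrors the 80 percent figure above


def similarity(text_a, text_b):
    """Return a 0-1 similarity score based on matching runs of words."""
    return SequenceMatcher(None, text_a.split(), text_b.split()).ratio()


def is_duplicate(text_a, text_b):
    """Return True when two texts meet or exceed the duplicate threshold."""
    return similarity(text_a, text_b) >= DUPLICATE_THRESHOLD


# Hypothetical page copy.
PAGE_A = "Our red running shoes are light durable and ready to ship"
PAGE_B = "Our blue running shoes are light durable and ready to ship"
PAGE_C = "Read the returns policy before you place an order"

print("A vs B duplicate?", is_duplicate(PAGE_A, PAGE_B))
print("A vs C duplicate?", is_duplicate(PAGE_A, PAGE_C))
```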

Multiple Versions of URLs

One of the most common reasons a site has duplicate content is if you have several versions of the URL. For example, a site may have an HTTP version, an HTTPS version, a www version, and a non-www version.

To fix this issue:

Add canonical tags to duplicate pages. These tags tell search engines to crawl the page with the main version of your content.

Set up a 301 redirect from your HTTP pages to your HTTPS pages. This redirect ensures users only access your secure pages.

Pages with Sparse Content

Another reason the Site Audit may find duplicate content is because you have pages with little content on them. If you have identical headers and footers, the tool may flag a page with only one or two sentences of copy. To fix this problem, add unique content to these pages to help search engines identify them as non-duplicate content.

URL Search Parameters

URL search parameters are extra elements of a URL used to help filter or sort website content. You can identify them because they have a question mark and equal sign.

They can create copies of a page, such as a product page. To fix the problem, run parameter handling through Google Search Console and Bing Webmaster Tools to make it clear to these search engines not to crawl duplicate pages.
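To see which parameterized URLs collapse into the same underlying page, you can strip the parameters and group what's left. The sketch below does this with `urllib.parse`. The `strip_parameters` and `group_by_page` helpers, the optional `keep` list, and the sample URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_parameters(url, keep=()):
    """Return the URL without its query parameters, except any named in keep."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key in keep
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_by_page(urls, keep=()):
    """Group URLs that point to the same page once parameters are removed."""
    groups = defaultdict(list)
    for url in urls:
        groups[strip_parameters(url, keep)].append(url)
    return dict(groups)


# Hypothetical crawled URLs.
CRAWLED_URLS = [
    "https://domain.com/shoes",
    "https://domain.com/shoes?color=red",
    "https://domain.com/shoes?color=red&sort=price",
    "https://domain.com/boots?sort=price",
]

for page, variants in group_by_page(CRAWLED_URLS).items():
    print(page, "has", len(variants), "URL variant(s)")
```

Pass parameter names in `keep` for anything that genuinely changes the content, such as a page number.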

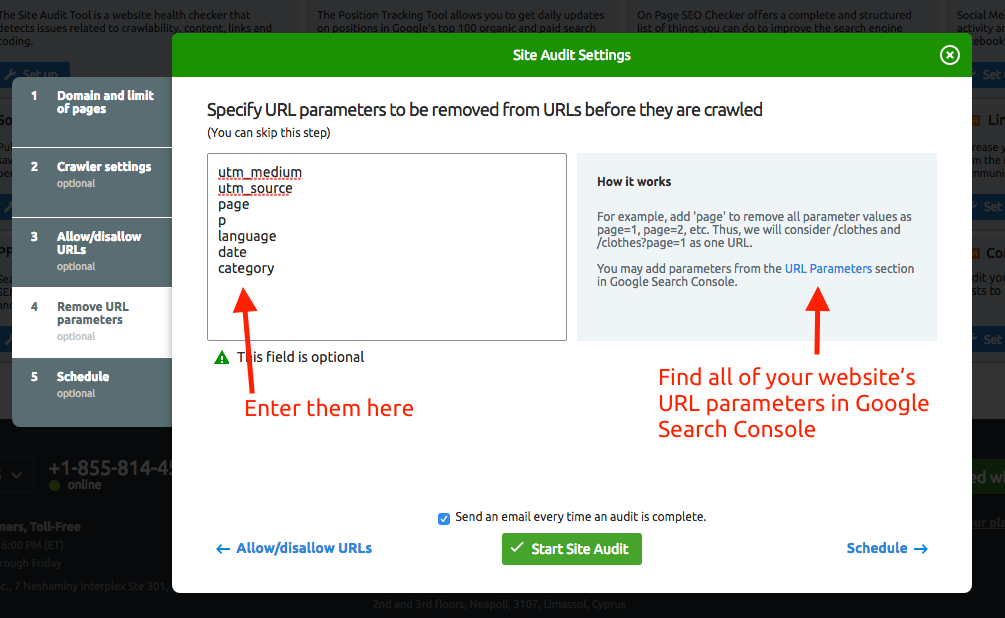

Pro tip: When you set up a site audit on Semrush, you can remove URL parameters before they're crawled as well.

Once you run Semrush’s Site Audit tool, you’ll be able to identify duplicate content and fix technical issues that can cause search engines to treat your pages as duplicates.

10. How to Find and Fix Redirect Errors

Page loading and server errors affect the user experience and can create issues when a search engine tries to crawl your site. After you run your Site Audit, you’ll see a list of your top issues; click the button below, “View all issues,” to see your redirect errors and status codes.

3xx Status Codes

These status codes indicate that when users and search engines land on this page, they are being redirected to a new page.

301: The redirect is permanent. This is for identical or close-match content that’s been moved to a new URL, and is preferred because it passes SEO value to the new page.

302: This indicates a temporary redirect for identical or close-match content. This could be used in instances where you’re A/B testing a new page template, for example.

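307: This also indicates a temporary redirect, but unlike a 302 it requires the request method to stay the same between the source and the destination. Chrome also shows an internal 307 when HSTS upgrades a request from HTTP to HTTPS. Like 302s, these redirects are best reserved for genuinely temporary moves.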

Flag any pages with multiple redirects, called a redirect chain, which is when more than one redirect exists between the original and final URL. Additionally, remove loops, which happen when the original URL forwards to itself. You can find a list of all the redirect chains and loops for your website in the Site Audit tool.
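Chains and loops are easy to detect once you have a map of which URL redirects to which. The sketch below follows a redirect map hop by hop and reports the full path plus whether it loops. The `trace_redirects` helper, the `max_hops` limit, and the sample redirect map are hypothetical, and no HTTP requests are made.

```python
def trace_redirects(start_url, redirects, max_hops=10):
    """Follow a redirect map from start_url; return (path, is_loop)."""
    path = [start_url]
    current = start_url
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        if current in path:  # we've been here before, so this is a loop
            path.append(current)
            return path, True
        path.append(current)
    return path, False


# Hypothetical redirect map: source URL -> destination URL.
REDIRECTS = {
    "/old": "/newer",
    "/newer": "/newest",
    "/a": "/b",
    "/b": "/a",
}

for url in ("/old", "/a"):
    path, is_loop = trace_redirects(url, REDIRECTS)
    hops = len(path) - 1
    label = "loop" if is_loop else ("chain" if hops > 1 else "single redirect")
    print(" -> ".join(path), f"({label})")
```

To fix a chain, point the first URL straight at the final destination so only one redirect remains.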

Redirects are a helpful tool in SEO and should be used when appropriate: when content has changed, that page is no longer available or up to date, or both.

4xx Status Codes

These errors indicate that a requested page can’t be accessed and are known as broken links. Common 4xx errors include:

403: Access is forbidden, which generally means a login is required.

404: The resource doesn’t exist and the link needs to be fixed.

410: The resource is permanently gone.

429: There are too many requests on the server in too short a time.

5xx Status Codes

5xx errors exist on the server side. They indicate that the server could not perform the request.

Go through your Site Audit report and fix pages that have unexpected statuses. Keep a list of pages that have a 403 or 301 status, for example, so you can make sure those statuses are intentional.

Also, check the pages that have 410 errors to ensure the resource is legitimately and permanently gone, and that there’s no valuable alternative to redirect traffic to.

11. Log File Analysis

This step helps you look at your website from the viewpoint of a Googlebot to understand what happens when a search engine crawls your site. Your website’s log file records information about every user and bot that visits your site, including these details.

The requested URL or resource

The HTTP status code of the request

The IP address the request came from

The time and date the request was made

The user agent making the request, for instance Googlebot

The method of the request

A log file analyzer can help you answer several questions about your website without analyzing your log file manually, including:

What errors did the tool find during the crawl?

What pages did it not crawl?

How efficiently is your crawl budget being spent?

What pages are the most crawled on your site?

Are there sudden increases in the amount of crawling?

Can you manage Googlebot’s crawling?

Answering these questions can help you refine your SEO strategy or resolve issues with the indexing or crawling of your webpages.

To use our Log File Analyzer Tool, you need a copy of your log file, which you can access on your server’s file manager in the control panel or via an FTP client. Once you find your log file, you need to take three steps.

Make sure it’s in the correct access.log format.

Upload the file to the log file analyzer.

Start the analyzer.

Once the log analyzer is finished, you’ll get a detailed report. In it you’ll see:

Bots: How many requests different search engine bots are making to your site each day

Status Codes: The breakdown of different HTTP status codes found per day

File Types: A breakdown of the different file types crawled each day

You can also look at your Hits by Pages report, which shows which folders or pages on your site have the most or fewest bot hits — and which pages are crawled most often.
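If you want a rough feel for what these breakdowns contain, the sketch below tallies bot requests, status codes, and hits per page from a handful of log entries. It is only an illustration of the idea, not how the Log File Analyzer works internally. The entries, the BOT_MARKERS table, and the function names are all hypothetical.

```python
from collections import Counter

# Hypothetical, already-parsed log entries; real ones would come from your log file.
entries = [
    {"url": "/", "status": 200, "user_agent": "Googlebot/2.1"},
    {"url": "/blog/", "status": 200, "user_agent": "Googlebot/2.1"},
    {"url": "/blog/", "status": 404, "user_agent": "bingbot/2.0"},
    {"url": "/blog/", "status": 200, "user_agent": "Mozilla/5.0 (Windows NT 10.0)"},
]

# Substrings used to spot crawlers in the user agent (illustrative, not exhaustive).
BOT_MARKERS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}

def bot_name(user_agent):
    """Return a bot label if the user agent looks like a known crawler, else None."""
    ua = user_agent.lower()
    for name, marker in BOT_MARKERS.items():
        if marker in ua:
            return name
    return None

def summarize(entries):
    """Count bot requests by bot, by status code, and by page."""
    bots, statuses, pages = Counter(), Counter(), Counter()
    for entry in entries:
        name = bot_name(entry["user_agent"])
        if name is None:
            continue  # skip visitors that do not look like bots
        bots[name] += 1
        statuses[entry["status"]] += 1
        pages[entry["url"]] += 1
    return bots, statuses, pages

bots, statuses, pages = summarize(entries)
print(bots.most_common())      # requests per bot
print(statuses.most_common())  # status codes served to bots
print(pages.most_common())     # most crawled pages first
```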

12. On-Page SEO

On-page SEO, also referred to as on-site SEO, is the process of optimizing content on your page to make it as discoverable for search engines as possible.

Because of the sheer abundance of content online, on-page SEO matters a great deal. Successful on-page SEO increases the odds that search engines will connect the relevance of your page to a keyword you’d like to rank for.

There are several key steps to optimize on-page SEO. As a starting point, look out for these common issues.

Lengthy Title Tags

A title tag is the title of a webpage that shows up in SERPs. Clicking on a title tag brings you to the corresponding page. Title tags not only tell searchers the topic of the page, they also tell search engines how relevant that page is to the query.

As a rough guideline, title tags should clock in at around 50 to 60 characters long (since Google can only display around 600 pixels of text in results pages) and should contain important keywords. You can check your title tag length (along with other aspects of your content) with Semrush’s SEO Writing Assistant.
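For a quick check across many pages, a small script can flag titles that fall outside this range. The sketch below is a simple illustration: the function name and labels are made up, and it counts characters as a stand-in for pixel width, which is what actually determines truncation.

```python
def check_title_length(title, min_chars=50, max_chars=60):
    """Classify a title by character count, using the rough 50 to 60 guideline."""
    length = len(title.strip())
    if length == 0:
        return "missing"
    if length > max_chars:
        return "too long"
    if length < min_chars:
        return "short"
    return "ok"

# Hypothetical titles for illustration.
titles = [
    "Home",
    "How to Run a Technical SEO Audit: A Step-by-Step Guide",
    "The Complete, Exhaustive, and Very Detailed Guide to Technical SEO Audits for Beginners",
]
for title in titles:
    print(check_title_length(title), "|", title)
```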

Missing H1s

Header tags (H1, H2, and so forth) help break up sections of text. Since lengthy chunks of text can be tough to read, headers help people scan and digest content. H1 tags serve as the most general heading, while descending headers help structure more specific content.

H1 tags help search engines determine the topic of any given content, so missing H1s will leave gaps in Google’s understanding of your website. Always remember this, too: There should only be one H1 tag on each page.

Duplicate Title and H1 Tags

Identical H1 and title tags can make a page look overly optimized, which can hurt your ranking. Rather than copy a title tag word for word when writing an H1, consider a related but unique header. You can also look at header tags as new opportunities to rank for other relevant keywords.
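The H1 and title checks described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, assuming you already have a page’s HTML as a string. The class and function names are hypothetical, and the Site Audit Tool’s own checks may work differently.

```python
from html.parser import HTMLParser

class HeadingAudit(HTMLParser):
    """Collect the text of the <title> tag and of every <h1> tag."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1s = []
        self._current = None  # "title", "h1", or None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._current = "title"
        elif tag == "h1":
            self._current = "h1"
            self.h1s.append("")

    def handle_endtag(self, tag):
        if tag in ("title", "h1"):
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1s[-1] += data

def audit_headings(html):
    """Return a list of H1 and title issues found in the given HTML."""
    parser = HeadingAudit()
    parser.feed(html)
    title = " ".join(parser.title.split())
    h1s = [" ".join(h.split()) for h in parser.h1s]
    issues = []
    if not h1s:
        issues.append("missing H1")
    elif len(h1s) > 1:
        issues.append("multiple H1s")
    if title and any(h.lower() == title.lower() for h in h1s):
        issues.append("H1 duplicates title")
    return issues

# Hypothetical page for illustration.
page = (
    "<html><head><title>Blue Widgets</title></head>"
    "<body><h1>Blue Widgets</h1></body></html>"
)
print(audit_headings(page))
```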

Thin Content

In the same way duplicate content can be problematic for SEO, so too can thin content — the type of content that provides little to no value to users or search engines.

Once you identify thin pages, improve them by either adding high-quality, unique content or by telling the crawler not to index the page (you can do this by adding a noindex directive in a robots meta tag).
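One rough way to shortlist candidates is to count the words on each page and note whether the page already asks not to be indexed. The sketch below is only a starting point: the 200-word threshold is an arbitrary assumption, not a figure from Google or Semrush, and word count alone says nothing about whether the content is valuable. The names are hypothetical.

```python
from html.parser import HTMLParser

class ThinContentAudit(HTMLParser):
    """Count words outside <script>/<style> and detect a robots noindex meta tag."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.words = 0
        self.noindex = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "meta":
            attributes = dict(attrs)
            name = (attributes.get("name") or "").lower()
            content = (attributes.get("content") or "").lower()
            if name == "robots" and "noindex" in content:
                self.noindex = True

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())

def audit_thin_content(html, min_words=200):
    """Flag a page as thin when it has fewer than min_words words (assumed threshold)."""
    parser = ThinContentAudit()
    parser.feed(html)
    return {
        "words": parser.words,
        "thin": parser.words < min_words,
        "noindex": parser.noindex,
    }

# Hypothetical page for illustration.
thin_page = "<html><body><p>Coming soon</p></body></html>"
print(audit_thin_content(thin_page))
```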

Check out our full on-page SEO checklist or use the Site Audit Tool to check your site’s on-page SEO health.

13. International SEO

If you have a website that needs to reach audiences in more than one country, your SEO efforts will include hreflang, geo-targeting, and other important international SEO elements.

hreflang

The Site Audit report gives you a score for International SEO and will alert you to issues with hreflang tags. You can also check these tags in Google Search Console with the International Targeting report.

The hreflang code tells search engines the page is for people who speak a specific language. The hreflang attribute signals search engines that a user querying in a particular language should be delivered results in that language instead of a page with similar content in another language. When you use this tag, put it as a link in the HTML head of the page.
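As an illustration of what those link elements look like, the sketch below builds them from a mapping of language codes to URLs. The example.com URLs and the function name are placeholders. The language and region codes follow the usual hreflang convention (a language code, optionally followed by a region code), and x-default marks the fallback page for users who match none of the listed versions.

```python
from html import escape

def hreflang_links(alternates):
    """Build one <link rel="alternate"> tag per language version of a page."""
    return [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in alternates.items()
    ]

# Placeholder URLs for illustration only.
alternates = {
    "en-us": "https://example.com/us/",
    "en-ca": "https://example.com/ca/",
    "fr-ca": "https://example.com/ca/fr/",
    "x-default": "https://example.com/",
}
print("\n".join(hreflang_links(alternates)))
```

Each language version of the page should carry the full set of tags, including one that points to itself, so that the versions reference each other.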

To troubleshoot issues related to hreflang, read “Auditing Hreflang Annotations: The Most Common Issues & How to Avoid Them.” This guide is so popular and helpful that Google links to it in its “Debugging hreflang errors” section.

Geo-Targeting and Additional International SEO Tips

In addition to checking hreflang tags, you should:

Conduct keyword research for additional countries with the Keyword Magic Tool

Set up tracking for specific locations with the Position Tracking Tool

Check for potential on-page improvements with the On Page SEO Checker

Some sites will also need to consider using a URL structure that shows a page or website is relevant to a specific country. For example, a website for Canadian users of a product may use:

domain.com/ca

or

domain.ca

Finally, if the website you’re auditing has a heavy, dedicated presence in two different languages or regions, consider hiring an SEO professional who speaks the language and knows the region to handle that version of the website.

Websites that use the search engine’s built-in “translate this page” function or use a translator app to simply copy content from one language to another will not offer as good a user experience and will likely see poor results on SERPs.

14. Local SEO

Local SEO is the optimization of a website for a product, service, or business for a location-specific query. Search engines show results based on the user’s location via:

IP address for desktop users

Geolocation for mobile users

For instance, if a person searches “Thai food,” the search engine will show local Thai restaurants based on the searcher’s IP address or geolocation. If your website does not include accurate or complete information and isn’t optimized for local SEO, community members may not easily find your site.

Google My Business (GMB)

A Google My Business Page serves as one of the most important tools for any business that promotes its services or products online. It displays the business’s contact information, business category, description, hours, services, menus (for restaurants), and more. It’s important to fill out as much as you can, as accurately as possible.

GMBs also allow people to:

Request quotes

Message or call the business directly

Book appointments

Leave reviews

Additionally, GMB pages allow you to see how your customers engage with your business by keeping track of calls, follows, bookings, and more. This page is of utmost importance for local SEO. For more in-depth information on setting up and using your GMB page, check out “The Ultimate Guide to Google My Business for 2021.”

Optimizing Your Website for Local SEO

Citation management and mobile-friendliness count as two of the most important ranking factors for local SEO.

Citation management: A local citation is any online mention — from websites to social platforms and apps — of a local company’s contact information. Correct and consistent local citations not only help people contact a business, but they can also impact search engine rankings and help a business rank on Google Maps. To ensure the consistency of your local citations, use our Listing Management Tool.

Mobile-friendliness: The mobile-friendliness of your site matters, as we discussed in section seven. To check your site’s mobile-friendliness, head to Domain Overview and enter the site name. At the top of the report, you can select Desktop or Mobile. Switch to 'Mobile' to view mobile analytics data, including a mobile-friendliness score.

Local Link Building

One helpful way to boost your local SEO: getting local blogs, local businesses, and/or industry websites to link to your site. Some old-fashioned networking in your community can eventually boost your company’s SERP performance. Try our Link Building Tool to round up prospects you may want to connect with.

Want to rank in more than one location? Follow the steps detailed in “On-Page Strategies for Local SEO: Ranking in Multiple Cities.”

15. Additional Tips

SEO managers use spreadsheets, pivot tables, and a host of tools to investigate and improve their websites. You, too, should stay as organized as possible to catch unexpected changes and be ready for inevitable algorithm updates. Noticing trends and staying on top of preventable issues will help you build a strategy for your website’s SEO efforts.

In the Site Audit report’s Compare Crawls tab, you can select specific crawls and analyze them to compare your results from two separate reports.

The “Progress” tab features an interactive line graph that showcases your website’s health over time. You can select specific errors, warnings, or notices to see how your site has progressed, or you can look at overall stats.

The key to effective SEO is to make it a team effort. When a company siloes content creators, website managers, SEO teams, and other departments, the website comes together piecemeal.

When everyone who contributes to a website works together, users and search engines can tell. The interface looks better, the user experience is superior, and search engines can properly index and crawl your site.

Stay organized and keep your team updated on SEO basics. The more you work together to keep your website running smoothly, the better your technical SEO audits will go.

We hope this guide helps you perform a thorough technical SEO audit. Use our Site Audit Tool to identify technical issues and find ways to fix them so you can improve your SEO. Now go ahead and get started on auditing your website!
