Before getting into the list of reasons why your site might not be showing up, go through this quick pre-checklist of things to do and things you should have set up. While these steps might not fix the issue, they may help you narrow it down and save you from working through the checklist item by item to find the real culprit.

Pre-Audit Checklist

Do you have Google Search Console set up?

Do you have Bing Webmaster Tools set up?

Is Google Analytics set up and collecting data? If you are not sure, test that first.

Crawl your site with the SEMrush Audit tool and/or your crawler of choice.

If you are more advanced, you should also:

Get access to your cPanel or the backend of your web host, then find and look at your log files.

If you already have access, it is highly recommended that you look at your log files and make sure user and bot sessions are showing up. Do you see any sessions at all, or nothing?
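If your host gives you raw access logs, a short script can tell you whether search engine bots are visiting at all. Here is a minimal sketch that assumes the common Apache/Nginx "combined" log format; the sample lines are made up and use documentation IP addresses. Matching on the user-agent string is only a first pass, since any client can claim to be Googlebot.

```python
import re
from collections import Counter
from typing import Iterable

# Assumes the Apache/Nginx "combined" log format:
# host ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Label -> lowercase substring to look for in the user-agent header.
BOT_TOKENS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}


def count_sessions(lines: Iterable[str]) -> Counter:
    """Count requests per crawler label; everything else is counted as 'Other'."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the combined format
        agent = match.group("agent").lower()
        label = next(
            (name for name, token in BOT_TOKENS.items() if token in agent), "Other"
        )
        counts[label] += 1
    return counts


# Made-up sample lines for illustration.
sample = [
    '192.0.2.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /robots.txt HTTP/1.1" 200 68 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.5 - - [10/Oct/2023:13:57:12 +0000] "GET /about HTTP/1.1" 200 3100 '
    '"https://www.google.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(count_sessions(sample))
```

If Googlebot never shows up over a few weeks of logs, the problem is likely upstream of rankings: the site is blocked, unreachable, or not being discovered. To confirm that a hit really came from Googlebot, Google recommends a reverse DNS lookup on the requesting IP.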

Now that you have done those items, we are ready to move on to the issues that may be keeping your site out of Google.

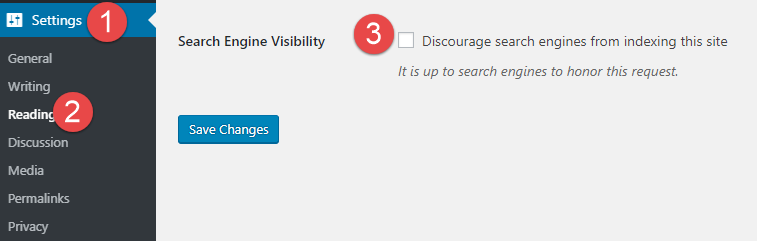

1. Search Engine Visibility Set to “Discourage” in WordPress

This is a WordPress setting that is often turned on by default. You will see the box checked next to “Discourage search engines from indexing this site,” which updates your robots.txt for you and tells search engines such as Bing and Google to go away.

Even if your site has been live for days, weeks, or years, this setting is the first thing I look at when I hear a site owner talk about having issues getting into search engines.

2. Robots.txt Is Set to Disallow

You can check this by going to http://www.yourdomain.com/robots.txt or https://www.yourdomain.com/robots.txt. If you see the following code in your robots.txt, you are telling search engines not to crawl your site:

User-agent: *
Disallow: /

How can this happen? There are several reasons and ways it tends to happen:

The Search Engine Visibility setting in WordPress was checked (see #1).

A dev or staging version of the code was moved to production, and its robots.txt, which contained this disallow rule, came along with it.

There could be a typo in the file, or a misunderstanding of robots.txt and how it works.

An example I often see that scares me is the following:

User-agent: *
Disallow:

While this means you want ALL search engines to crawl your site, it is just one character away from blocking your site from ALL engines. I typically suggest NOT putting this in your robots.txt, as I always fear someone will come along, accidentally add a “/”, and take the site out of Google and Bing.
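To see how a crawler reads those two near-identical files, you can run them through the robots.txt parser in Python's standard library. This is a minimal sketch; `yourdomain.com` is a placeholder, and Python's parser follows the original robots.txt rules, so its handling of wildcards and rule precedence may not match Google's exactly.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blocks_everything = "User-agent: *\nDisallow: /"
allows_everything = "User-agent: *\nDisallow:"  # empty Disallow means "allow all"

print(is_crawlable(blocks_everything, "https://www.yourdomain.com/"))  # False
print(is_crawlable(allows_everything, "https://www.yourdomain.com/"))  # True
```

To check a live file instead of pasted text, `RobotFileParser` can also load it itself via `set_url()` followed by `read()`.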

Testing Tools

Technical SEO’s Robots Tester

Google’s Robots Tester via GSC

3. Noindex Meta Tag

Like #1 and #2, this is a sure-fire way to take a page, or an entire site, out of Google. The tag can be added by a WordPress plugin or even hard-coded by someone into a header/site template.

<meta name="robots" content="noindex"/>

To check if this is the issue, do the following:

Open your site in your browser of choice

Right-click on the page and select “view source” or “view page source”

Search for robots

Search for noindex

Another way to find which pages have the meta tag is to crawl your site with your crawler of choice or run an audit with the SEMrush Site Audit tool. Both should give you a list of pages, and then you can dig further into your CMS or code to figure out the issue.
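If you only have a handful of pages to check, the view-source steps above can also be scripted. Here is a minimal sketch that scans HTML you have already downloaded (fetching is left out) for a robots meta tag containing noindex. It only checks the meta tag described in this section; the sample snippets in the tests are hypothetical.

```python
from html.parser import HTMLParser

# Meta names whose content carries indexing directives; "googlebot" targets Google only.
ROBOTS_META_NAMES = {"robots", "googlebot"}


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <meta ... /> tags are also routed here by HTMLParser.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ROBOTS_META_NAMES:
            content = attributes.get("content", "")
            self.directives.extend(part.strip().lower() for part in content.split(","))


def has_noindex(html: str) -> bool:
    """True if any robots meta tag in the HTML contains a noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives
```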

4. Host or Site Migration

Did you recently move your site to a new CMS, theme, or web host, switch from HTTP to HTTPS, or go through some other large migration? Did you also change lots of URLs and implement lots of redirects, or maybe no redirects at all? Any of these things could be affecting your site in the eyes of search engines and possibly leaving them very confused.

Here are some checklists for HTTP-to-HTTPS and website migrations to help you (even if you already made the move, they should help you troubleshoot):

HTTP to HTTPS: A Complete Guide to Securing Your Website

The HTTP to HTTPs Migration Checklist in Google Docs to Share, Copy & Download

The SEMrush Website Migration Checklist

So if you have already done a migration, it is very possible you missed something covered in one of these checklists.

5. JavaScript & Mega Menus

These are separate issues, but they often appear together. If all your navigation is in JavaScript, it is hard for engines to crawl, and your deeper internal pages can become isolated orphan pages.
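One rough way to see whether your navigation depends on JavaScript is to list the links present in the raw HTML, which is what “view source” shows before any scripts run, and compare them with the menu you see in the browser. Here is a minimal sketch using Python's standard HTML parser; the sample page is hypothetical. Google can render JavaScript, so a link missing here is a warning sign rather than proof it will never be found.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags in server-rendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and javascript: pseudo-links; they lead nowhere crawlable.
        if href and not href.startswith(("#", "javascript:")):
            self.hrefs.append(href)


def raw_html_links(html: str) -> list:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.hrefs


# Hypothetical page whose menu is only injected by a script.
page = """
<nav id="menu"></nav>
<script>
  document.getElementById("menu").innerHTML = '<a href="/mens/shoes/">Shoes</a>';
</script>
<a href="/contact/">Contact</a>
<a href="#top">Back to top</a>
"""
print(raw_html_links(page))  # ['/contact/']
```

The “Shoes” menu link lives inside a script, so it never appears as a real link in the source.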

If your site is built purely on JavaScript, you might have much larger issues than just a Mega Menu or menu in JavaScript. Bartosz and the Onely team have some research around JavaScript SEO that you might want to dig into, as well as a series by Google on JavaScript SEO.

Even if you implemented a Mega Menu in an SEO-friendly manner, it can still be an issue for SEO and crawling in other ways. John Mueller started a thread on Twitter where a number of people support, hate, and praise Mega Menus; it might be an interesting read for you.

With that being said, Mega Menus can cause issues and deserve to be on this list. If you are having issues with crawlers, look at your menu code and see whether it is generating thousands or tens of thousands of lines of code and is the reason for your issues.

6. Redirects

Redirects are one of the main reasons #4 tends to cause problems. More specifically, the issue is usually either missing redirects or redirect chains. If you move your site to a new domain or move all your pages to new URLs but do not implement redirects, crawlers will only know about your old site and pages, not the new ones. I have often seen redirects, both missing ones and chains, be the main obstacle to getting sites and pages into search engines.

If you are wondering what a “redirect chain” is, it is simple: a series of hops, with multiple 301 or 302 responses in a row. A browser or crawler might see something like this:

Land on site.com/page.php -> 301 Redirect -> site.com/productpage.php -> 302 Redirect -> site.com/productpage5.php -> 302 Redirect -> site.com/page3.php, and finally get a 200 response.

There is some coverage of John Mueller’s comments (from late 2018) about redirects, PageRank, and why you should try to avoid redirect chains.
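To see a chain like this hop by hop, you need to request each URL without letting the client follow redirects automatically. Here is a minimal sketch that keeps the HTTP layer abstract: `fetch` is a hypothetical callable returning the status code and `Location` header for a single request (in practice you might implement it with `http.client`). The example replays the chain above from a lookup table, so no network access is needed.

```python
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin

# Hypothetical fetcher: one request, no redirect following -> (status, Location or None).
Fetcher = Callable[[str], Tuple[int, Optional[str]]]

REDIRECT_STATUSES = {301, 302, 303, 307, 308}


def trace_redirects(url: str, fetch: Fetcher, max_hops: int = 10) -> List[Tuple[str, int]]:
    """Follow redirects one hop at a time and return [(url, status), ...]."""
    chain: List[Tuple[str, int]] = []
    seen = set()
    while True:
        if url in seen:
            raise ValueError(f"Redirect loop at {url}")
        seen.add(url)
        status, location = fetch(url)
        chain.append((url, status))
        if status not in REDIRECT_STATUSES or not location:
            return chain  # final response (200, 404, ...) or a redirect with no target
        if len(chain) > max_hops:
            raise ValueError(f"More than {max_hops} redirects from {chain[0][0]}")
        url = urljoin(url, location)  # Location headers may be relative


# The chain from the example above, replayed from a table instead of the network.
responses = {
    "https://site.com/page.php": (301, "https://site.com/productpage.php"),
    "https://site.com/productpage.php": (302, "https://site.com/productpage5.php"),
    "https://site.com/productpage5.php": (302, "/page3.php"),
    "https://site.com/page3.php": (200, None),
}

chain = trace_redirects("https://site.com/page.php", responses.__getitem__)
for hop_url, status in chain:
    print(status, hop_url)
print(f"{len(chain) - 1} redirects before the final response")
```

Ideally, every old URL reaches its 200 response in a single hop; anything longer is a chain worth collapsing.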

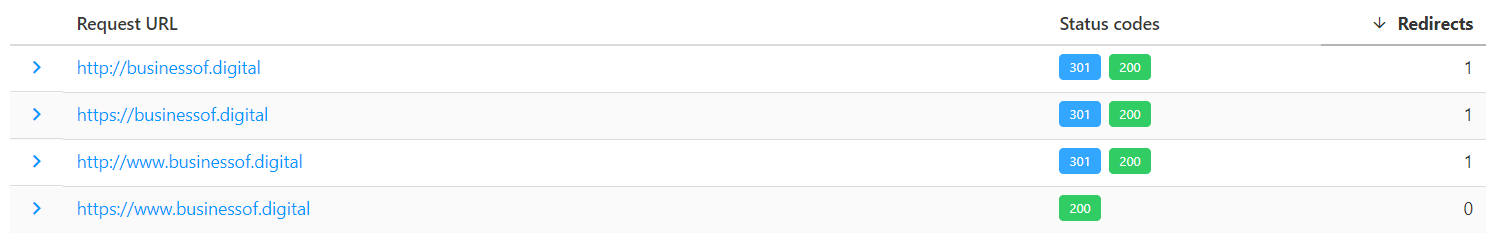

7. WWW or Non-WWW Redirects

While this could fall under #6, I thought it was worth splitting out on its own. I once had a client, a SaaS company, that of course had a login page for its service. It also had white-label partners and a long list of checks in the code to make sure the proper login page skin was shown based on cookies, referrer, and much more.

What a normal user didn’t see was that the page would often go through a series of redirects. These hops included 404, 302, 301, 301, and a few other statuses; in short, it was a mess. The product team, however, was concerned that no matter what we did, we couldn’t get the login page to show up in Google.

As you can guess, this was because Google had no idea what the valid URL for the login page was. Now, what do your HTTP www, HTTP non-www, HTTPS www, and HTTPS non-www homepages do? Do they all resolve, or do they also bounce around like a tennis ball at a match?

If you are not sure, I recommend https://httpstatus.io/ as a very fast way to check. Simply drop in your domain, select “Canonical domain check”, and hit the Check Status button.

What you want to see is three of the four versions of your domain redirecting to the fourth, like this:

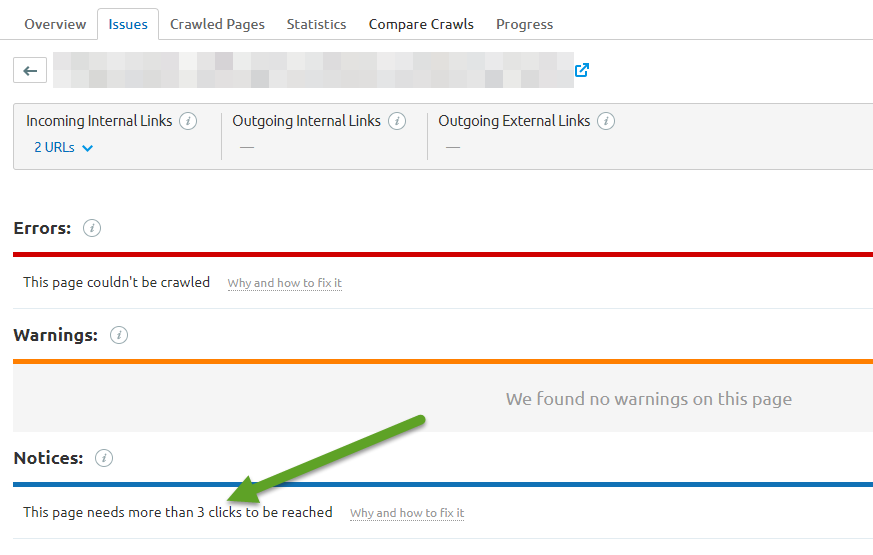

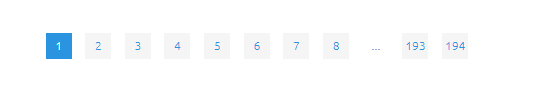
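The same canonical domain check can be sketched in code. This version builds the four homepage variants and follows each one's redirects using a hypothetical `fetch` callable (status code and `Location` header for one request, with no automatic redirect following). The “healthy” rule encoded here is the one described above: every variant ends on one URL that returns 200, and none takes more than one hop to get there. The domain and responses are made-up test data.

```python
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin

# Hypothetical fetcher: one request, no redirect following -> (status, Location or None).
Fetcher = Callable[[str], Tuple[int, Optional[str]]]

REDIRECT_STATUSES = {301, 302, 303, 307, 308}


def homepage_variants(domain: str) -> List[str]:
    """http/https x non-www/www versions of the homepage."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [f"{scheme}://{host}/" for scheme in ("http", "https") for host in (bare, "www." + bare)]


def resolve(start: str, fetch: Fetcher, max_hops: int = 10) -> Tuple[str, int, int]:
    """Return (final_url, final_status, hops) for one starting URL."""
    url, hops = start, 0
    status, location = fetch(url)
    while status in REDIRECT_STATUSES and location:
        hops += 1
        if hops > max_hops:
            raise ValueError(f"Too many redirects starting from {start}")
        url = urljoin(url, location)
        status, location = fetch(url)
    return url, status, hops


def is_consolidated(domain: str, fetch: Fetcher) -> bool:
    """True if all four variants land on the same 200 URL in at most one hop."""
    results = [resolve(variant, fetch) for variant in homepage_variants(domain)]
    final_urls = {final for final, _, _ in results}
    return (
        len(final_urls) == 1
        and all(status == 200 for _, status, _ in results)
        and all(hops <= 1 for _, _, hops in results)
    )


canonical = "https://www.example.com/"
good = {
    "http://example.com/": (301, canonical),
    "http://www.example.com/": (301, canonical),
    "https://example.com/": (301, canonical),
    canonical: (200, None),
}
print(is_consolidated("example.com", good.__getitem__))  # True
```

If, say, the HTTP non-www version first hops to HTTPS non-www and only then to the www version, the check fails on the two-hop path even though everything eventually resolves.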

8. Deep Content or Click Depth

This is often called either “clicks from the homepage” or “click depth,” and one of the issues that creates it is pagination. Deep content, meaning content many clicks away from the homepage, is most often found on very large e-commerce sites and large blogs.

To see what your click depth is and which content is currently affected, you can check it with your site crawler of choice or with the SEMrush Audit Tool. Both methods will help you identify pages that are 5, 6, 7, and at times even 10+ clicks from the homepage.
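Click depth is usually measured as the fewest clicks needed to reach a page from the homepage, which is exactly what a breadth-first search over internal links gives you. Here is a minimal sketch, assuming you already have a map of each page to the internal links it contains (for example, exported from your crawler); the URLs are hypothetical and mimic a paginated blog. Pages that never get a depth at all are unreachable from the homepage, i.e., orphans.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], homepage: str = "/") -> Dict[str, int]:
    """Fewest clicks from the homepage to every reachable page (breadth-first search)."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order = fewest clicks
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def deep_pages(depths: Dict[str, int], min_depth: int = 5) -> List[str]:
    """Pages at or beyond min_depth clicks, sorted for readability."""
    return sorted(page for page, depth in depths.items() if depth >= min_depth)


# Hypothetical blog where an older post is only reachable through pagination.
links = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/page/4/"],
    "/blog/page/4/": ["/blog/old-post/"],
}
depths = click_depths(links)
print(depths["/blog/old-post/"])  # 5
print(deep_pages(depths))  # ['/blog/old-post/']
```

Any extra internal link from a shallower page (a category, a tag, or a related-post link) lowers the depth this search reports for the deep page.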

Here is a screenshot of the pagination on the SEMrush Blog; pagination is often a contributor to deep content as well:

The SEMrush Blog, however, has several ways for internal links to be found: tags, user/author pages, internal links in blog posts, and others. On many sites, however, these other methods of internal linking are not as well done, and the site simply uses the blog homepage and maybe a few categories to try to link to hundreds, thousands, or even hundreds of thousands of products and pages. For a deeper dive into pagination, you should read Arsen’s post “Pagination; You’re Doing it Wrong”.

So if most of your content is not in Google or Bing and is 5, 6, or even 10+ clicks from the homepage, I suggest you do an audit and look for ways to increase the internal links to those pages.

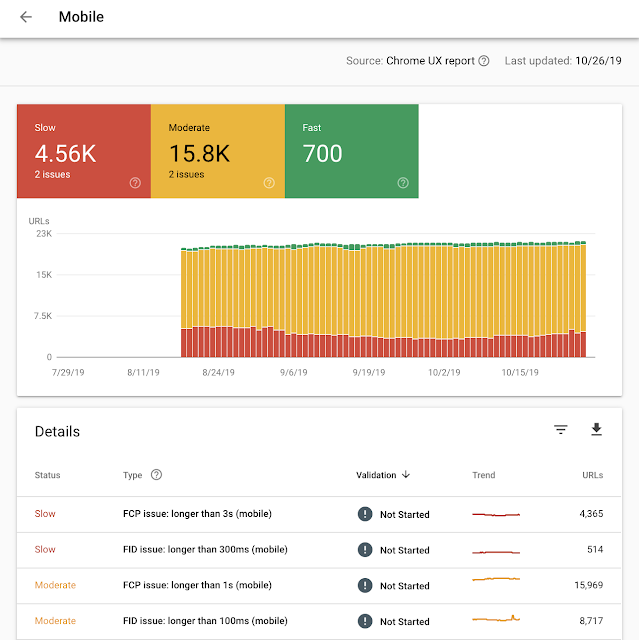

9. Page Speed / Page Load Time

While I have personally never seen this keep an entire site out of Google/Bing, I have seen it affect specific pages or page types. Just because I have not personally seen slow page speed affect an entire site doesn’t mean it hasn’t happened.

If your server is not responding, or is slow to respond to search engines, that slow response will make your site look slow (which technically it is), and your site’s ability to rank well, or at all, could be compromised. Perhaps more importantly, I have seen slow pages hurt a page’s ability to convert users into leads and sales, and that impact on your bottom line matters most.

To test your site’s speed, you can use a number of tools like Google Search Console’s new speed report, Google Analytics, Lighthouse in Chrome, or any of the other free testers available on the web.

Image source: https://webmasters.googleblog.com/2019/11/search-console-speed-report.html
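The tools above report many metrics, but a crude server response check only takes a few lines. This sketch times a single request from just before the connection opens until the status line and headers have been read, so it includes DNS, TCP, and TLS setup. Treat it as a rough spot check from your own network, not a measure of what search engines experience.

```python
import http.client
import time
from urllib.parse import urlsplit


def response_time(url: str, timeout: float = 10.0) -> tuple:
    """Return (status, seconds until response headers arrived) for a single GET."""
    parts = urlsplit(url)
    connection_class = (
        http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    )
    connection = connection_class(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    start = time.perf_counter()
    try:
        connection.request("GET", path)  # connects lazily, then sends the request
        response = connection.getresponse()  # returns once status line + headers are read
        elapsed = time.perf_counter() - start
        response.read()  # drain the body so the connection closes cleanly
        return response.status, elapsed
    finally:
        connection.close()
```

For example, `response_time("https://www.yourdomain.com/")` returns the status code and elapsed seconds; running it a few times and looking at the spread tells you more than a single number.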

10. Faceted Navigation

If you are still reading this list and didn’t flag your site for duplicate or thin content, perhaps the issue is that your e-commerce site uses faceted navigation. While this is not only a problem for e-commerce sites, that is where it is most often seen.

So, what is faceted navigation, and why could it be tripping up search engines? Spider traps. Crawl budget. Duplicate content. These are just some of the negative side effects of poorly implemented faceted navigation.

Overall, faceted navigation can be great for users and crawlers, but here are some issues your site may have that could be hurting its ability to get crawled and rank well:

Issue: URL Parameter Order. Your URL parameters can appear in any order, which makes the same page look like new pages to Google. Below are three URLs that all present the same list but appear to be three unique pages:

/mens/?type=shoes&color=red&size=10

/mens/?color=red&size=10&type=shoes

/mens/?type=shoes&size=10&color=red
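One common way to consolidate order-only variants like these is to normalize the query string, for example by sorting the parameters, and use that single form in internal links or as the canonical URL. Here is a minimal sketch; sorting is an assumed convention, and it is only safe if your site really ignores parameter order.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize_facet_url(url: str) -> str:
    """Rewrite a faceted URL with its query parameters in sorted order."""
    parts = urlsplit(url)
    params = sorted(parse_qsl(parts.query, keep_blank_values=True))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(params), parts.fragment))


variants = [
    "/mens/?type=shoes&color=red&size=10",
    "/mens/?color=red&size=10&type=shoes",
    "/mens/?type=shoes&size=10&color=red",
]
print({normalize_facet_url(u) for u in variants})  # {'/mens/?color=red&size=10&type=shoes'}
```

All three variants collapse to one URL, which is the form you would link to internally and point canonical tags at.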

Issue: Multiple Paths, i.e., Site Navigation vs. Site Search

On Best Buy, when you drill down to a Mountable & Smart Capable TV, you get https://www.bestbuy.com/site/tvs/smart-tvs/pcmcat220700050011.c?qp=features_facet%3DFeatures~Mountable

The URL showing very well in Google, however, is https://www.bestbuy.com/site/searchpage.jsp?_dyncharset=UTF-8&browsedCategory=pcmcat220700050011&id=pcat17071&iht=n&ks=960&list=y&qp=features_facet%3DFeatures~Mountable&sc=Global&st=pcmcat220700050011_categoryid%24abcat0101001&type=page&usc=All%20Categories

Issue: Limited Crawl Depth. Sometimes fixes that limit the filter options also limit which pages are available to rank, so you are not putting your best foot forward. Example:

Drill down on Grainger to https://www.grainger.com/category/machining/drilling-and-holemaking/counterbores-port-tools/counterbores-with-built-in-pilots?attrs=Material+-+Machining%7CHigh+Speed+Steel&filters=attrs

When searching Google for “High-Speed Steel Counterbores with Built-In Pilots”, a similar but not exact page is ranking — https://www.grainger.com/category/machining/drilling-and-holemaking/counterbores-port-tools/counterbores-with-built-in-pilots.

While each case is unique, the solutions often include:

Canonical Tags

Disallowing via Meta Tags

Disallowing via Robots.txt

Nofollowing Internal Links

Using JavaScript + Other Methods to hide links

11. Your Site Has a Manual or Algorithmic Penalty

This is the opposite of #12, in a way. Instead of having no links, your site likely has links, lots of them even, but they are of the toxic and suspicious variety. Your site could also have been hacked and removed from the index, but more likely, you just have too many toxic links.

So how do you know if you have a penalty? Google and Bing should have told you. To double-check, you can find more info on your site here:

Open Google Search Console

Open Bing Webmaster Tools

Open the email account associated with Google Search Console (or the one the alerts are sent to). Search for “google” and “penalty” to see whether you missed or accidentally deleted a notice from Google.

Since the topic of bad vs. good links is already covered on the site, I won’t go into it here, but if you do have a penalty because of links, you should check out Ross Tavendale’s Weekly Wisdom about Evaluating Links.

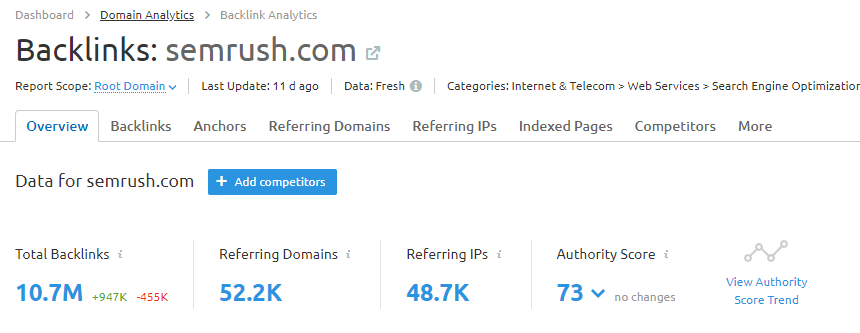

12. Your Site Lacks Links

If you have a new site, it is very likely that you have no or very few links. Since no site starts off with 100s and 1000s of links, it may take some time. Take a look at your Google Search Console or look up your site in SEMrush Backlink Analytics to check how many links you have.

Even without any links, Google and Bing will crawl your site, but you will likely not show up for many competitive keywords without them. So if your site is technically sound, your content is findable, and your on-site SEO is generally in place, it is very possible that you simply lack links and that search engines just haven’t prioritized your site yet.

I have ranked sites, and I see sites rank all the time, with no or very few links, but you can’t count on that happening for every site and industry.

13. Your Site Lacks Content (& Context)

In the tug-of-war between visuals and content, the visual side often wins. As a result, many sites are visually very appealing but leave little to no room for content and context. Think of an art gallery where the floors and walls are white or very simple to let the art stand out. Next to each piece there may be a very small plaque with the artist’s name and maybe the title of the work. If your site is similar, there is no context, and search engines need context to understand more about the art, the artist, and why it is in the gallery.

Often sites put all the content in an image, use only sparse text on a page, or, in many cases, never really spell out in text what the company does. As my co-host wrote in our Podcasting Guide, it is all about finding your angle and telling your story.

Test your site and pages by copying just the main text of the page and showing it to someone – ask them what the page is about, ask them what questions they have and ask them to answer questions about your business/services/product from that content. They likely will score poorly with all those tasks if you have thin content and no context.
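A quick way to prepare that test is to pull out only the text a visitor can actually read on the page. This sketch skips script, style, and title blocks and does not count image alt text, so a page whose message lives inside images will come back nearly empty. The sample HTML is hypothetical, and no particular word count is a rule.

```python
import re
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes, skipping elements whose content is not shown on the page."""

    SKIPPED = {"script", "style", "noscript", "template", "title"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.chunks: list = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_words(html: str) -> list:
    extractor = VisibleTextExtractor()
    extractor.feed(html)
    extractor.close()
    return re.findall(r"[A-Za-z0-9']+", " ".join(extractor.chunks))


# Hypothetical seafood restaurant homepage: the message is all in the image.
page = """
<html><head><title>Home</title><style>h1 { color: navy; }</style></head>
<body>
  <img src="hero.jpg" alt="Fresh local seafood, oysters and crab">
  <h1>Welcome</h1>
  <script>trackVisit();</script>
</body></html>
"""
print(visible_words(page))  # ['Welcome']
```

Showing someone that single word and asking what the business does makes the “no context” problem obvious.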

If you are a seafood restaurant but never mention seafood or any of the types of fish, how would a user or search engine understand what your site is about? If you are a lawn care service but don’t list out any of your offerings, how will a user or search engine know that you do it?

If your description of a product is the same as every other site and is all of 30 short words, how will a user or search engine really understand what you are selling?

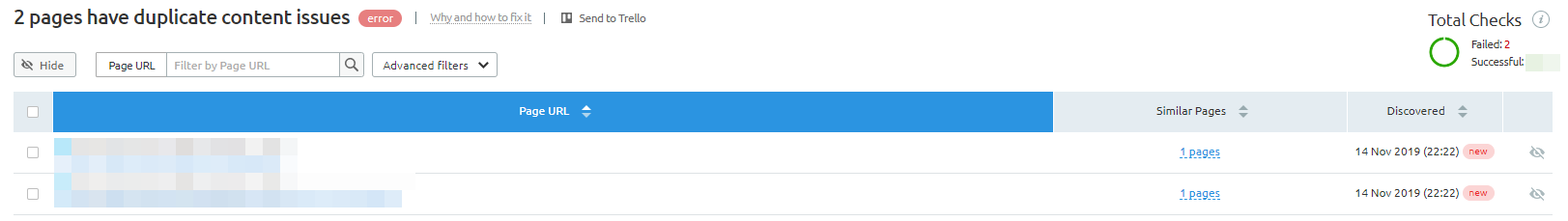

14. You Have LOTS of Content. Lots of Duplicate Content, That Is

If you have a 10-page site and six of the pages target nearby cities, all with 80-99% of the same content, you are likely going to have trouble ranking. The same goes for a site with 10-30 blog posts but 10 category pages and 100 tag pages. The amount of thin and duplicate content is very high, and thus the perceived value of your site is going to be low.

Also, think of a user trying to find something on your site: they likely don’t care about those thin pages, so why would a search engine want to rank them, let alone crawl them?

For a deep dive into identifying duplicate content, you can read How to Identify Duplicate Content, which will walk you through scraping your site.

Another option for finding duplicate content is to use the SEMrush Site Audit tool. If the SEMrush Site Audit bot identifies multiple pages with 80% similarity in content, it will flag them as duplicate content. Pages with too little content may also be counted as duplicate content. After you run an audit, you will see this screen:

In the example above, the issue was simply that Page 2 was supposed to have been redirected in a recent update and was missed.

So, if you are having trouble making gains in the search engines, look at your internal pages to see whether they are too similar, and also compare your content with other sites. A common problem with affiliate and e-commerce sites is that tens, if not hundreds, of sites all use the same product description. If your site has 100 products and there is nothing unique about any of them, you are going to find it tough to rank for the keywords you want.
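If you want to check a small set of pages yourself, a word-level similarity score is enough to surface obvious near-duplicates such as city pages. This sketch uses Python's `difflib.SequenceMatcher` as a stand-in; it is not necessarily how SEMrush measures similarity, and the 0.8 cut-off simply mirrors the 80% figure mentioned above. The page texts are hypothetical, and because every pair is compared, this suits a small site or a sample rather than thousands of pages.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations
from typing import Dict, List, Tuple


def words(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def near_duplicates(pages: Dict[str, str], threshold: float = 0.8) -> List[Tuple[str, str, float]]:
    """Return (url_a, url_b, similarity) for every pair at or above the threshold."""
    tokens = {url: words(text) for url, text in pages.items()}
    flagged = []
    for (url_a, words_a), (url_b, words_b) in combinations(tokens.items(), 2):
        # autojunk=False stops difflib from ignoring very common words on long pages.
        score = SequenceMatcher(None, words_a, words_b, autojunk=False).ratio()
        if score >= threshold:
            flagged.append((url_a, url_b, round(score, 2)))
    return flagged


template = (
    "We offer fast, friendly plumbing repairs in {city}. Call today for a free "
    "quote on leaks, drains, and water heaters."
)
pages = {
    "/plumber-austin/": template.format(city="Austin"),
    "/plumber-dallas/": template.format(city="Dallas"),
    "/about/": "Our family-owned company has served Texas homeowners since 1998.",
}
print(near_duplicates(pages))  # [('/plumber-austin/', '/plumber-dallas/', 0.95)]
```

Swapping only the city name leaves the two location pages 95% identical by this measure, which is exactly the pattern described at the start of this section.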

15. Your Keywords Are Competitive

This could be one of the first things I would recommend checking, but it can also be the last. Often the issue isn’t really that a site isn’t showing up, but that it isn’t showing up for the industry and target keywords that a CEO or someone else wants to see it rank for.

Take a look at this article from Nikolai Boroda that dives into keyword research, and take a stab at better defining your target keywords (and your boss’s expectations).

This is not to say that one day, you won’t compete for the most competitive keywords in your industry, but if your site is new, you will need to build up to that and should start with your brand and longer tail keywords first.

Have you faced any of these issues? Tell us how you dealt with them in the comments.
